Add Support for Amazon Bedrock


You can add Amazon Bedrock as an AI provider under Provider and Model Settings. There are two ways to add it: through Sprinklr, where the provider is managed by a Dynamic Property (DP), or by using Bring Your Own Key (BYOK). Once added, an Amazon Bedrock provider card appears under AI Providers.

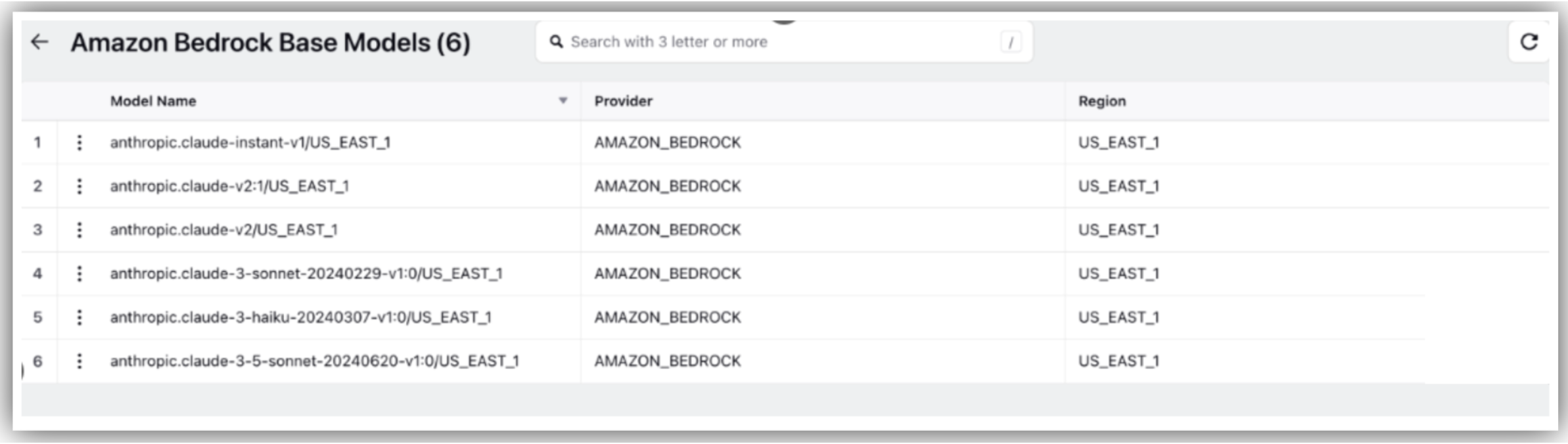

Click Show Models on the provider card to view the supported base models, which you can use across the use cases added in AI+ Studio.

Enablement note: To learn more about getting this capability enabled in your environment, please work with your Success Manager.

For information on navigating AI Studio, refer to Accessing AI Studio.

Integrating Amazon Bedrock

You can integrate Amazon Bedrock with Sprinklr in either of the following two ways:

Using your own key (BYOK)

Using a Sprinklr-provided LLM

Adding Amazon Bedrock using BYOK

To add Amazon Bedrock using Bring Your Own Key (BYOK), follow these steps:

1. Click Add Provider under AI Providers.

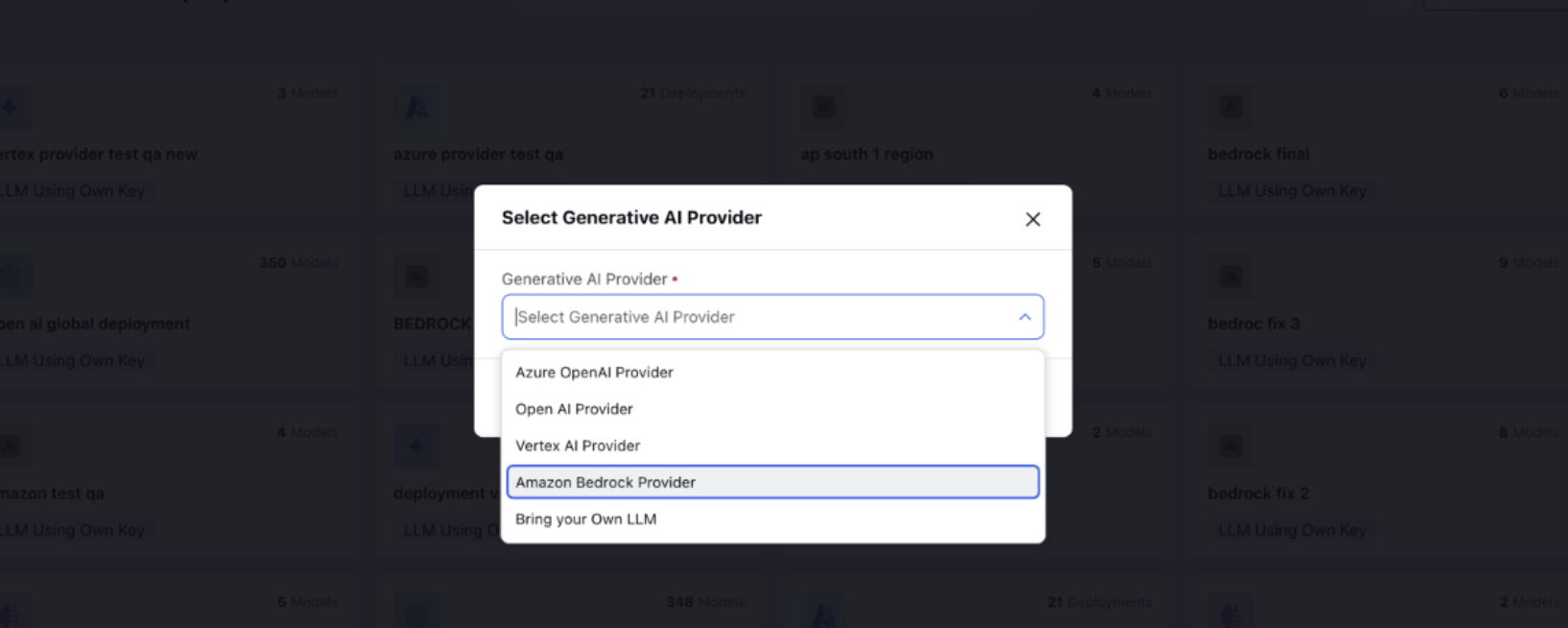

2. Select Amazon Bedrock from the dropdown on the Select Generative AI Provider screen.

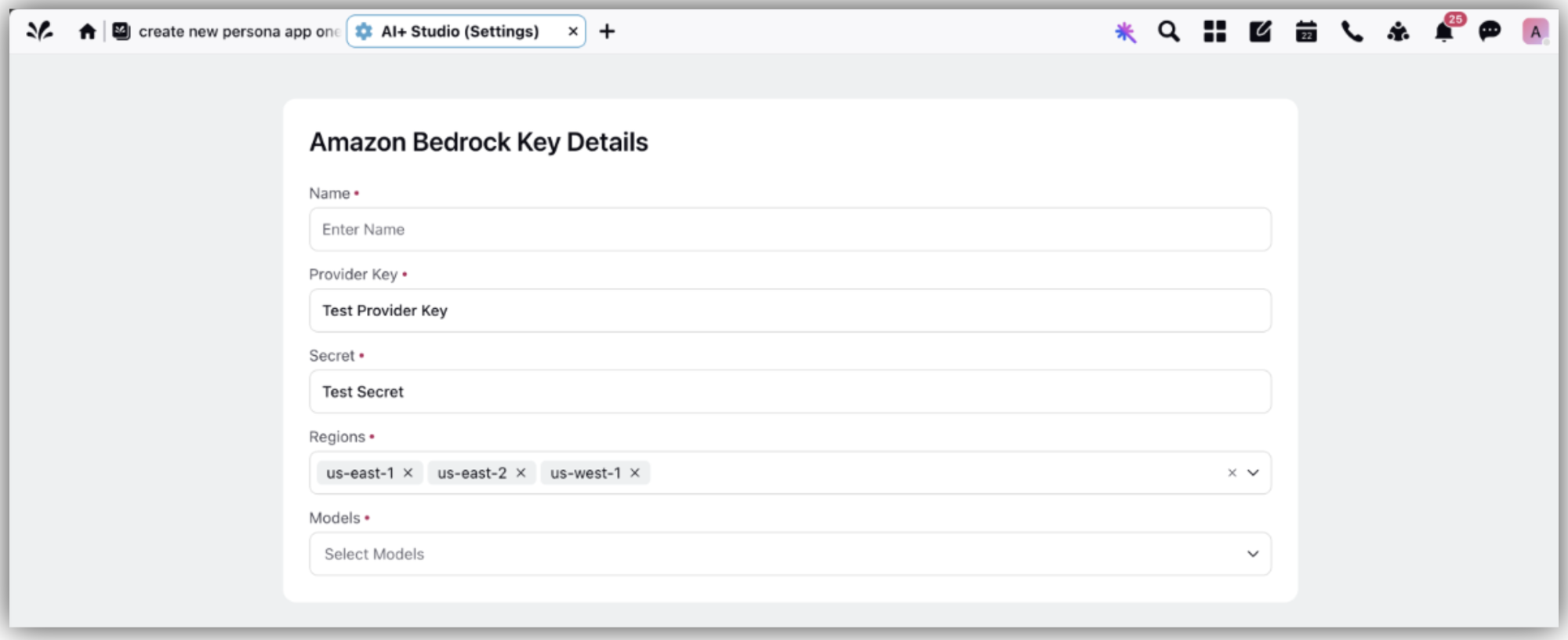

3. Click Next to open the Amazon Bedrock Key Details form.

4. Fill in the fields of the Key Details form and click Save.

5. Models: Select the models you want to integrate into AI+ Studio.

6. Fallback Model: After entering the key details, choose a fallback model. If this is the first provider you add, the fallback model is used as the default deployment for all use cases.

7. The Amazon Bedrock provider card is added under AI Providers, with the tag LLM using Own Key.

Show Models

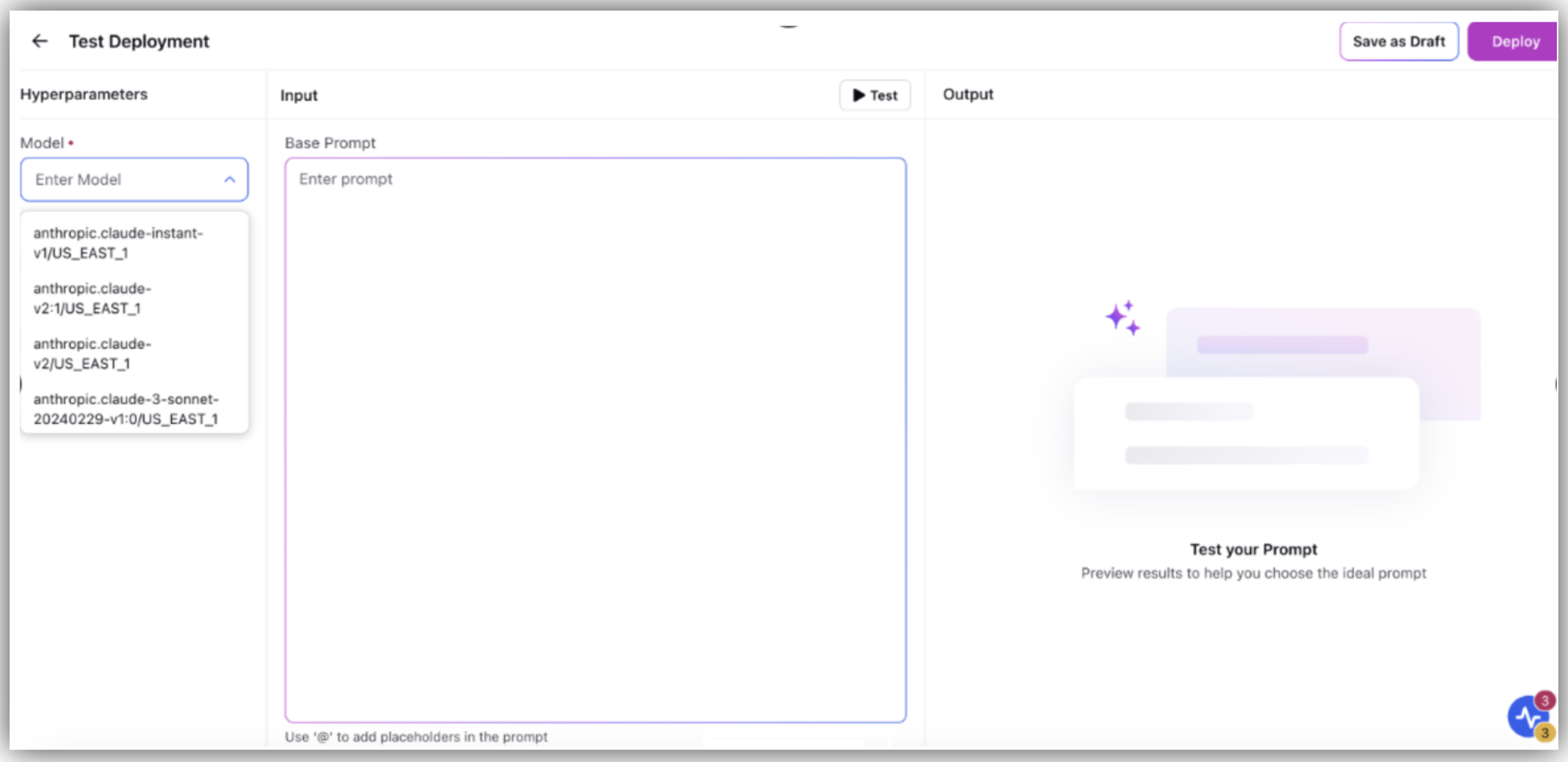

8. Click Show Models on the provider card to view all base models listed under the Base Models tab.

Adding Amazon Bedrock using a Sprinklr-provided LLM

If you have opted for Amazon Bedrock provided through Sprinklr, its provider card appears on the AI Providers screen without any manual addition. A provider via Sprinklr is controlled by a Dynamic Property (DP). Hover over the card and click Show Models to open the Base Models screen, where you can manage the various base models.

Using Amazon Bedrock Models for Deployments

Once a model is added, it can be used across the use cases listed under the Deployed Use Cases section.