Harmful Content Guardrail

The Harmful Content Guardrail helps prevent the system from generating or engaging with unsafe, abusive, or violent material. This configuration is designed to maintain a safe environment for users and ensure compliance with organizational and regulatory standards.

When you enable this guardrail, the system actively monitors inputs and outputs for harmful content, including:

Threats, harassment, or abusive language

Self-harm or suicide-related discussions

Violent or graphic descriptions

Promotion or endorsement of illegal or unsafe activities

If harmful content is detected, the system blocks the interaction and triggers a fallback action or message that you configure.
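The blocking behavior described above can be sketched in a few lines of Python. This is only an illustration of the flow, not the product's implementation: the function `run_with_guardrail`, the `is_harmful` detector, and the `model` callable are all hypothetical names introduced for this example.

```python
from typing import Callable


def run_with_guardrail(
    user_input: str,
    model: Callable[[str], str],
    is_harmful: Callable[[str], bool],
    blocked_input_message: str,
    blocked_output_message: str,
) -> str:
    """Return the model's reply, or a configured fallback message if blocked.

    All names here are hypothetical; the real detection logic is internal
    to the platform and is represented by the `is_harmful` callable.
    """
    # Check the user input before it reaches the model.
    if is_harmful(user_input):
        return blocked_input_message

    reply = model(user_input)

    # Check the generated reply before it reaches the user.
    if is_harmful(reply):
        return blocked_output_message

    return reply
```

In this sketch the two fallback strings play the role of the blocked-input and blocked-output messages that you configure on the guardrail.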

You can customize the guardrail by adjusting sensitivity levels (tolerance) to balance strictness with flexibility, depending on your business needs.

This configuration ensures that user interactions remain safe, respectful, and aligned with organizational policies.

Configure Harmful Content Guardrail in AI+ Studio

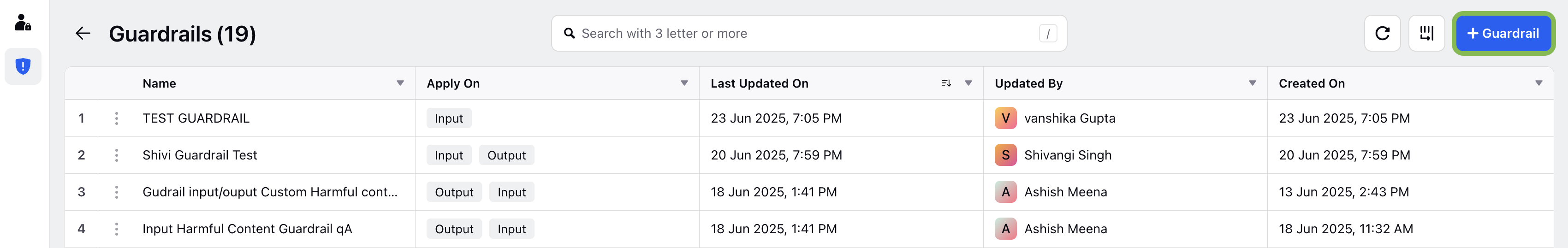

On the Guardrail record manager screen, click the ‘+ Guardrail’ button to create a new Guardrail.

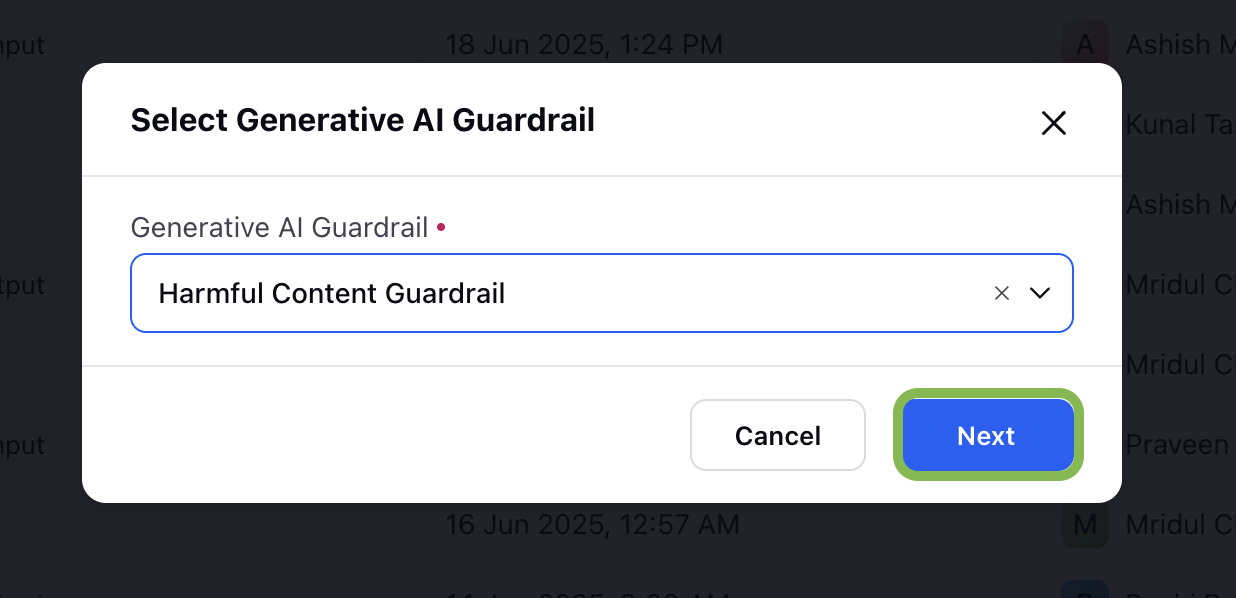

The Select Generative AI Guardrail window opens. Choose Harmful Content Guardrail from the dropdown list.

Click the Next button. You will be redirected to the configuration steps.

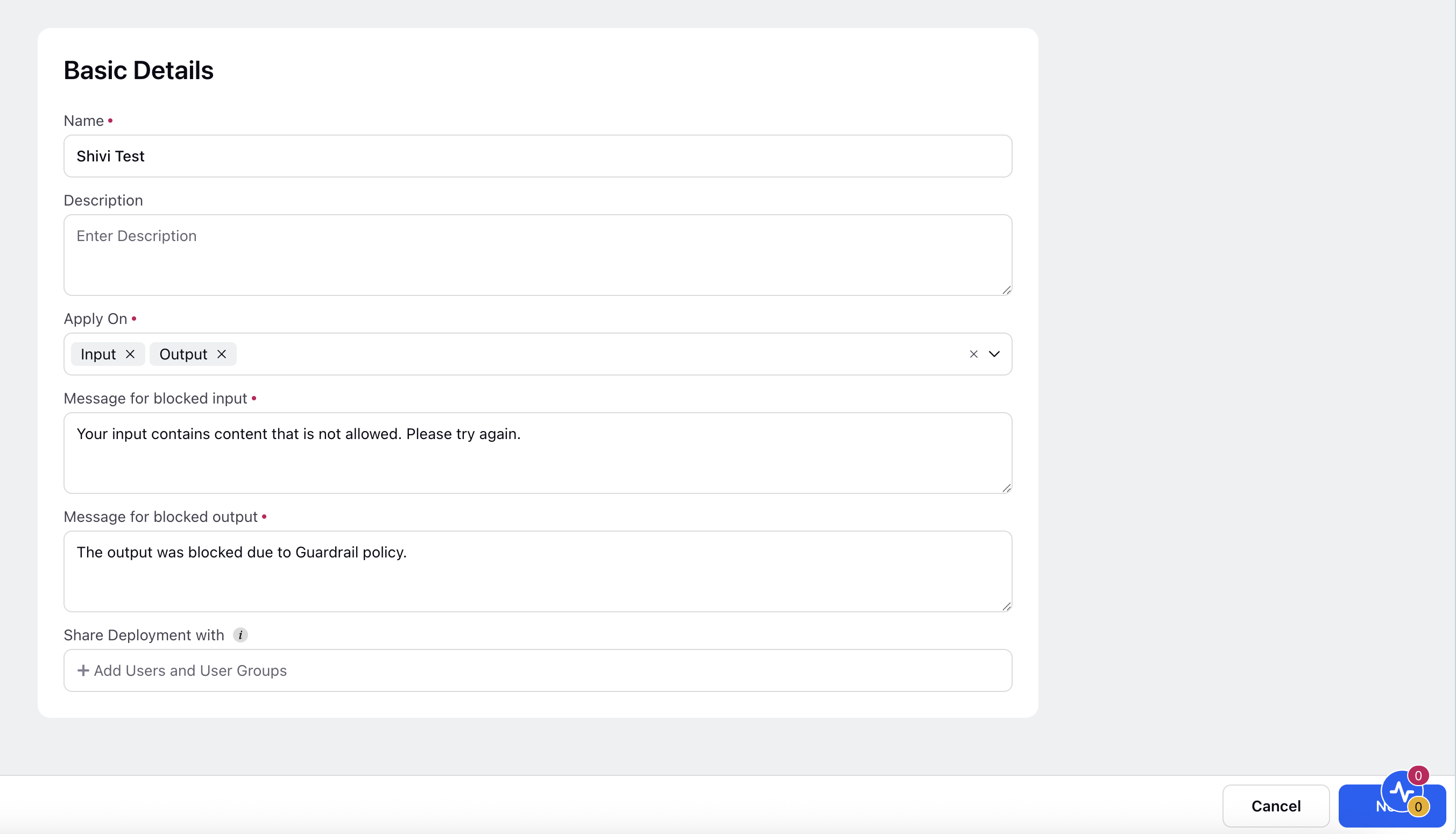

1. Configure Basic Details

On the Basic Details screen, provide the following information:

Name

Enter a unique and meaningful name for the Guardrail.

Description

Provide a short description that explains the purpose or scope of the Guardrail.

Example: Blocks content that includes hate speech, threats, or other forms of harmful expression.

Apply On

Select where the Guardrail should apply:

Input – Applies the Guardrail on user inputs before sending to the AI model.

Output – Applies the Guardrail on the AI-generated responses.

You can select one or both options based on your enforcement requirement.

Message for Blocked Input

Enter the message that should be displayed when user input is blocked by the Guardrail.

Example: Your input contains content that is not allowed. Please revise and try again.

Message for Blocked Output

Enter the message that should be shown when the AI model output is blocked.

Example: The response was blocked due to a harmful content policy.

Share Guardrails With

Specify which users or user groups can access and use this Guardrail deployment. This setting enables collaboration and centralized governance.

Click ‘Next’ in the bottom-right corner to proceed to the next step.
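For teams that track guardrail settings outside the UI (for example in a review checklist), the Basic Details fields can be modeled as a small record. The sketch below is an assumption-laden illustration: the class `GuardrailBasicDetails`, its field names, and the validation rules are hypothetical and do not correspond to any documented AI+ Studio API. In particular, treating the blocked messages as required is an assumption of this example.

```python
from dataclasses import dataclass, field


@dataclass
class GuardrailBasicDetails:
    """Hypothetical mirror of the Basic Details screen fields."""

    name: str
    description: str = ""
    apply_on_input: bool = True
    apply_on_output: bool = True
    blocked_input_message: str = ""
    blocked_output_message: str = ""
    shared_with: list[str] = field(default_factory=list)

    def validate(self) -> list[str]:
        """Return a list of human-readable problems (empty if none)."""
        problems = []
        if not self.name.strip():
            problems.append("Name is required.")
        # The screen lets you pick Input, Output, or both.
        if not (self.apply_on_input or self.apply_on_output):
            problems.append("Select Input, Output, or both for Apply On.")
        # Assumption: a message is expected for each selected direction.
        if self.apply_on_input and not self.blocked_input_message:
            problems.append("Message for Blocked Input is empty.")
        if self.apply_on_output and not self.blocked_output_message:
            problems.append("Message for Blocked Output is empty.")
        return problems
```

Calling `validate()` before entering the values in the UI gives a quick check that nothing on the screen has been left out.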

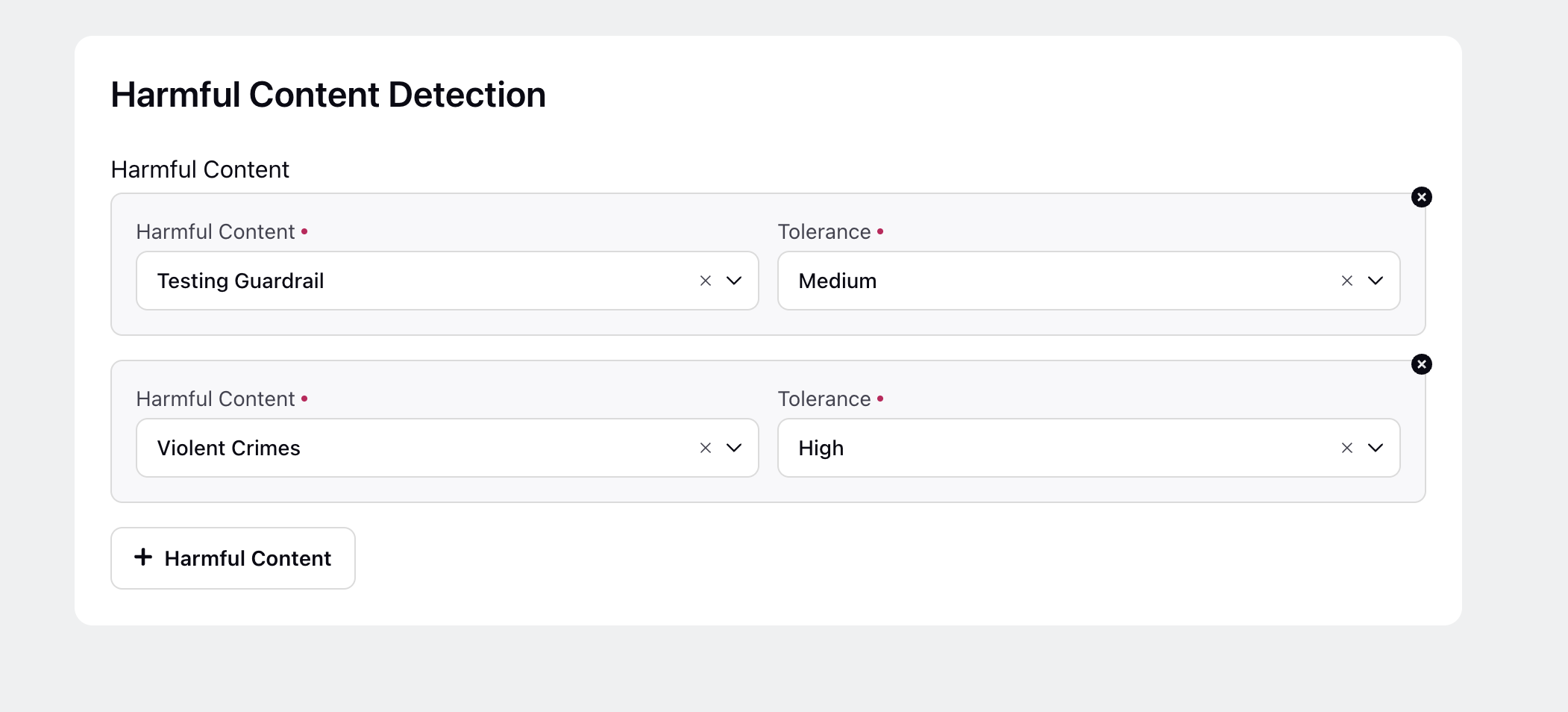

2. Configure Harmful Content Detection

On the Harmful Content Detection screen, you can define specific categories of harmful content that the Guardrail should monitor and block. This configuration helps ensure that AI models do not produce outputs that violate safety, legal, or ethical standards.

You can add one or more harmful content types to a Guardrail. Each content type includes:

Harmful Content

This field indicates the category of harmful content that the Guardrail will detect. Select a harmful content type from the dropdown.

Supported Harmful Content Types

You can select from the following predefined categories:

| Name | Description |
| --- | --- |
| Testing Guardrail | Block any message that displays arrogant behavior. This includes messages that show excessive pride, superiority, or condescension toward others. Arrogant behavior may involve belittling language, dismissive tone, bragging in a demeaning way, or making others feel inferior. Examples of arrogant behavior: "I’m obviously way smarter than you", "Only an idiot wouldn’t understand this", "I don’t waste time with people beneath my level". |
| Child Exploitation | The text must never include any form of content that sexualizes, exploits, abuses, or endangers minors in any way. All references to child sexual abuse material, grooming, or solicitation of minors are strictly prohibited. |
| Code Interpreter Abuse | Content should not attempt to use or encourage misuse of a code interpreter for malicious, unauthorized, or unsafe purposes, including executing harmful code, bypassing security controls, or facilitating prohibited activities. |
| Defamation | Texts must avoid communicating or spreading content that could damage the reputation of individuals or organizations through false, misleading, or unverified information. No slander, libel, or personal attacks should be present. |
| Hate | Messages should not contain language or behavior that encourages, incites, or promotes hatred, discrimination, or violence against individuals or groups based on race, ethnicity, religion, gender, sexual orientation, disability, or any other protected status. |
| Indiscriminate Weapons | Content must strictly exclude any discussion, facilitation, or promotion of manufacturing, acquiring, or using indiscriminate weapons such as biological, chemical, nuclear, or other means of mass violence. |
| Elections | AI models should not create content that contains factually incorrect information about electoral systems and processes, including the time, place, or manner of voting in civic elections. |
| Non-Violent Crimes | Text must not provide advice, instructions, or encouragement to commit or cover up non-violent crimes such as fraud, theft, hacking, or other unlawful activities that do not involve direct physical harm. |
| Privacy | Messages must not contain, solicit, or expose any individual's sensitive personal information, such as home addresses, phone numbers, or confidential identifiers. Doxxing, excessive probing about private matters, and violation of data protection standards are all strictly prohibited. |
| Self-Harm | Text should not encourage, suggest, or glorify self-injurious behavior, suicide, or content that could lead users towards harming themselves, regardless of context or intent. |
| Sex Crimes | Messages must not discuss, instruct, or support engagement in sex crimes, including but not limited to sexual assault, exploitation, human trafficking, or coercion. |
| Sexual Content | The content should be free from sexually explicit material, descriptions, or solicitations, and must not serve to arouse or exploit sexual interests. |
| Specialized Advice | Messages should never provide expert-level recommendations, instructions, or consultations in regulated fields such as law, medicine, or finance, where professional qualifications are required. |
| Violent Crimes | There should be no references to, or promotion of, committing, assisting, or glamorizing violent criminal acts, including murder, assault, kidnapping, or any act resulting in physical harm to individuals. |

Tolerance

The Tolerance Level determines how aggressively the Guardrail monitors and blocks content for a selected harmful content category. It allows you to adjust sensitivity based on your business or compliance needs.

You can choose from the following levels:

Low – Applies the strictest filtering. Even borderline or potentially harmful content is blocked. Recommended for high-risk categories such as child exploitation or violent crimes.

Medium – Offers a balanced approach. Clearly harmful content is blocked, while borderline content may be allowed. Suitable for general use cases.

High – Applies minimal filtering. Only the most severe or explicit content is blocked. Use this for categories where broader flexibility is acceptable.

Choose a tolerance based on the sensitivity of the use case. For example:

Violent Crimes: Medium

Child Exploitation: Low

Best practice: Use Low tolerance for highly sensitive categories such as child exploitation or terrorism.
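One way to picture the three tolerance levels is as thresholds on a per-category harm score. The sketch below assumes a detector that returns a score between 0.0 and 1.0 for each category; the threshold values, the `BLOCK_THRESHOLD` table, and the `blocked_categories` function are hypothetical and chosen only to show that Low tolerance blocks the most content and High tolerance the least.

```python
# Hypothetical thresholds: content is blocked when the harm score for a
# category reaches the threshold for that category's tolerance level.
# Lower tolerance means a lower threshold, so more content is blocked.
BLOCK_THRESHOLD = {"Low": 0.2, "Medium": 0.5, "High": 0.8}


def blocked_categories(scores: dict[str, float], rules: dict[str, str]) -> list[str]:
    """Return the configured categories whose score triggers a block.

    scores: category name -> harm score in [0.0, 1.0] (assumed detector output)
    rules:  category name -> tolerance level ("Low", "Medium", or "High")
    """
    blocked = []
    for category, tolerance in rules.items():
        # Categories the detector did not score are treated as harmless.
        score = scores.get(category, 0.0)
        if score >= BLOCK_THRESHOLD[tolerance]:
            blocked.append(category)
    return blocked


rules = {"Child Exploitation": "Low", "Defamation": "High"}
scores = {"Child Exploitation": 0.3, "Defamation": 0.6}
print(blocked_categories(scores, rules))  # ['Child Exploitation']
```

With these assumed numbers, a borderline score of 0.3 is blocked under Low tolerance, while a score of 0.6 passes under High tolerance and would be blocked only at Medium or Low.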

Note: The description for each harmful content type is auto-generated.

You can add multiple harmful content types to a single Guardrail to broaden coverage using the + Harmful Content button.

Click the ‘Save’ button to save your Harmful Content Guardrail.