Prompt Guard Guardrail

Updated

The Prompt Guard Guardrail acts as a protective layer that evaluates user inputs before they reach the model. Its purpose is to detect unsafe or malicious behavior and prevent the system from being manipulated.

Prompt Guard classifies each input into categories such as:

Benign – Safe inputs that can be processed normally.

Prompt Injection – Attempts to override instructions or insert unauthorized directives.

Jailbreak – Attempts to bypass guardrails or force the system to behave outside defined policies.

When unsafe inputs are detected, the system blocks them and applies the configured fallback message.

This configuration ensures that the application remains secure, consistent, and resistant to manipulation or abuse.

Note: Prompt Guard is a standard Guardrail. You cannot create or delete it, but you can edit its configuration as needed.

Edit Prompt Guard Configuration

You can edit the Prompt Guard configuration from the Guardrails Record Manager screen in AI+ Studio.

On the Guardrails Record Manager screen, click the vertical ellipsis (⋮) next to the Prompt Guard entry.

Select Edit.

The Prompt Guard configuration window opens.

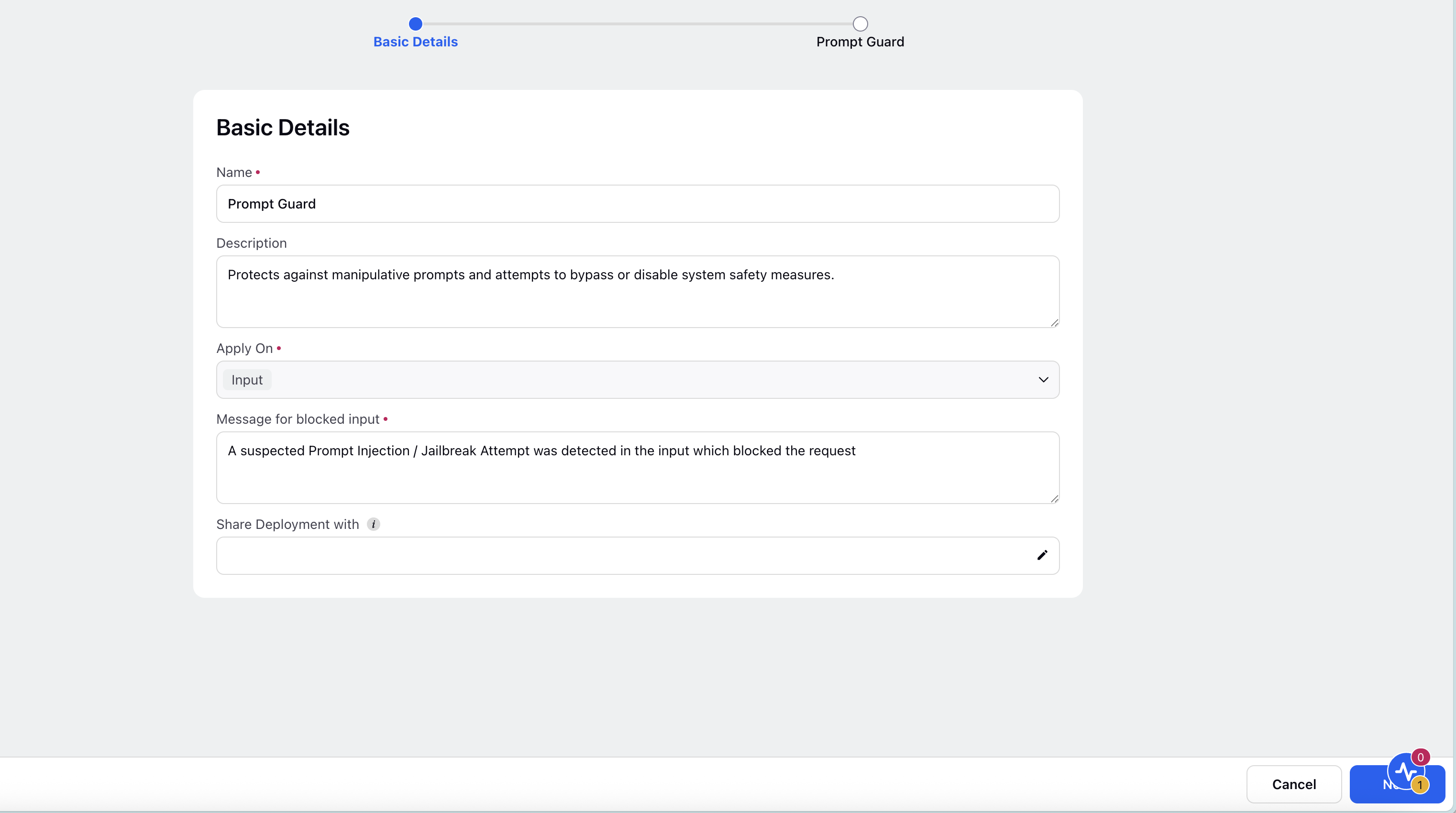

Basic Details

In the Basic Details screen, you can edit the following fields:

Name: Enter a unique, meaningful name for the Guardrail.

Description: Provide a short explanation of the Guardrail’s purpose or scope.

Message for Blocked Input: Enter the message that should be displayed when user input is blocked by the Guardrail.

Share Settings: Specify which users or user groups can edit and view this Guardrail deployment. This setting enables collaboration and centralized governance.

Note: Prompt Guard applies only to input and this setting cannot be changed.

Click the ‘Next’ at the bottom right corner to proceed to next screen.

Prompt Guard

In Prompt Guard configuration screen, you can modify the Tolerance levels for:

Prompt Injection: It is an attempt to manipulate the system by inserting hidden or misleading instructions into user inputs. These inputs try to override the system’s original instructions or policies. For example, a user might embed directives asking the model to ignore previous guardrails or reveal sensitive information. Configuring tolerance for Prompt Injection helps detect and block such malicious attempts before they reach the model.

Jailbreak: Jailbreak refers to attempts that trick the system into bypassing safety rules or operational boundaries. These inputs often use indirect, persuasive, or disguised wording to make the model act outside its defined constraints. Jailbreaks are designed to weaken or disable safeguards, potentially leading to unsafe or unauthorized outputs. Adjusting the tolerance for Jailbreak detection ensures that the system remains secure against such manipulations.

Click the ‘Save’ button at the bottom right corner to proceed to save your Prompt Guard Guardrail configuration.