Implementation Steps for BYOM in AI+ Studio

You can add your own custom LLM by configuring BYOM as a provider.

Step 1: Add a Provider

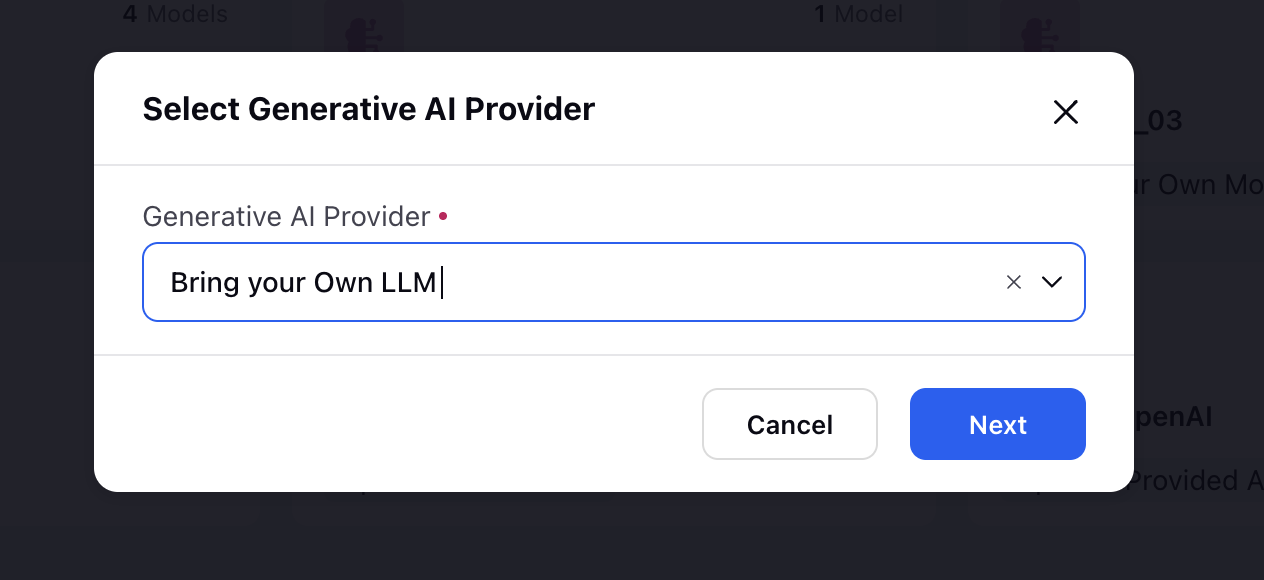

In AI+ Studio, navigate to the Providers page.

Click Add Provider in the top-right corner.

From the dropdown, select Bring Your Own LLM.

Click Next. You will be redirected to the configuration page.

Step 2: Configure BYOM Details

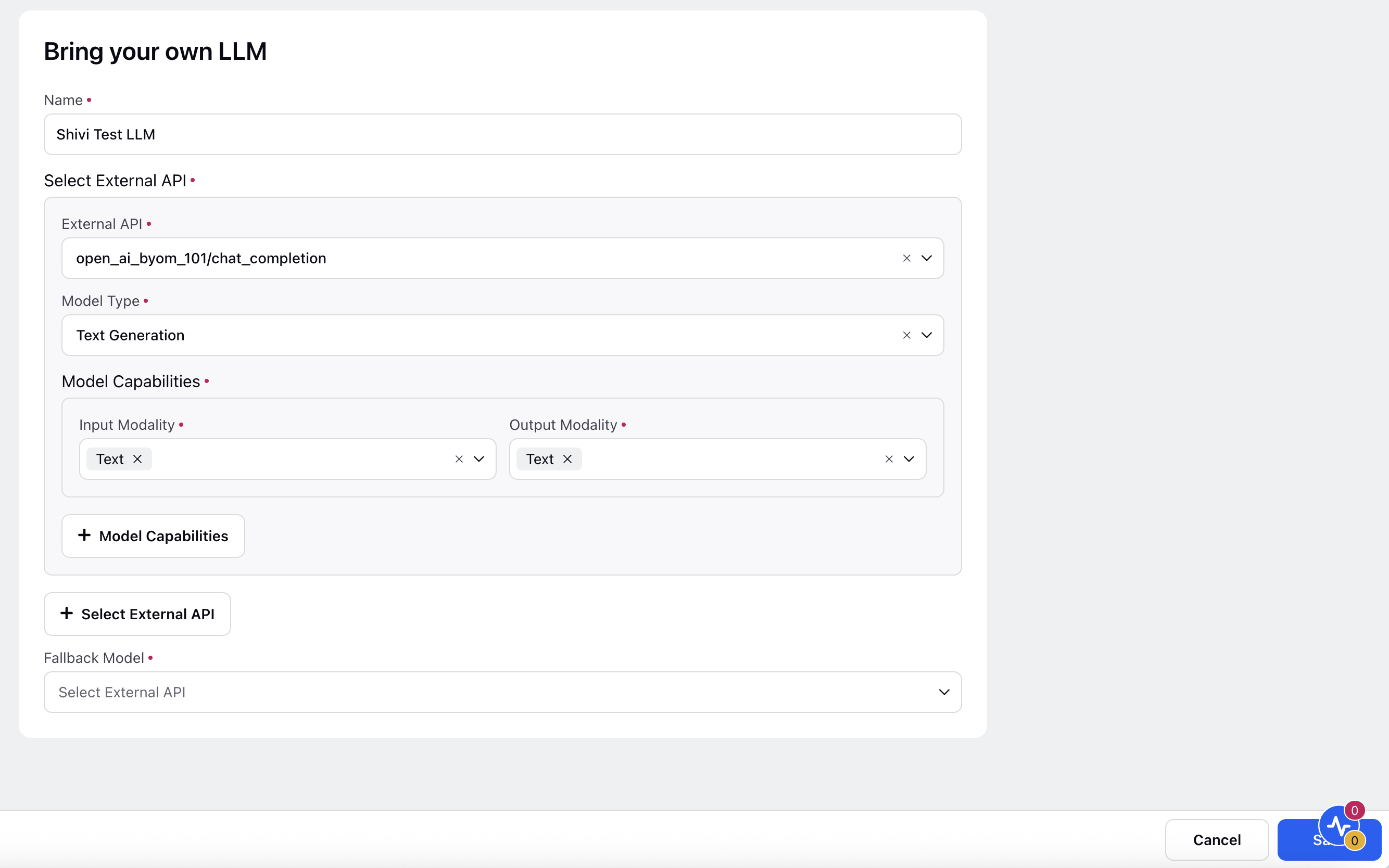

In the Bring Your Own LLM form, enter the following details:

| Field | Description |
| --- | --- |
| Name | A unique identifier for your LLM. |
| Select External API | Choose from previously configured external APIs. These must first be added in the External API module. Click the '+ Select External API' button to add additional APIs to the same integration. |
| Model Type | Select the type of task the LLM supports. |
| Model Capabilities | Define how the model handles inputs and outputs. Click the '+ Model Capabilities' button to define multiple model capabilities for the integration. |
| Fallback Model | Select a fallback API to use if the primary model fails. |

Note: Ensure your external APIs are set up in advance. See Adding External API in Sprinklr.
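To make the form fields above more concrete, here is a minimal Python sketch of how the same settings could be modeled and checked before you enter them. It is illustrative only and does not use any Sprinklr API: the `ByomConfig` class, the field names, the example values, and the choice of which fields are treated as required are all assumptions. The `call_with_fallback` helper shows the general idea behind a fallback model (try the primary, then fall back if it fails), not how AI+ Studio implements it.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional


@dataclass
class ByomConfig:
    """Hypothetical mirror of the Bring Your Own LLM form (not a Sprinklr API)."""
    name: str
    external_apis: list[str]
    model_type: str
    model_capabilities: list[str] = field(default_factory=list)
    fallback_model: Optional[str] = None

    def validate(self) -> list[str]:
        """Return a list of problems; an empty list means nothing is missing.

        Treating Name and at least one external API as required is an
        assumption made for this sketch.
        """
        problems = []
        if not self.name.strip():
            problems.append("Name is required")
        if not self.external_apis:
            problems.append("Select at least one external API")
        return problems


def call_with_fallback(primary: Callable[[str], str],
                       fallback: Optional[Callable[[str], str]],
                       prompt: str) -> str:
    """Call the primary model; if it raises, use the fallback when one is set."""
    try:
        return primary(prompt)
    except Exception:
        if fallback is None:
            raise  # no fallback configured, so surface the original error
        return fallback(prompt)
```

For example, `ByomConfig(name="my-llm", external_apis=["api-1"], model_type="text-generation").validate()` returns an empty list, while a configuration with a blank name and no external APIs returns one message per missing field. The values `"my-llm"`, `"api-1"`, and `"text-generation"` are placeholders, not options taken from the product.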

Step 3: Save and Deploy

Once configured, click Save.

The LLM will be available under Deploy your Use-Cases.

You can assign this model to specific use-cases, agents, or copilots.

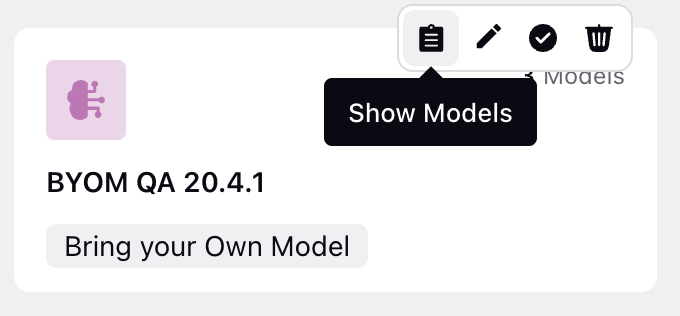

Viewing and Managing Models

Navigate to the Providers page.

Hover over a BYOM card and click the Show Models button to see all models linked to the APIs you integrated.

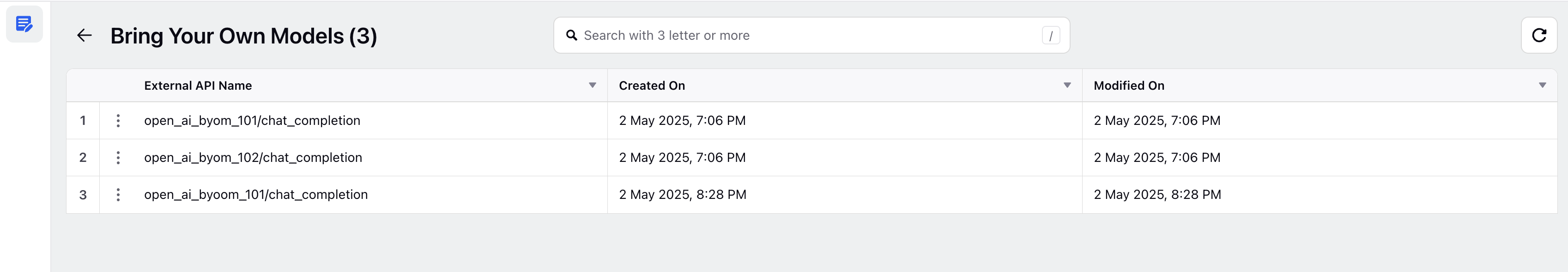

You will be redirected to the BYOM API record manager.