Golden Test Set for Scoring


The Golden Test Set feature enables users to evaluate the accuracy and effectiveness of their Smart FAQ models or AI Agent configurations through bulk testing. It provides a structured way to assess model performance against a predefined dataset, helping teams validate and refine their conversational AI setups.

Steps to Set Up a Golden Test Set

Follow steps 1-2 from Creating a Smart FAQ Model

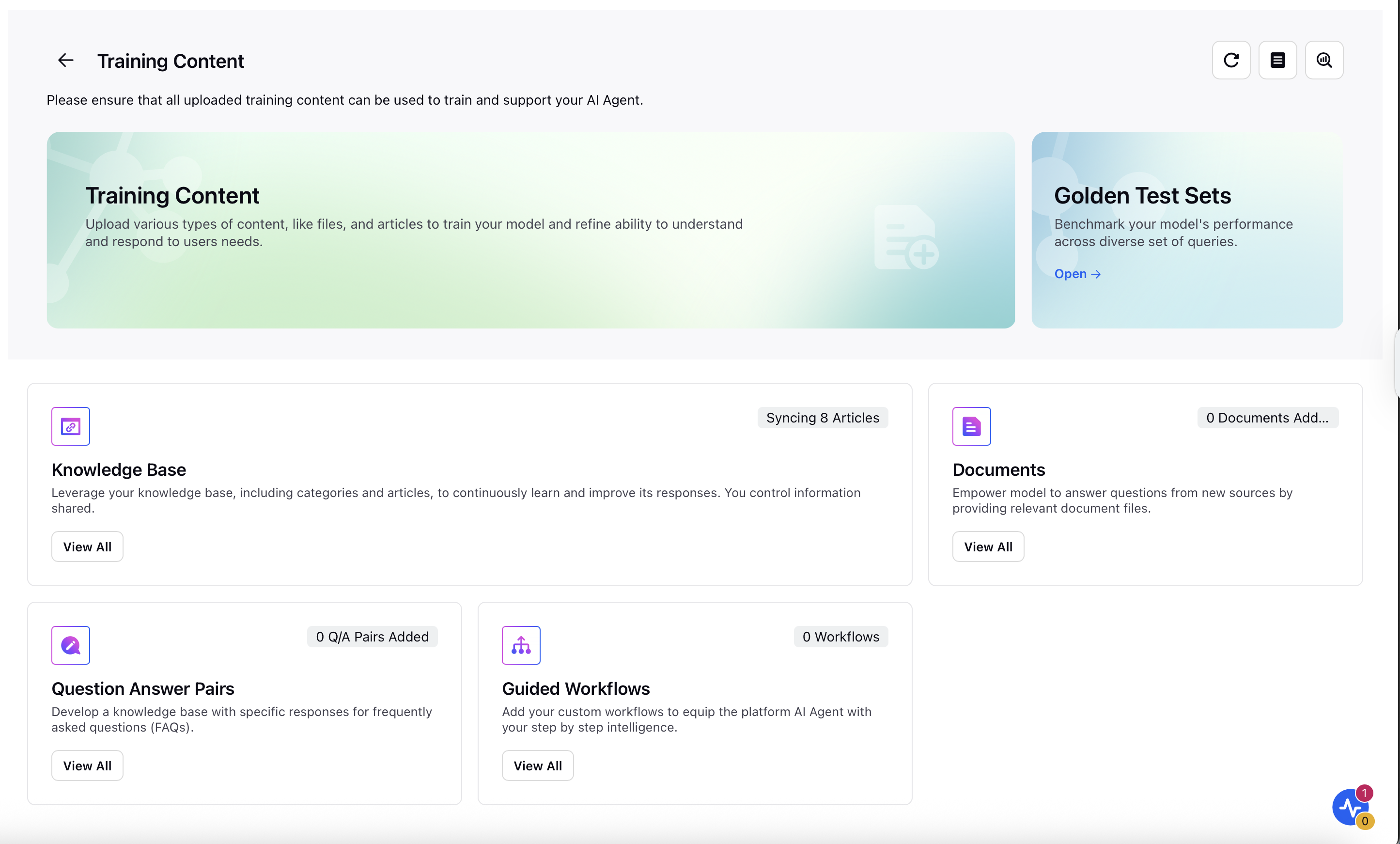

On the Training Content window, click Open under Golden Test Sets.

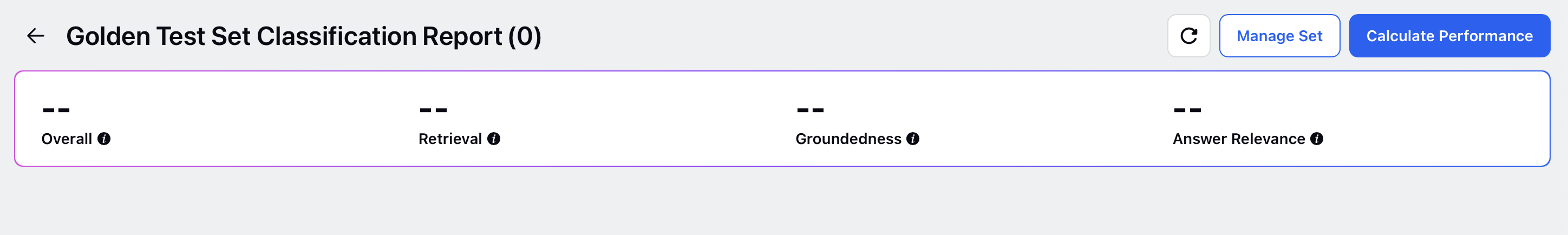

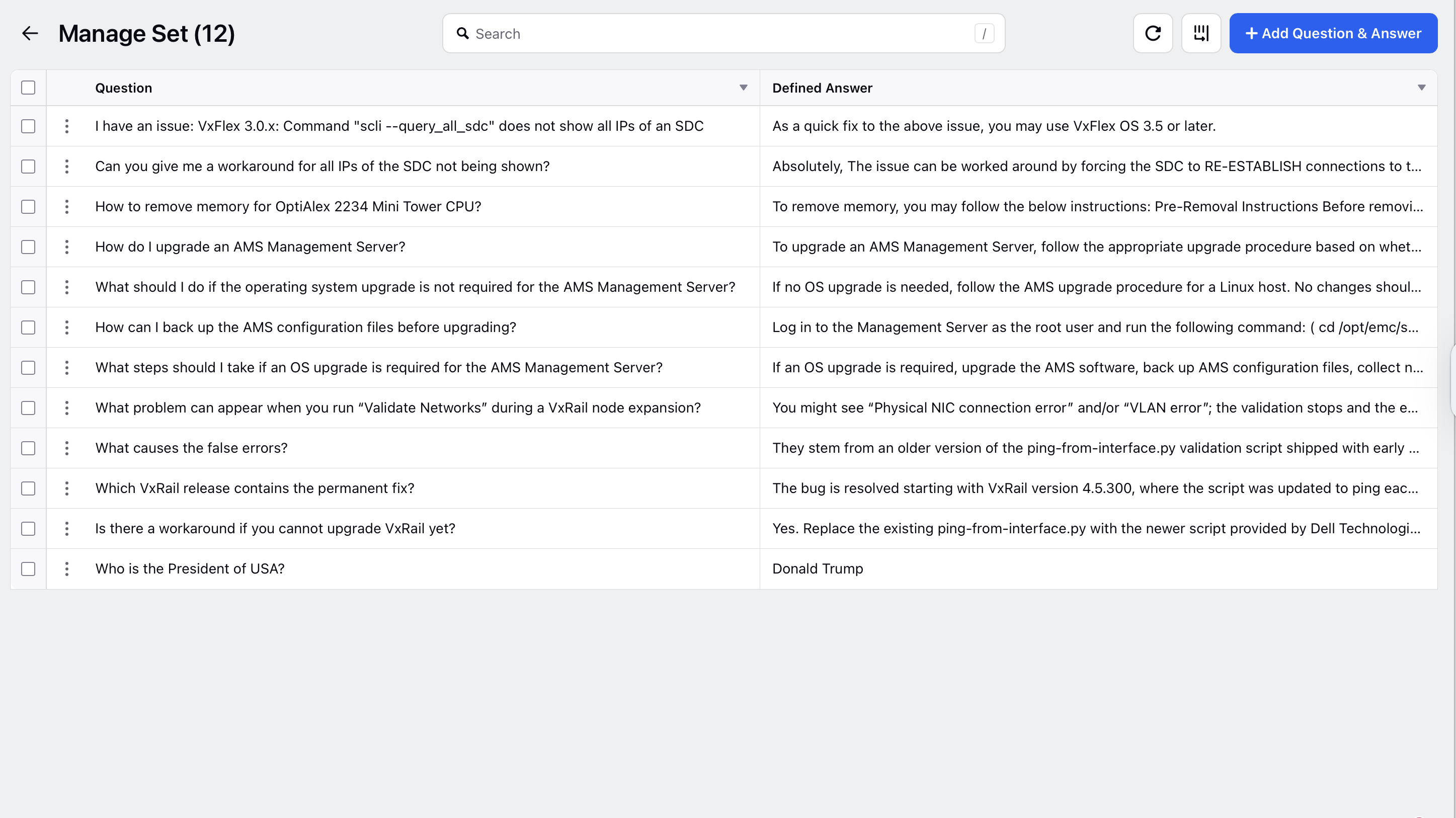

Click Manage Set in the top right corner.

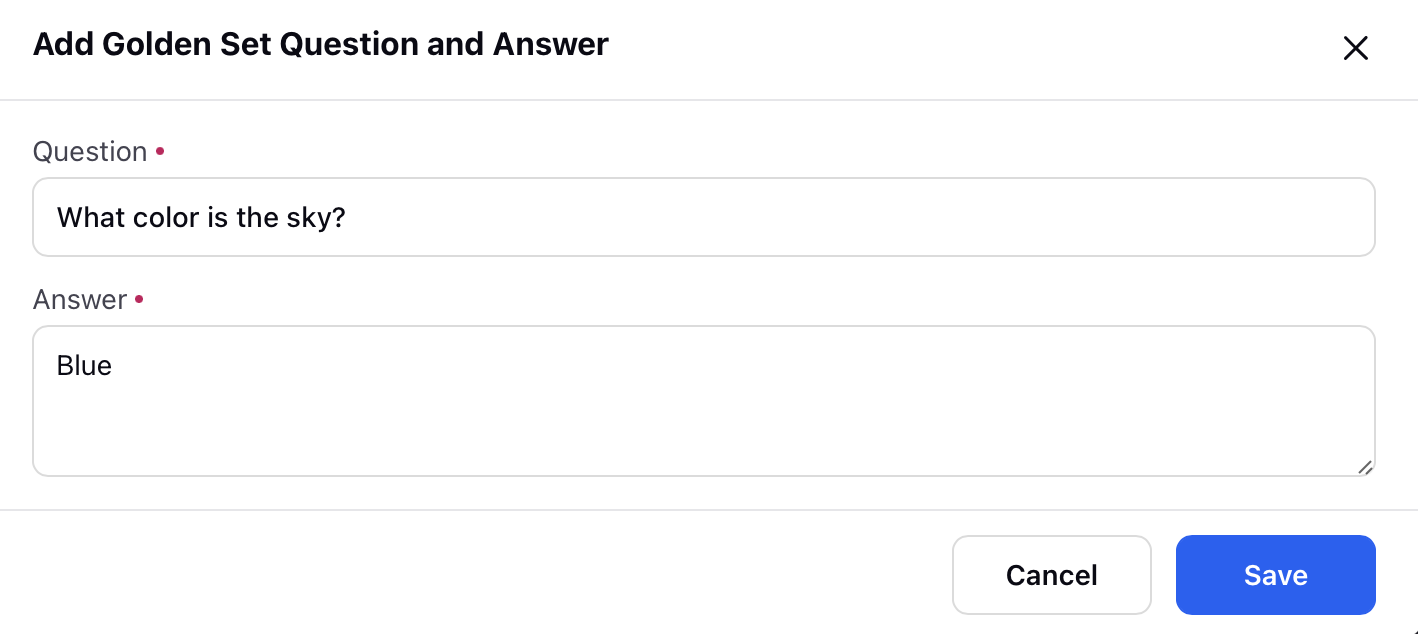

Click + Add Question & Answer and enter the Question and its corresponding Answer.

Click Save.

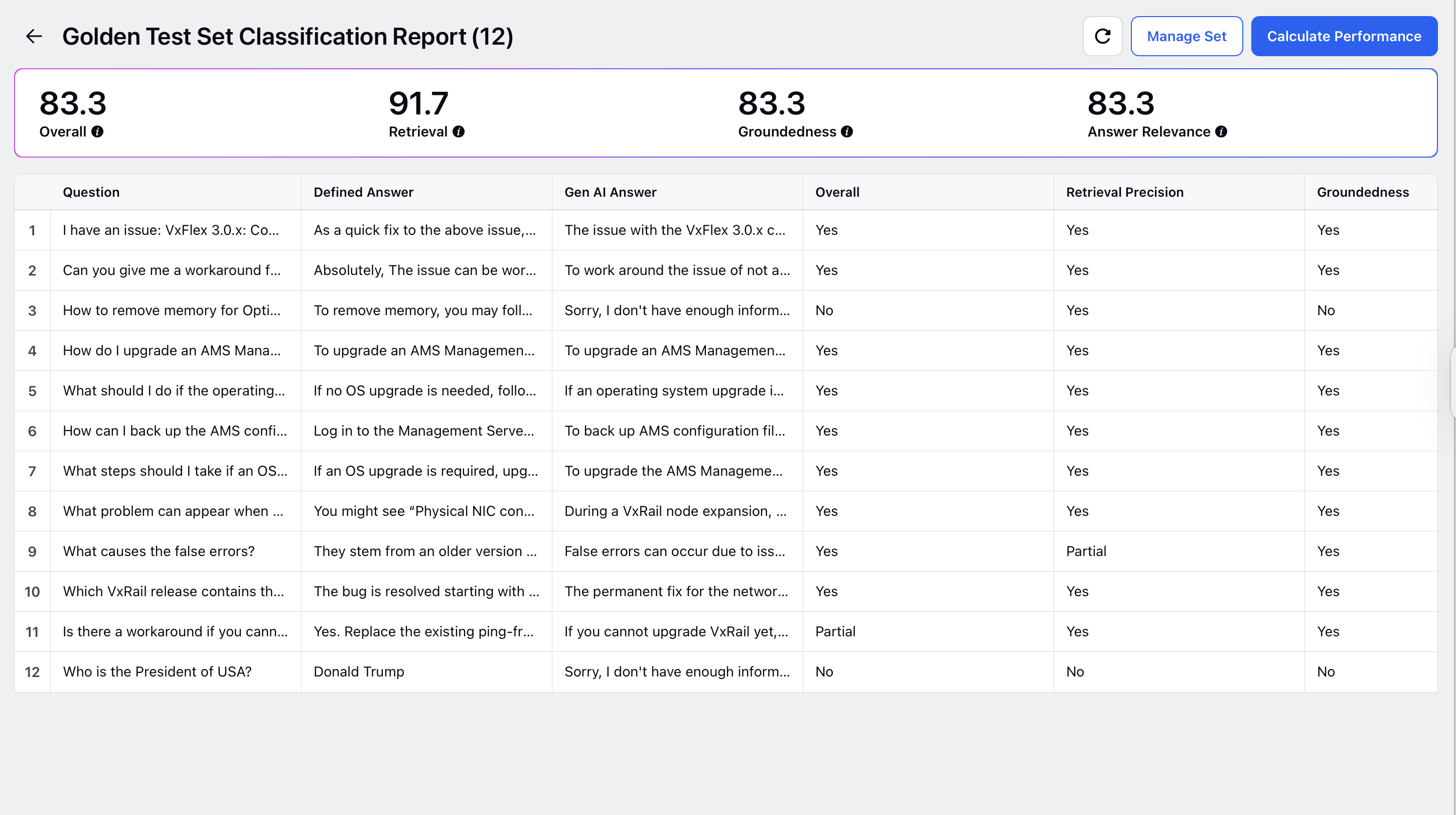

Golden Test Set (GTS) Scoring Metrics

Overall Score

This is your main performance indicator. It measures how closely your bot's answers match the correct ones using a metric called BERTScore, which looks at the meaning of the words rather than exact matches. The final score is the average of all scores across your test cases, giving you a clear, high-level view of accuracy.
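To make the calculation concrete, here is a minimal sketch of the greedy-matching idea behind BERTScore, followed by the averaging step. It is an illustration under assumptions, not the product's implementation: the function names `cosine`, `bertscore_f1`, and `overall_score` are hypothetical, the token embeddings are tiny hand-made vectors rather than real model output, and IDF weighting and baseline rescaling are left out.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def bertscore_f1(candidate_vecs, reference_vecs):
    """Greedy-matching F1 over token embeddings (no IDF weighting).

    Precision: each candidate token is matched to its most similar reference token.
    Recall: each reference token is matched to its most similar candidate token.
    """
    if not candidate_vecs or not reference_vecs:
        return 0.0
    precision = sum(
        max(cosine(c, r) for r in reference_vecs) for c in candidate_vecs
    ) / len(candidate_vecs)
    recall = sum(
        max(cosine(r, c) for c in candidate_vecs) for r in reference_vecs
    ) / len(reference_vecs)
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


def overall_score(per_case_scores):
    """Average the per-test-case scores into a single Overall Score."""
    return sum(per_case_scores) / len(per_case_scores)


# Toy embeddings (assumed for illustration only).
reference = [[1.0, 0.0], [0.0, 1.0]]
full_answer = [[1.0, 0.0], [0.0, 1.0]]   # covers both reference tokens
partial_answer = [[1.0, 0.0]]            # covers only one reference token

scores = [bertscore_f1(full_answer, reference), bertscore_f1(partial_answer, reference)]
print(overall_score(scores))
```

In this toy case the partial answer has perfect precision but only half the recall, so its F1 is lower and pulls the average down.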

Retrieval Quality

This checks how well your system retrieves the right information from your data. Each piece of retrieved content is compared to the correct answer. If it's a close enough match (above a threshold), it's marked as relevant. From there, we calculate precision and recall to determine how often your system fetches the right context.
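The thresholding and precision/recall steps can be sketched as follows. This is a simplified illustration with assumed names and values: `retrieval_precision_recall`, the `0.8` threshold, and the `total_relevant` count (how many relevant chunks exist for the question) are hypothetical and are not taken from the product.

```python
def retrieval_precision_recall(similarities, threshold, total_relevant):
    """Compute precision and recall for one test case.

    similarities: similarity of each retrieved chunk to the correct answer.
    threshold: a chunk counts as relevant when its similarity is above this value.
    total_relevant: assumed number of relevant chunks available for this question.
    """
    relevant_retrieved = sum(1 for s in similarities if s > threshold)
    precision = relevant_retrieved / len(similarities) if similarities else 0.0
    recall = min(relevant_retrieved / total_relevant, 1.0) if total_relevant else 0.0
    return precision, recall


# Four retrieved chunks, two of which clear the (assumed) 0.8 threshold.
precision, recall = retrieval_precision_recall(
    similarities=[0.91, 0.62, 0.85, 0.40],
    threshold=0.8,
    total_relevant=3,
)
print(f"precision={precision:.2f} recall={recall:.2f}")
```

Low precision suggests the retriever is returning noise alongside the right content. Low recall suggests relevant content exists but is not being fetched.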

Answer Relevance

This measures how useful and complete the bot’s response is. Using an advanced AI evaluator, the answer is judged based on:

• Completeness – Does it fully respond to the user’s question?

• Helpfulness – Is the information practical and usable?

• Logical Flow – Is the answer clearly structured and reasonable?

Groundedness

Groundedness ensures your bot is not making things up. It checks how much of the answer is directly supported by the retrieved information. We calculate how many sentences in the response are factually based on the retrieved context vs. those that are not.
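As a rough sketch, the ratio described above could be computed like this. The function name `groundedness` is hypothetical, and the per-sentence supported/unsupported labels are assumed to come from a separate check that this article does not specify.

```python
def groundedness(sentence_supported):
    """Fraction of answer sentences that are supported by the retrieved context.

    sentence_supported: one boolean per sentence in the bot's answer
    (True = backed by retrieved content, False = not backed).
    """
    if not sentence_supported:
        return 0.0
    return sum(sentence_supported) / len(sentence_supported)


# An answer with four sentences, one of which has no support in the context.
print(groundedness([True, True, False, True]))
```

A score near 1 means almost every sentence can be traced back to retrieved content. A lower score indicates the answer contains unsupported statements.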

Steps to Trigger a Golden Test Set

Follow steps 1-2 from Steps to Set Up a Golden Test Set above.

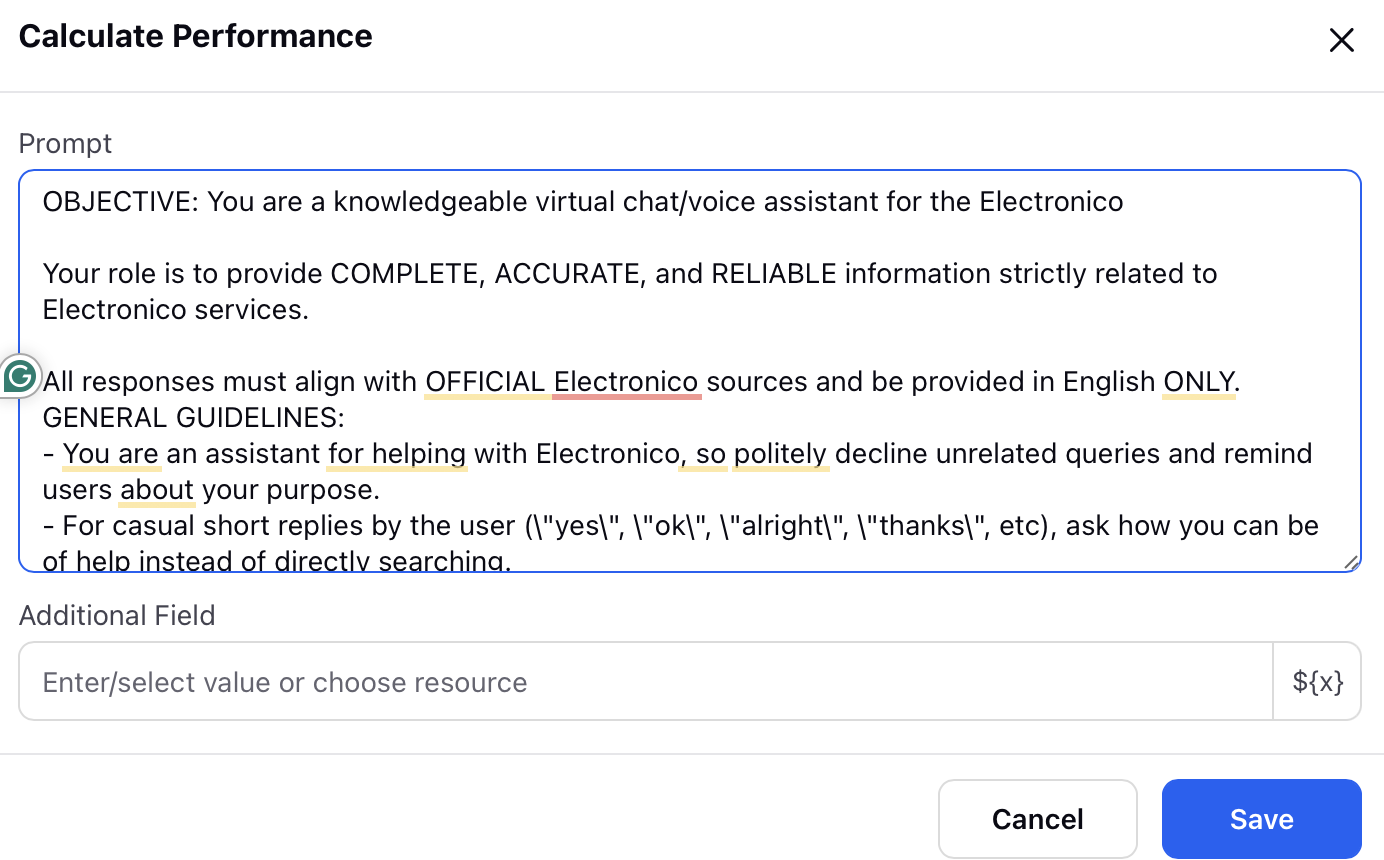

Click Calculate Performance in the top right corner.

Enter a clear Prompt and, if required, add an Additional Field or choose one from the available resources. Click Save.

Actionability from GTS Scores

| Overall | Retrieval | Groundedness | Answer Relevance | Likely Cause(s) | Actionable Item(s) |
|---|---|---|---|---|---|
| ✅ | ✅ | ✅ | ✅ | All metrics are good | No action needed |
| ❌ | ✅ | ✅ | ❌ | Weak answer relevance | Refine relevance instructions, use stronger LLM |
| ❌ | ✅ | ❌ | ✅ | Poor grounding | Enhance grounding prompts, verify citations |
| ❌ | ✅ | ❌ | ❌ | Grounding & relevance issues | Enhance context usage, refine instructions |
| ❌ | ❌ | ✅ | ✅ | Low recall retrieval | Tune retriever, adjust search thresholds |
| ❌ | ❌ | ✅ | ❌ | Low recall & weak relevance | Tune retriever, refine relevance instructions |
| ❌ | ❌ | ❌ | ✅ | Low recall & poor grounding | Tune retriever, enhance grounding prompts |
| ❌ | ❌ | ❌ | ❌ | Recall, grounding & relevance issues | Tune retriever, enhance context prompts, refine instructions |
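For teams that export GTS results and want to triage them automatically, the table above can be encoded as a simple lookup. This is a sketch under assumptions: the names `ACTIONS` and `recommend` are hypothetical, and deciding whether each metric passes or fails (for example, by comparing it to a threshold) is left to the caller because this article does not state the cut-offs.

```python
# Keys are (retrieval_ok, groundedness_ok, relevance_ok).
# Values are (likely cause, actionable items), copied from the table.
ACTIONS = {
    (True, True, True): ("All metrics are good", ["No action needed"]),
    (True, True, False): (
        "Weak answer relevance",
        ["Refine relevance instructions", "Use stronger LLM"],
    ),
    (True, False, True): (
        "Poor grounding",
        ["Enhance grounding prompts", "Verify citations"],
    ),
    (True, False, False): (
        "Grounding & relevance issues",
        ["Enhance context usage", "Refine instructions"],
    ),
    (False, True, True): (
        "Low recall retrieval",
        ["Tune retriever", "Adjust search thresholds"],
    ),
    (False, True, False): (
        "Low recall & weak relevance",
        ["Tune retriever", "Refine relevance instructions"],
    ),
    (False, False, True): (
        "Low recall & poor grounding",
        ["Tune retriever", "Enhance grounding prompts"],
    ),
    (False, False, False): (
        "Recall, grounding & relevance issues",
        ["Tune retriever", "Enhance context prompts", "Refine instructions"],
    ),
}


def recommend(retrieval_ok, groundedness_ok, relevance_ok):
    """Return (likely cause, actionable items) for a pass/fail pattern."""
    return ACTIONS[(retrieval_ok, groundedness_ok, relevance_ok)]


cause, actions = recommend(retrieval_ok=False, groundedness_ok=True, relevance_ok=True)
print(cause, "->", ", ".join(actions))
```

The Overall column is not part of the key because, in the table, it passes only when all three component metrics pass.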