Understanding Generative AI Responses: AI Agent


In this article, we deconstruct the Generative AI response to understand the final output, the sources that were fetched and referenced, and the metadata we receive from the LLM.

Process for Generating a Response by the LLM

1. The user submits a query.
2. Sprinklr rewords the query before performing the search.
3. Sprinklr searches the knowledge content to retrieve the “context”.
4. Sprinklr passes the user’s query, the context, and a prompt containing instructions to the LLM.
5. The LLM generates the response and indicates which sources it used.
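To make the flow above concrete, here is a toy Python sketch of the same five steps. Everything in it is hypothetical. The function names (`reword_query`, `search_knowledge`, `build_prompt`, `fake_llm`) are illustrative stand-ins, as are the keyword-overlap retrieval and the stubbed LLM call. None of this is Sprinklr's implementation.

```python
import re
from dataclasses import dataclass


@dataclass
class Chunk:
    source_id: str  # hypothetical ID used for citations
    text: str


def _tokens(text: str) -> set:
    # Lowercase alphanumeric words, ignoring punctuation.
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def reword_query(query: str) -> str:
    # Hypothetical stand-in for the rewording step: a real system might ask
    # an LLM to rewrite the query; this sketch only normalizes case and spaces.
    return " ".join(query.lower().split())


def search_knowledge(query: str, knowledge: list, top_k: int = 2) -> list:
    # Toy retrieval: rank chunks by how many query words they share.
    words = _tokens(query)
    ranked = sorted(knowledge, key=lambda c: len(words & _tokens(c.text)), reverse=True)
    return ranked[:top_k]


def build_prompt(query: str, context: list) -> str:
    # Instructions + retrieved context (tagged with source IDs) + the question.
    context_text = "\n".join(f"[{c.source_id}] {c.text}" for c in context)
    return (
        "Answer using only the context below and cite the source IDs you use.\n"
        f"Context:\n{context_text}\n"
        f"Question: {query}"
    )


def fake_llm(prompt: str, context: list) -> dict:
    # Stub for the LLM call (ignores the prompt): returns an answer plus the
    # sources it "used", mirroring the last step of the flow.
    used = [context[0].source_id] if context else []
    return {"text": "(generated answer)", "sources_used": used}


knowledge = [
    Chunk("kb-1", "Reset your password from the account settings page."),
    Chunk("kb-2", "Refunds are processed within five business days."),
]
rewritten = reword_query("How do I reset my  Password?")
context = search_knowledge(rewritten, knowledge)
result = fake_llm(build_prompt(rewritten, context), context)
print(result)
```

In this sketch, each chunk is tagged with a source ID inside the prompt. That tagging is what lets the model report which sources it relied on.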

Access the Response

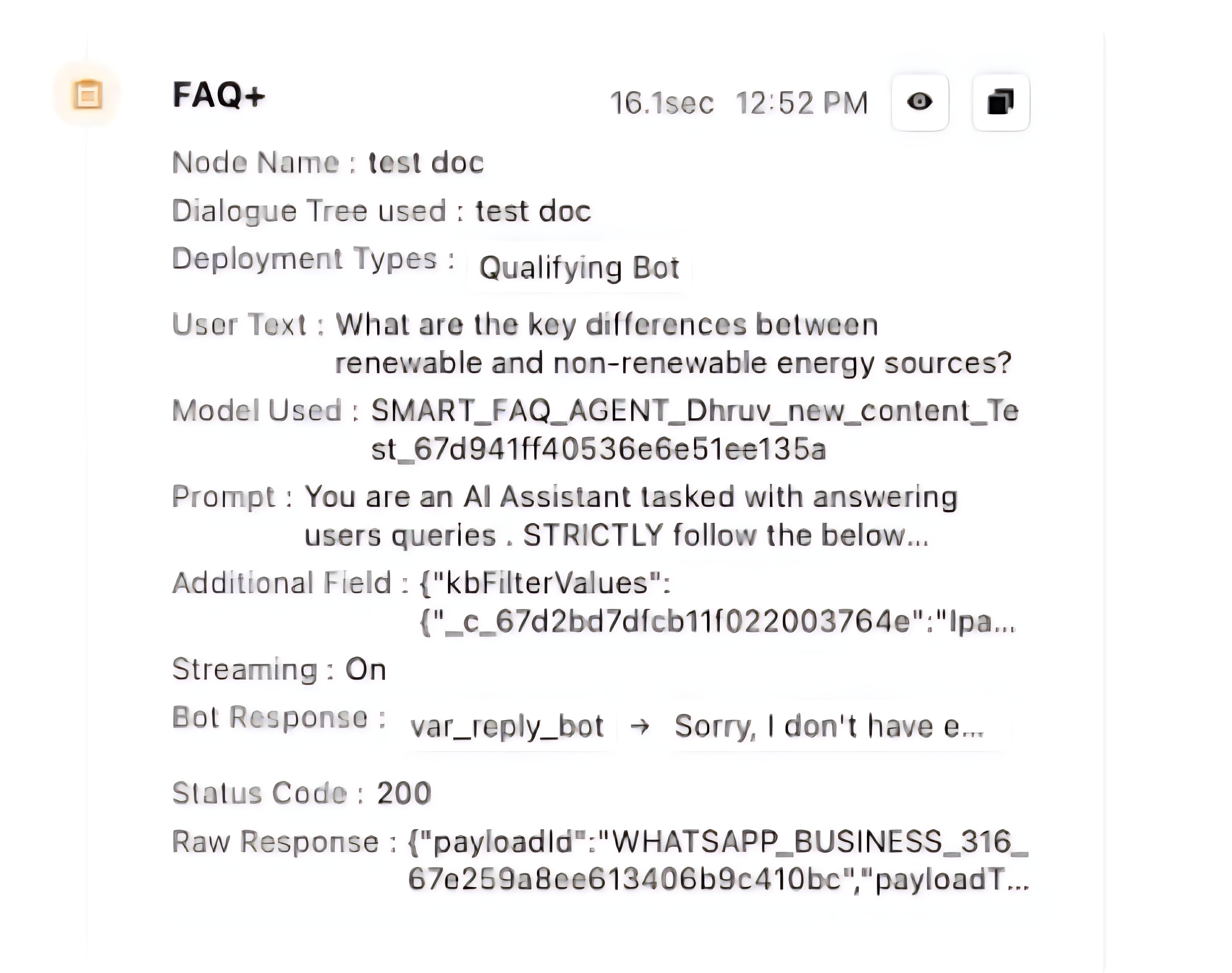

Navigate to the “Conversations” tab, and select the case that contains the message for which you want to view the API response.

In the debug log, navigate to the Smart FAQ node.

The Raw Response field contains the complete API response.
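The Raw Response is JSON, so you can copy it out of the debug log and inspect it programmatically. The minimal Python sketch below parses it with the standard `json` module and pretty-prints the first suggestion. The sample payload is invented and only mirrors the nesting implied by the paths in this article. The `text` key is an assumption based on the description of the Response field.

```python
import json

# Paste the Raw Response text here. This small sample is hypothetical: it only
# mirrors the nesting implied by the documented paths, not real output.
raw_response = """
{
  "results": [
    {
      "details": {
        "suggestions": [
          {"text": "You can reset your password from Settings.",
           "addtional": {"sources": [], "rawResponse": ""}}
        ],
        "placeholders": []
      }
    }
  ]
}
"""

data = json.loads(raw_response)

# {Root}.results[0].details.suggestions[0] holds the generated response.
suggestion = data["results"][0]["details"]["suggestions"][0]
print(json.dumps(suggestion, indent=2))
```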

How to Read the Response

Response

Field contains the “text”, that is, the actual response the LLM generated for the user’s question.

`{Root}.results[0].details.suggestions[0]`

Conversation

Field contains the past conversation history that was passed to the LLM as context to help it answer the question.

`{Root}.results[0].details.suggestions[0].addtional.conv`

Logs

Field contains the processing metadata.

`{Root}.results[0].details.suggestions[0].addtional.logs`

Sources

Field contains the sources that were fetched and sent to the LLM, on which the LLM based its response. Note that the final response is formed from a subset of these sources.

`{Root}.results[0].details.suggestions[0].addtional.sources`

rawResponse

Field contains the response generated by the LLM, along with its citations.

These citations identify the exact sources (among those listed in the Sources field) from which the response was derived.

`{Root}.results[0].details.suggestions[0].addtional.rawResponse`

Processing Breakdown

Field contains a breakdown of the latency contributed by each component in the pipeline, along with the Time to First Token (TTFT) and Time to Last Token (TTLT).

`{Root}.results[0].details.suggestions[0].addtional.processing_breakdown`

Context

Field contains the context, that is, the exact chunks fetched from the knowledge content. This is the data that was actually passed to the LLM to help it generate the response.

`{Root}.results[0].details.placeholders[1]`

Reworded Question

Field contains the reworded question that was used to search the provided knowledge content.

`{Root}.results[0].details.placeholders[4]`
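Every field above is addressed by a `{Root}...` path, so those paths can double as a lookup table. The Python sketch below walks each path through a parsed Raw Response and returns `None` for anything missing. Some caveats apply:

- The names `FIELD_PATHS`, `resolve`, and `summarize` are hypothetical.
- The `addtional` key is spelled exactly as it appears in this article.
- The shape of each value is not specified here. For example, a placeholder may be a string or an object. The sample payload is therefore invented.

```python
import re
from typing import Any

# Paths as listed in this article; "{Root}" is the top level of the Raw Response.
FIELD_PATHS = {
    "response": "{Root}.results[0].details.suggestions[0]",
    "conversation": "{Root}.results[0].details.suggestions[0].addtional.conv",
    "logs": "{Root}.results[0].details.suggestions[0].addtional.logs",
    "sources": "{Root}.results[0].details.suggestions[0].addtional.sources",
    "raw_response": "{Root}.results[0].details.suggestions[0].addtional.rawResponse",
    "processing_breakdown": "{Root}.results[0].details.suggestions[0].addtional.processing_breakdown",
    "context": "{Root}.results[0].details.placeholders[1]",
    "reworded_question": "{Root}.results[0].details.placeholders[4]",
}

# Matches either ".key" or "[index]" segments of a path.
_TOKEN = re.compile(r"\.([A-Za-z_]\w*)|\[(\d+)\]")


def resolve(root: Any, path: str) -> Any:
    """Follow a '{Root}.a[0].b' style path; return None if any step is missing."""
    if not path.startswith("{Root}"):
        raise ValueError(f"path must start with {{Root}}: {path}")
    node = root
    for key, index in _TOKEN.findall(path[len("{Root}"):]):
        try:
            node = node[int(index)] if index else node[key]
        except (KeyError, IndexError, TypeError):
            return None
    return node


def summarize(root: Any) -> dict:
    # Resolve every documented field at once.
    return {name: resolve(root, path) for name, path in FIELD_PATHS.items()}


# Hypothetical payload; real responses carry more data in each field.
sample = {
    "results": [{
        "details": {
            "suggestions": [{"text": "Hi", "addtional": {"sources": ["kb-1"]}}],
            "placeholders": ["p0", "chunk text", "p2", "p3", "how do i reset my password"],
        }
    }]
}
summary = summarize(sample)
for name, value in summary.items():
    print(f"{name}: {value!r}")
```

`resolve` returns `None` instead of raising an error. This keeps the summary usable when a given response omits some of these fields.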