Admin Panel: Advanced Controls

This article describes some of the advanced controls in the Admin Panel, such as Timeout Settings and Voice Bot Settings, as well as some of the advanced features of Sprinklr AI Agent.

Timeout Settings

Fill in the required fields on the page. Fields marked with a red dot are mandatory. Below are the descriptions of the fields on this page:

Enable Timeout Settings: Enable this switch to apply timeout settings to the AI Agent. It is enabled by default.

How long do you want to wait for a user reply?: Specify how long (in seconds, minutes, hours, or longer) the AI Agent should wait for a response from the user before timing out.

What reply should be sent after the timeout?:

Enable Conditions: Enable this switch to configure the conditions under which the response is sent to the user. Select the conditions from the options available in the list.

Write Script to Publish Dynamic Asset:

Text Reply: Enter the text response to send to the user after the timeout.

Choose Resource: Select any additional resource to embed in the response sent to the user after the timeout.

Add Text Reply: Click this button to configure additional text replies with customized conditions.
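To show how the timeout fields could work together, the sketch below models them as a small decision function. It is hypothetical. The names `TimeoutSettings`, `TextReply` and `timeout_reply` are assumptions made for illustration, as is the rule that the first reply whose condition matches is sent. None of this is Sprinklr's implementation or API.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional

# Hypothetical model of the Timeout Settings fields (not a Sprinklr API).
@dataclass
class TextReply:
    text: str
    # Condition evaluated against the conversation context; None means "always".
    condition: Optional[Callable[[dict], bool]] = None

@dataclass
class TimeoutSettings:
    wait_seconds: float                # "How long do you want to wait for a user reply?"
    enabled: bool = True               # "Enable Timeout Settings" (on by default)
    enable_conditions: bool = False    # "Enable Conditions"
    replies: list[TextReply] = field(default_factory=list)

def timeout_reply(settings: TimeoutSettings, seconds_waited: float, context: dict) -> Optional[str]:
    """Return the reply to send on timeout, or None if nothing should be sent."""
    if not settings.enabled or seconds_waited < settings.wait_seconds:
        return None
    for reply in settings.replies:
        # Assumption: the first reply whose condition holds is sent; with
        # conditions disabled, the first configured reply is used.
        if not settings.enable_conditions or reply.condition is None or reply.condition(context):
            return reply.text
    return None

settings = TimeoutSettings(
    wait_seconds=120,
    enable_conditions=True,
    replies=[
        TextReply("Are you still there? Reply to continue.",
                  condition=lambda ctx: ctx.get("channel") == "chat"),
        TextReply("We haven't heard from you, so we're closing this conversation."),
    ],
)
print(timeout_reply(settings, 60, {"channel": "chat"}))    # None (still waiting)
print(timeout_reply(settings, 150, {"channel": "chat"}))   # first reply
print(timeout_reply(settings, 150, {"channel": "email"}))  # fallback reply
```

In this sketch, the last reply has no condition, so it acts as a catch-all. Configuring a similar unconditional reply after your conditional ones ensures that a user who times out always receives some response.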

Voice Bot Settings

For Voice Bot applications, configure the following:

Global Repeat Intent: Add an intent that, when detected, prompts the Sprinklr AI Agent to repeat its previous message.

TTS Fallback IVR: Choose a workflow to trigger if the text-to-speech (TTS) engine encounters downtime or failure.

Speech Profile: Select the desired speech profile for your voice bot.

Barge-in Functionality: Control whether users can interrupt a voice prompt with their response. You can also specify:

The minimum percentage of the Sprinklr AI Agent's reply that must be played before user input is accepted.

The minimum duration of the Sprinklr AI Agent's reply that must be played before user input is accepted.

In all cases, the system applies whichever of these thresholds is higher.

Note: If a local barge-in setting is configured for a Sprinklr AI Agent reply node, it overrides the global barge-in settings.

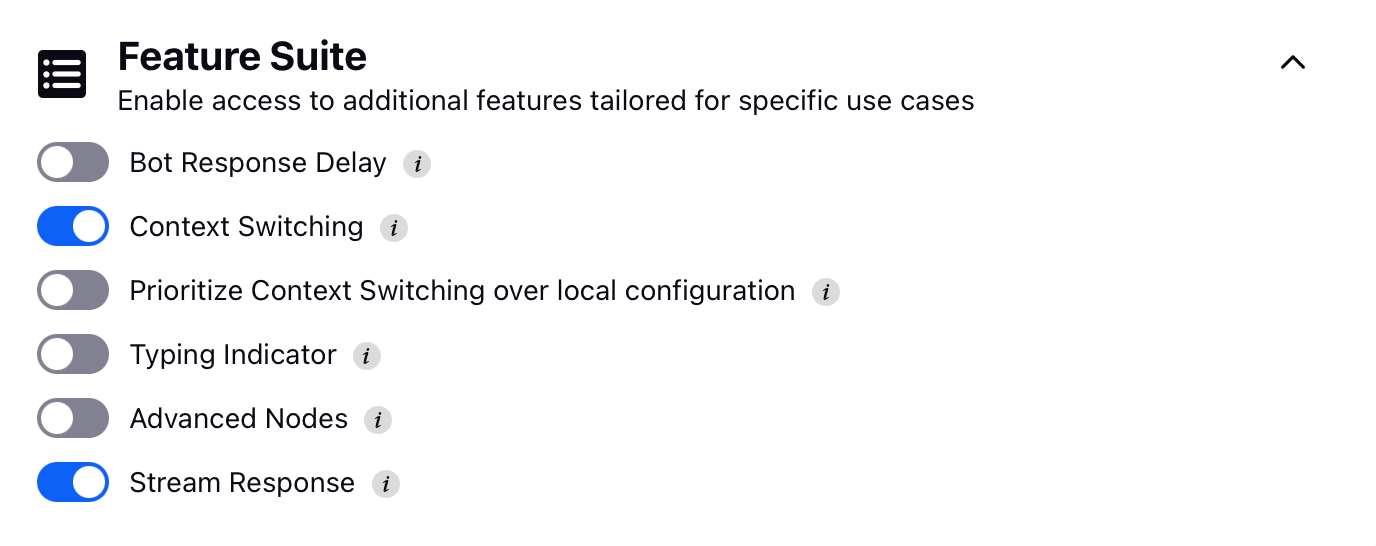
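The barge-in rules can be expressed as a short calculation. The sketch below rests on one assumption: "whichever threshold is higher" means the threshold reached later in the prompt, so user input is accepted only once both the percentage and the duration conditions are satisfied. It also assumes that a disabled barge-in means the user can never interrupt. The names `BargeIn`, `effective_barge_in`, `barge_in_threshold_s` and `can_barge_in` are hypothetical, not Sprinklr APIs.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical representation of barge-in settings (not a Sprinklr API).
@dataclass
class BargeIn:
    enabled: bool
    min_completion_pct: float = 0.0  # percent of the reply that must play first
    min_duration_s: float = 0.0      # seconds of the reply that must play first

def effective_barge_in(global_cfg: BargeIn, local_cfg: Optional[BargeIn]) -> BargeIn:
    # A node-level (local) setting, when present, overrides the global one.
    return local_cfg if local_cfg is not None else global_cfg

def barge_in_threshold_s(cfg: BargeIn, prompt_length_s: float) -> Optional[float]:
    """Seconds into the prompt after which user speech is accepted (None = never)."""
    if not cfg.enabled:
        return None
    pct_threshold_s = prompt_length_s * cfg.min_completion_pct / 100.0
    # Assumption: the higher (later) of the two thresholds applies.
    return max(pct_threshold_s, cfg.min_duration_s)

def can_barge_in(cfg: BargeIn, prompt_length_s: float, elapsed_s: float) -> bool:
    threshold = barge_in_threshold_s(cfg, prompt_length_s)
    return threshold is not None and elapsed_s >= threshold

global_cfg = BargeIn(enabled=True, min_completion_pct=50.0, min_duration_s=3.0)
print(barge_in_threshold_s(global_cfg, 10.0))  # 5.0 (50% of a 10 s prompt beats 3 s)
print(barge_in_threshold_s(global_cfg, 4.0))   # 3.0 (3 s beats 50% of a 4 s prompt)
node_cfg = effective_barge_in(global_cfg, BargeIn(enabled=False))
print(barge_in_threshold_s(node_cfg, 10.0))    # None (local setting disables barge-in)
```

The percentage threshold scales with the length of each prompt, while the duration threshold is fixed. As a result, the duration setting tends to dominate for short prompts and the percentage setting for long ones.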

Feature Suite

Use the toggles under Feature Suite to enable additional features for specific use cases, including:

Sprinklr AI Agent Response Delay: Introduce a 1–5 second delay in all Sprinklr AI Agent responses across dialogue trees to create a more natural, human-like conversational flow.

Simplified Context Switching: Activate this feature to allow seamless transitions between different dialogue trees when a new issue type is detected. For more details, refer to Simplified Context Switching.

Prioritize Context Switching Over Local Configuration: When enabled, this feature prioritizes context switching over local fallback settings within any dialogue tree. The recommended setting is OFF.

Typing Indicator: Displays a typing indicator to signal that the Sprinklr AI Agent is generating a response.

Advanced Nodes: Grants access to advanced nodes, enabling additional functionalities tailored to complex or specialized use cases.

Stream Response: When enabled, responses from the LLM are streamed to the user in real time. This feature is currently supported only in live chat and applies to LLM-powered features in Sprinklr AI Agent, such as Dynamic Workflows and Smart FAQ.

Note: For Smart FAQ, if streaming is enabled:

The response is published in real time as it is generated (token by token, as in ChatGPT), bypassing output guardrails and post-processing for generative AI responses.

You do not need to configure the Sprinklr AI Agent Response variable, because the node publishes the response itself.
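One way to see why streaming bypasses output guardrails is to compare buffered and streamed publishing side by side. The sketch below is a toy illustration only. `fake_llm_tokens`, `redact_digits`, `publish_buffered` and `publish_streaming` are hypothetical stand-ins, not Sprinklr APIs, and the digit-masking "guardrail" is an invented example. In the buffered case, a check can inspect the full response before anything is sent. In the streamed case, each token reaches the user as soon as it is generated, so no check on the complete response can run first.

```python
from typing import Callable, Iterable, Iterator

# Hypothetical stand-ins for illustration; none of these are Sprinklr APIs.
def fake_llm_tokens() -> Iterator[str]:
    # Simulates an LLM emitting a response token by token.
    yield from ["Your ", "order ", "ships ", "in ", "2 ", "days."]

def redact_digits(text: str) -> str:
    # Toy output guardrail: masks every digit in the final response.
    return "".join("#" if ch.isdigit() else ch for ch in text)

def publish_buffered(tokens: Iterable[str], guardrail: Callable[[str], str],
                     send: Callable[[str], None]) -> None:
    """Non-streaming: collect the full text, post-process it, then send once."""
    send(guardrail("".join(tokens)))

def publish_streaming(tokens: Iterable[str], send: Callable[[str], None]) -> None:
    """Streaming: send each token immediately; the complete text is never checked."""
    for token in tokens:
        send(token)

buffered: list[str] = []
publish_buffered(fake_llm_tokens(), redact_digits, buffered.append)
print(buffered)  # ['Your order ships in # days.']

streamed: list[str] = []
publish_streaming(fake_llm_tokens(), streamed.append)
print(streamed)  # ['Your ', 'order ', 'ships ', 'in ', '2 ', 'days.']
```

The trade-off is latency against control. Streaming shows the first words sooner, but any check that needs the complete response can no longer run before the user sees it.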