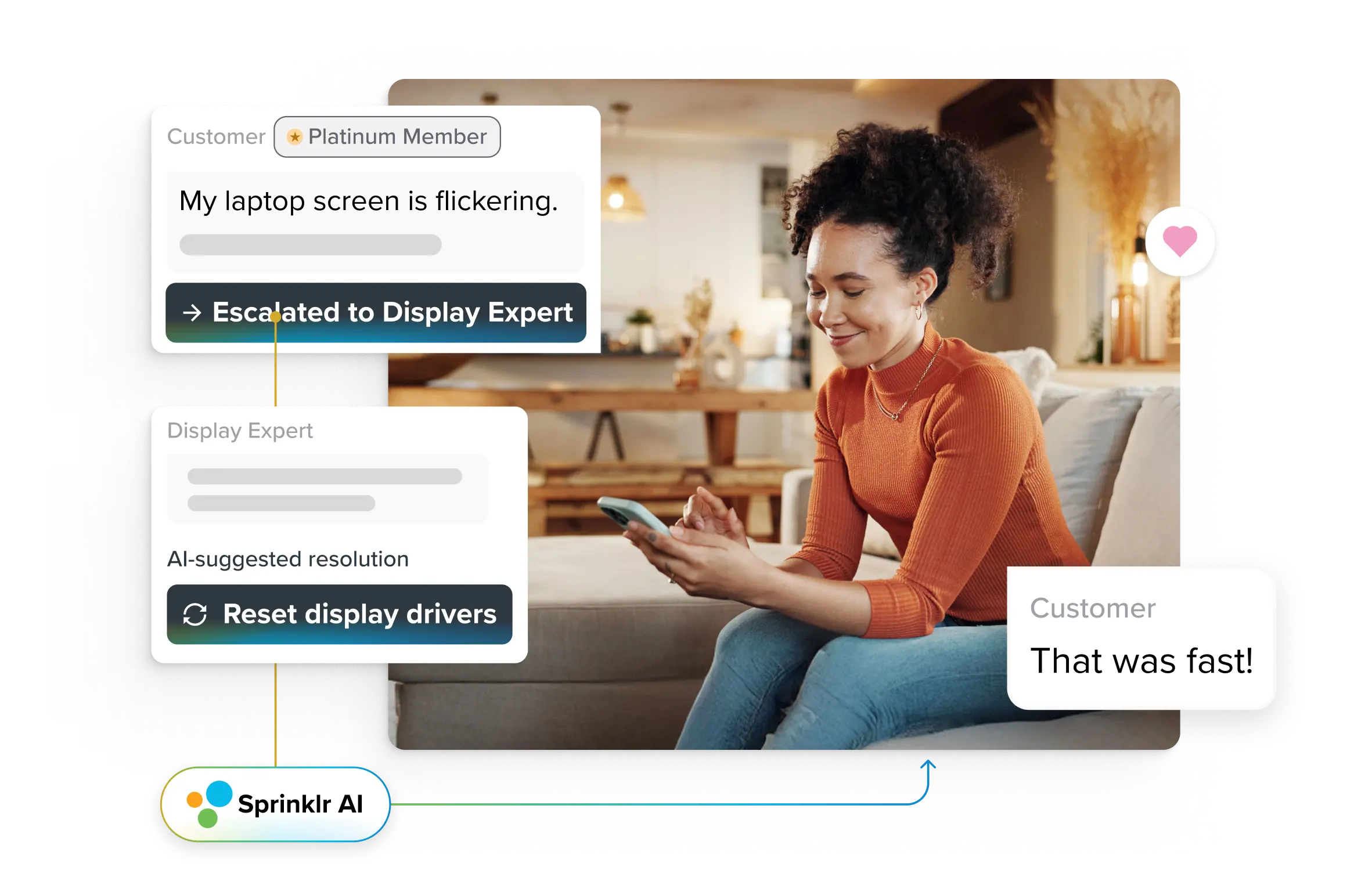

Transform CX with AI at the core of every interaction

Unify fragmented interactions across 30+ voice, social and digital channels with an AI-native customer experience platform. Deliver consistent, extraordinary brand experiences at scale.

Customer Service Challenges in the Age of AI

Companies adopting AI in customer service are learning that they must strike the right balance to avoid unexpected setbacks. AI has eased several long-standing issues, including slow resolution, limited agent productivity, and burnout. At the same time, it has introduced a new set of customer service challenges that must be managed carefully.

A Gartner survey found that CX leaders are eager to deploy AI, but customers still worry about accessing a human agent when they need one. This reflects a shift in expectations. Customer service did not become easier with AI. It simply evolved, and businesses now need stronger strategies to address the next stage of complexity.

In this article, you will learn about the typical customer service problems emerging in the AI era and how to tackle them with practical, enterprise-ready approaches.

What is making customer service harder than ever?

The fundamental challenge has evolved. Historically, service leadership focused on optimizing human-led processes for efficiency, measured by average handle time (AHT) and first contact resolution (FCR). Today, AI improves those metrics in theory, but in practice it introduces a new class of strategic vulnerabilities.

As Brad Fager, senior director analyst in the Gartner Customer Service and Support practice, notes, “AI and rapidly changing customer expectations are driving the evolution of the customer service function.” He adds that Agentic AI is moving customer service toward a more automated future, shifting traditional value models that relied on human-to-human interactions.

Three forces are driving this complexity:

1. Customers expect more because they already use AI every day

Customers are comfortable with AI assistants like Siri, Alexa, and generative AI tools. They expect support that’s not just fast, but context-aware and consistent across voice, chat, social, and in-app channels. When brands fail to meet that standard, customers see it as a failure to keep up with modern expectations and often shift to competitors.

2. Automation on the customer side creates new pressure

Following Brad Fager’s statements, Gartner notes another shift. As customers begin using third-party AI tools to automate their own interactions, service teams must adapt to support exchanges initiated by non-human systems, such as chatbots and AI agents.

This reduces opportunities to build emotional connections and lowers the quality of customer data, making it harder to understand real needs and improve experiences.

3. Empathy remains the most challenging part to automate

Speed matters, but empathy ultimately determines how customers perceive the brand. A Gartner poll of 163 customer service leaders in 2025 found that 95% plan to retain human agents to define AI’s role and anchor service in a digital-first but not digital-only model.

Leaders recognize that a fully agentless approach can damage trust when customers want assurance from a real person.

One notable example in early 2025: An AI support bot from the startup Anysphere, named Sam, incorrectly attributed a widespread login issue to a non-existent new policy. The hallucinated response led to confusion and subscription cancellations. The company’s co-founder acknowledged the error publicly, illustrating how customer service automation without safeguards can damage trust.

5 key customer service challenges in the AI era (and how to overcome them)

AI changes where pain points show up. If leaders treat automation as a silver bullet, they’ll compound existing service issues. But if they design CX strategy around specific failure modes, they can reduce risk and amplify impact.

1. AI accuracy and accountability failures

Inaccuracy, including hallucinations and incorrect answers, remains the most common risk experienced by organizations using AI. In its 2025 Global AI Survey, McKinsey reported that 51% of organizations using AI have experienced at least one negative consequence from its deployment.

When AI speaks with brand authority, its mistakes become a brand liability. A government chatbot in New York City once provided confidently wrong regulatory advice, highlighting the accountability gap that opens when automation operates without strong governance.

How to address it:

- Create a cross-functional AI governance board with legal, risk, product, and customer service leaders to vet high-impact automation.

- Deploy real-time output monitoring to detect spikes in escalations or conflicting answers.

- Require clear, human-readable explanations for automated actions in sensitive workflows.
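The monitoring step above can be sketched in a few lines. This is a minimal illustration, not a production design: the class name `EscalationSpikeMonitor`, the hourly interval, and the threshold values are all assumptions chosen for the example. It compares each interval's escalation rate with a rolling baseline and flags intervals that sit far above it.

```python
from collections import deque
from statistics import mean, pstdev


class EscalationSpikeMonitor:
    """Hypothetical monitor: flags intervals whose escalation rate is far above baseline."""

    def __init__(self, window: int = 24, z_threshold: float = 3.0, min_history: int = 6):
        self.history = deque(maxlen=window)  # recent non-spike escalation rates
        self.z_threshold = z_threshold       # how many deviations count as a spike
        self.min_history = min_history       # intervals needed before alerting

    def observe(self, escalations: int, conversations: int) -> bool:
        """Record one interval and return True if it looks like a spike."""
        rate = escalations / conversations if conversations else 0.0
        is_spike = False
        if len(self.history) >= self.min_history:
            baseline = mean(self.history)
            # Floor the spread so a perfectly flat baseline cannot divide by zero.
            spread = max(pstdev(self.history), 0.01)
            is_spike = (rate - baseline) / spread > self.z_threshold
        # Keep spikes out of the baseline so an incident does not normalize itself.
        if not is_spike:
            self.history.append(rate)
        return is_spike


monitor = EscalationSpikeMonitor()
for _ in range(8):
    monitor.observe(escalations=5, conversations=100)   # normal hours
print(monitor.observe(escalations=30, conversations=100))  # sudden jump in escalations
```

In practice the alert would feed the governance board's review queue rather than print to a console, and the same pattern could track conflicting answers per intent.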

💡Pro Tip: Don’t rush to deploy chatbots simply because they run on the latest model. Prioritize compliance, governance, and vendor transparency.

Work only with reputable AI solution providers, such as Sprinklr, that include policy guardrails, audit trails and clear fallback paths so that every response can be traced, corrected and owned when something goes wrong.

2. Channel fragmentation and context loss

Switching between channels (email, chat, voice, social) can strip context and force agents to rebuild the story manually. Research in Harvard Business Review (HBR) shows that agents spend about 9% of their annual time simply reorienting themselves after switching contexts. This constant toggling can reduce functional IQ by approximately 10 points during tasks and increase error rates by around 25%, as agents often become entangled in the software instead of resolving the issue.

A notable example is Telefónica Hispanoamérica. It consolidated its fragmented contact center platforms into a unified, AI-enabled CCaaS solution that preserved interaction history and improved routing. The result: consistent context across channels, better compliance oversight, and higher operational efficiency.

How to address it:

- Invest in a unified, AI-driven omnichannel platform that maintains history and context across touchpoints.

- Use dynamic routing that matches customer intent to the right agent based on past interactions and skill sets.

💡Pro Tip: Invest in a unified, AI-powered omnichannel routing platform rather than point tools for each channel. A single system should preserve context, carry the whole history into every interaction, and hand off complete information to agents at any touchpoint, including social and messaging.

Modern platforms even utilize AI to dynamically assign cases to the best-suited agent based on past interactions, skills, CSAT scores and availability, thereby enhancing both customer experience and business outcomes with each handoff.
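To make the routing idea concrete, here is a simplified sketch of skill- and history-aware assignment. It is not how any particular platform implements routing: the `Agent` fields, the scoring weights, and the function names are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Agent:
    name: str
    skills: set[str]
    avg_csat: float                      # assumed 1.0-5.0 scale
    available: bool = True
    past_customers: set[str] = field(default_factory=set)


def score(agent: Agent, intent: str, customer_id: str) -> float:
    """Hypothetical score: 0 if the agent cannot take the case, higher is better."""
    if not agent.available or intent not in agent.skills:
        return 0.0
    value = agent.avg_csat / 5.0         # normalize CSAT to 0-1
    if customer_id in agent.past_customers:
        value += 0.5                     # assumed bonus for prior context with this customer
    return value


def route(agents: list[Agent], intent: str, customer_id: str) -> Optional[Agent]:
    """Return the best-scoring agent, or None if nobody qualifies."""
    best = max(agents, key=lambda a: score(a, intent, customer_id), default=None)
    if best is None or score(best, intent, customer_id) == 0.0:
        return None
    return best


agents = [
    Agent("Ana", {"billing"}, avg_csat=4.6),
    Agent("Ben", {"billing", "outage"}, avg_csat=4.2, past_customers={"c-42"}),
]
print(route(agents, "billing", "c-42").name)  # Ben: prior history outweighs the CSAT gap
```

The weights are the design decision that matters here: a larger history bonus favors continuity, while a smaller one favors the highest-rated available agent.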

3. Data infrastructure gaps that break automation

AI only performs as well as the data it consumes. When customer data is siloed across legacy systems, ungoverned spreadsheets, and disconnected CRMs, automation fails, or worse, delivers inconsistent or insecure outcomes.

The risk isn’t hypothetical. In a 2023 incident at Samsung, engineers accidentally leaked proprietary code into an external model due to a lack of governance. It is a cautionary lesson for customer data workflows as well.

How to address it:

- Implement enterprise-grade data loss prevention and secure AI gateways.

- Consolidate critical CX data into a unified, governed layer before enabling automation.

- Create secure sandboxes for experimentation to reduce the use of public tools with sensitive data.
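One small piece of a secure AI gateway is redacting obvious identifiers before a prompt leaves your environment. The sketch below is illustrative only: the patterns, the placeholder labels, and the `redact` function are assumptions, and enterprise-grade data loss prevention covers far more than two regular expressions.

```python
import re

# Assumed patterns for illustration; real DLP tooling uses much broader detection.
EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
CARD = re.compile(r"\b\d(?:[ -]?\d){12,15}\b")  # 13-16 digits, optional spaces or hyphens


def redact(text: str) -> str:
    """Replace email addresses and card-like numbers before text is sent to an external model."""
    text = EMAIL.sub("[EMAIL]", text)
    return CARD.sub("[CARD]", text)


print(redact("Reach me at jo@example.com, card 4111 1111 1111 1111"))
# Reach me at [EMAIL], card [CARD]
```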

4. Declining agent capability from over-reliance on automation

AI that absorbs every routine task can quietly weaken your human team. A 2025 study highlights a pattern of “cognitive and emotional de-skilling.”

Junior agents often skip simple cases and are pushed straight into complex issues without developing the necessary fundamentals. When automation fails, human performance slows by 17.7% because agents no longer have the expertise or confidence to resolve problems manually.

The UnitedHealth case illustrates the risk: a predictive model in healthcare reportedly skewed decisions and pressured human reviewers to align with flawed outputs. It is a cautionary tale about over-trusting automation.

How to address it:

- Use AI as an assistive co-pilot, not a replacement for agent judgment.

- Provide tools that summarize context, suggest responses, and prefill data while leaving nuanced decisions to humans.

- Build learning flows where agents receive feedback and coaching informed by AI.

💡 Pro Tip: To augment and not replace human capability, invest in agent copilot solutions that handle repetitive actions in customer service.

These tools can summarize past interactions, draft brand-compliant responses, fill case dispositions, and update CRM records, while leaving complex judgment calls with humans. Keep humans in the loop on critical decisions so that skills grow alongside automation, rather than fading when the system takes over.

5. Erosion of trust when automation has no empathy

Even when AI is technically correct, it can still fail if it comes across as cold, evasive or unhelpful. A 2025 study found that 56% of professionals view “AI with no empathy” as the biggest obstacle to successful human-AI integration in support. This concern outranks issues like misrouted queries or slow human backup.

Taco Bell’s experience in August 2025 shows how this plays out in public. The brand’s AI ordering system became the target of viral videos in which customers abused it, including a now-famous clip of someone ordering 18,000 cups of water solely to force a handoff to a human.

The bot felt like a wall instead of a helper, with no clear way to say “I need a person.” Customers turned to absurd hacks, mocking the system and the brand. Trust in both the AI and the company took a hit.

How to address it:

- Build explicit, easy human “escape hatches” into every automated flow.

- Tune AI systems to notice frustration signals and escalate early.

- Track resolution sentiment and error acknowledgement as part of your AI performance metrics.
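The first two points can be combined into a simple escalation check. The sketch below is a rule-based stand-in, assuming a hypothetical `Turn` record and arbitrary thresholds. A real system would likely use a sentiment model instead of keyword matching.

```python
from dataclasses import dataclass


@dataclass
class Turn:
    text: str       # what the customer said
    intent: str     # intent label assigned by the bot (assumed to exist upstream)
    resolved: bool  # whether the bot considered this turn resolved


# Assumed phrases that signal the customer wants a person.
HANDOFF_PHRASES = ("human", "agent", "real person", "representative")


def should_escalate(turns: list[Turn], max_repeats: int = 2, max_failures: int = 2) -> bool:
    """Escalate on an explicit request, a repeated intent, or too many failed turns."""
    if not turns:
        return False
    latest = turns[-1]
    # Escape hatch: an explicit request for a person always wins.
    if any(phrase in latest.text.lower() for phrase in HANDOFF_PHRASES):
        return True
    repeats = sum(1 for t in turns if t.intent == latest.intent)
    failures = sum(1 for t in turns if not t.resolved)
    return repeats > max_repeats or failures > max_failures


conversation = [
    Turn("Where is my order?", "order_status", False),
    Turn("I already asked, where is it?", "order_status", False),
    Turn("Still nothing. Where is my order?", "order_status", False),
]
print(should_escalate(conversation))  # True: same intent three times, none resolved
```

Keeping the explicit-request check first means a customer never has to resort to workarounds to reach a person.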

These are the key challenges enterprises face, even in an era of AI-powered customer service. But what happens when they go unaddressed? Let’s take a look.

Impact of AI-driven challenges on customer experience

The impact of AI-driven service challenges will differ by enterprise, depending on size, channel mix, and customer expectations. Still, a few core metrics suffer across most organizations.

| CX metric | How AI-driven challenges affect it | How to control the impact |
| --- | --- | --- |
| Customer satisfaction (CSAT) | Hallucinated responses, cold bots and dead-end flows quickly drop satisfaction scores. For example, a policy error from a chatbot during a refund request can turn a loyal customer into a detractor after a single bad interaction. | Route high-risk intents (such as billing disputes, security, health and outages) to human or human-in-the-loop flows. Track CSAT by intent and by bot vs. human, and set guardrails where AI is not allowed to lead without human review. |
| First contact resolution (FCR) | Poor context, shallow integrations, or inaccurate AI responses lead to repeat contacts. A customer who explains their issue to a bot and then repeats everything to an agent counts as multiple touches for a problem that should have been resolved once. | Use agentic AI to maintain complete context across channels and handoffs. Measure FCR at the journey level, not per channel, and review failed first contacts to identify where AI lacked data, tools or authority to resolve. |
| Average handle time (AHT) | When AI fails mid-flow, agents receive half-resolved cases that take longer to untangle. For example, an agent may spend extra minutes correcting a bot's incorrect actions before addressing the original issue. | Deploy AI as a copilot that prepares context, suggests responses and pre-fills forms, while limiting fully autonomous actions to well-tested use cases. Monitor AHT by “AI touched” vs. “no AI” interactions and adjust automation scope accordingly. |
| Containment and escalation quality | Over-aggressive containment targets keep customers locked in unhelpful bot loops. They escalate only after customer frustration peaks, which increases effort and harms customer loyalty, even if the final resolution is correct. | Set dual targets for containment and customer effort. Build clear triggers for early escalation based on sentiment, repeated intents or failed attempts, so the system hands off to humans before damage is done. |
| Compliance and risk incidents | Inaccurate AI guidance on eligibility, refunds or regulations can create chargebacks, regulatory complaints or legal exposure. A single wrong response on a sensitive topic can cost far more than the saved handle time. | Involve legal, risk and compliance teams in AI design from the start. Safelist which policies AI can apply directly, keep sensitive decisions “recommend only,” and review AI output regularly against audit samples. |
| Agent performance and turnover | Over-reliance on automation can deskill agents and push them into cleanup work. They handle only escalations and angry customers, which leads to burnout and higher attrition, even if headline productivity appears to improve. | Use AI to remove low-value tasks, not all simple cases. Keep a structured mix of case types for agents, invest in coaching using AI-generated summaries and track engagement and turnover by queue to detect unhealthy patterns early. |
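Several of the controls above depend on segmenting metrics, such as CSAT by intent and bot vs. human, or AHT by “AI touched” vs. “no AI.” A minimal sketch of that segmentation follows. The record fields (`intent`, `handled_by`, `ai_touched`, `csat`, `handle_seconds`) are assumed names for illustration and would map to whatever your reporting export provides.

```python
from collections import defaultdict
from statistics import mean


def mean_by(records: list[dict], keys: tuple[str, ...], metric: str) -> dict:
    """Average `metric` for each combination of the fields named in `keys`."""
    groups = defaultdict(list)
    for record in records:
        groups[tuple(record[k] for k in keys)].append(record[metric])
    return {segment: mean(values) for segment, values in groups.items()}


# Illustrative sample data, not real results.
interactions = [
    {"intent": "billing", "handled_by": "bot", "ai_touched": True, "csat": 2, "handle_seconds": 600},
    {"intent": "billing", "handled_by": "human", "ai_touched": False, "csat": 4, "handle_seconds": 420},
    {"intent": "billing", "handled_by": "bot", "ai_touched": True, "csat": 3, "handle_seconds": 480},
    {"intent": "order_status", "handled_by": "bot", "ai_touched": True, "csat": 5, "handle_seconds": 120},
]

csat_by_segment = mean_by(interactions, ("intent", "handled_by"), "csat")
aht_by_ai = mean_by(interactions, ("ai_touched",), "handle_seconds")
print(csat_by_segment[("billing", "bot")], csat_by_segment[("billing", "human")])
print(aht_by_ai[(True,)], aht_by_ai[(False,)])
```

In this made-up sample, billing handled by the bot scores lower than billing handled by a human, which is the kind of gap that would justify routing that intent to a human-in-the-loop flow.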

Move beyond ordinary AI challenges and unlock workflow-led growth

The hurdles above are real, and the strategies to address them are essential. But the next stage of enterprise customer service isn’t just about fixing today’s problems — it’s about designing workflows where AI and humans complement each other.

Sprinklr’s AI agents are built to be customized from the ground up for your enterprise. They operate with a compliance-first and data-security-first architecture, and they can automate the resolution of complex service workflows while staying aligned with your brand’s rules, processes and tone. At the same time, they include proper guardrails to keep humans in the loop wherever oversight or judgment is needed.

How could this work for your enterprise? Let our specialists walk you through a personalized, free demo.

Frequently Asked Questions

The biggest challenge is maintaining accuracy and trust while scaling automation. AI can answer faster, but a single incorrect or careless response regarding refunds, security or policy can damage loyalty and create risk. Leaders must balance automation with robust guardrails, human oversight, and clear ownership for every AI decision.

Most problems come from broken workflows, not just underperforming agents or tools. Fragmented systems, missing context between channels, unclear policies, and poor data quality force customers to repeat themselves and require agents to make assumptions. AI on top of this mess only amplifies the chaos unless the underlying processes and data are cleaned up.

No. Automation removes repetitive work and speeds up simple tasks, but it does not eliminate the need for judgment, empathy or accountability. If you automate a broken process, you just make the problem hit more customers, faster. The goal is to automate well-designed workflows and keep humans focused on exceptions, edge cases, and emotional situations.

Leaders should start where business impact and feasibility intersect. The best early candidates are high‑volume, clearly defined use cases supported by reliable data, such as password resets, simple status queries, or standard order changes. Fix the process first, then automate it. More complex or high‑risk scenarios should follow in later phases, once governance frameworks, data quality, and human‑in‑the‑loop models are firmly in place.

Yes, when used correctly. AI‑driven analytics can analyze tickets, chats, calls, and social conversations to identify recurring issues, early churn signals, and friction points across customer journeys. However, prevention only happens when these insights lead to concrete changes in product design, policies, and workflows. Leaders must also track whether key outcomes, such as repeat contacts, complaint volume, and time to resolution, actually improve as a result.