The strategic AI-native platform for customer experience management

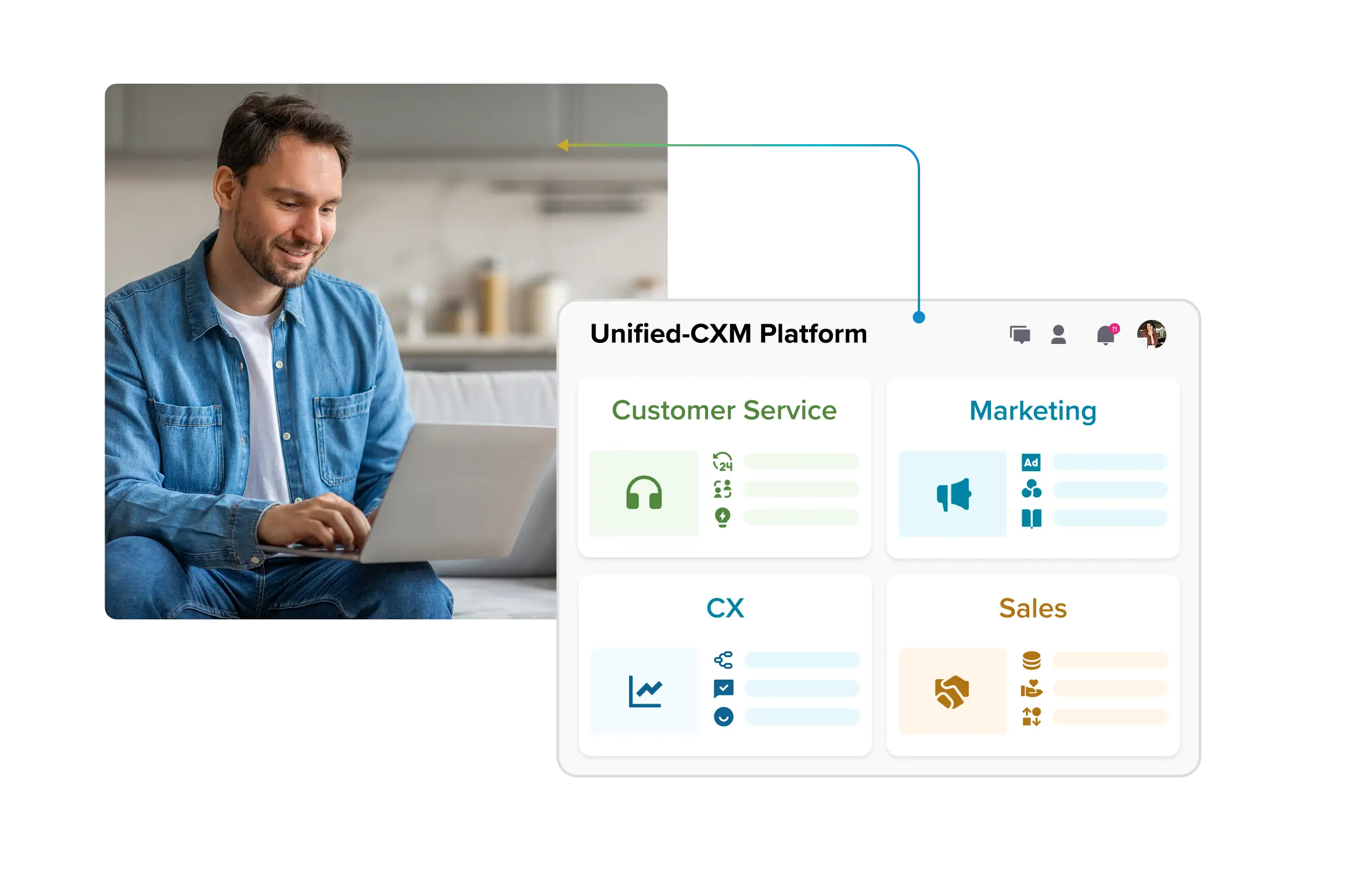

Unify your customer-facing functions, from marketing and sales to customer experience and service, on a customizable, scalable and fully extensible AI-native platform.

The Future of Enterprise AI is Model-Dynamic and Open

What’s needed is a fundamental architectural shift: from static, LLM-centric infrastructure to a dynamic, modular and governed environment built specifically for agent-based intelligence.

– McKinsey

The first wave of generative AI brought excitement (and fragmentation). Enterprises rushed to integrate large language models (LLMs) into their workflows, bolting on orchestration layers, memory stores and connectors.

But the early architecture of AI adoption is hitting its limits.

Point solutions, isolated pilots and siloed experiments preclude enterprise-grade scale, trust and agility, and they leave behind an ever-growing pile of technical debt.

The way enterprises adopt AI today will determine whether it becomes a force multiplier or a ceiling to their potential.

But most organizations face the same dilemma:

- Locking into a single AI model or vendor risks obsolescence as innovation accelerates.

- Piecemeal AI deployments create silos, fragmentation and governance challenges.

Enter the era of model-dynamic, open AI (not OpenAI) platforms.

An open platform approach to AI helps organizations adapt, evolve and thrive in an unpredictable AI landscape where vendors come and go and new models appear and disappear in the blink of an eye.

Why an open AI platform is an enterprise advantage

The AI ecosystem is innovating faster than any individual provider. New LLMs emerge monthly. Domain-specific AI is outpacing general-purpose models in precision. And agentic AI is opening new frontiers in automation and decision-making.

In a world where the half-life of an AI model is a few months, built-in adaptability is the only reasonable strategy. An open AI platform ensures:

- You get the most value from your AI. You gain access to industry-leading AI models along with your own custom models.

- Your teams don’t get trapped in a black box. You can evaluate, select and orchestrate the right model for the right job.

- Innovation flows in both directions. Your enterprise can take advantage of the next breakthrough, rather than being bound to the last one.

Sprinklr’s open platform architecture lets customers safely work with any model, including Sprinklr’s in-house LLMs or even their own (bring your own model, BYOM), while giving them full control over providers and configurations.

What is model-dynamic?

At Sprinklr, we have always shied away from the term “LLM-agnostic.” Yes, we support all major AI providers, including Amazon Bedrock, Gemini, OpenAI and Azure OpenAI, alongside our in‑house LLMs built on Mistral, Qwen, Falcon, Llama and other open‑source models. But “LLM‑agnostic” suggests that models are fungible and interchangeable, as if swapping GPT‑4o for Gemini 2.5 Pro would produce the same outputs.

That, however, is not the reality.

At Sprinklr, we orchestrate AI: a single use case can be powered by multiple models and providers, with each task executed by the model best suited to that specific function.

For example, a standard Product Insights workflow uses multiple AI types, providers and models.

We prescribe, but we never restrict. We offer a recommended path, but we also expose our AI orchestration and configuration layer, allowing enterprises that have the expertise to take the wheel and customize AI to their needs within the Sprinklr platform.
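The idea of routing each task in one use case to its own best-fit model, with a recommended default that can be overridden, can be sketched as a small configuration table. This is a minimal, hypothetical illustration in Python, not Sprinklr’s actual configuration format or API: the task names, provider labels, the `intent-classifier-small` and `general-llm` model names, and the per-task `temperature`, `top_p` and `top_k` values are all invented for the example.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional, Tuple


@dataclass(frozen=True)
class ModelChoice:
    """Which provider/model runs a task, plus its sampling parameters."""
    provider: str
    model: str
    temperature: float = 0.2
    top_p: float = 1.0
    top_k: Optional[int] = None  # not every provider exposes top-k


# Hypothetical routing table: each task in one use case goes to its own model.
ROUTES: Dict[str, ModelChoice] = {
    "classify_intent": ModelChoice("in-house", "intent-classifier-small", temperature=0.0),
    "summarize": ModelChoice("azure-openai", "gpt-4o", temperature=0.3, top_p=0.9),
    "draft_reply": ModelChoice("gemini", "gemini-2.5-pro", temperature=0.7, top_k=40),
}

# Recommended default used when a task has no explicit override.
DEFAULT = ModelChoice("in-house", "general-llm")


def route(task: str) -> ModelChoice:
    """Return the configured model for a task, falling back to the default."""
    return ROUTES.get(task, DEFAULT)


def plan(tasks: List[str]) -> List[Tuple[str, str]]:
    """List which provider:model would execute each step of a workflow."""
    return [(task, f"{route(task).provider}:{route(task).model}") for task in tasks]


if __name__ == "__main__":
    for task, target in plan(["classify_intent", "summarize", "draft_reply", "translate"]):
        print(f"{task} -> {target}")
```

In this sketch the `DEFAULT` entry plays the role of the recommended path, while editing a single entry in `ROUTES` corresponds to an enterprise taking the wheel for one step without touching the rest of the workflow.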

Transforming AI from a black box into a transparent system

At Sprinklr, we give enterprises full visibility and control over how they orchestrate and manage GenAI without forcing them into a rigid workflow. You get Sprinklr-recommended defaults to accelerate time‑to‑value, but every layer is open for customization.

1. Choose models and providers: Select the models and providers that best fit your enterprise. Prefer Gemini or Azure OpenAI exclusively? Want to combine them with Sprinklr’s in‑house LLMs? You have complete flexibility.

2. Own your prompt engineering: We expose the full prompt layer so you can select models per prompt, edit instructions, add brand‑specific examples and even tune sampling parameters such as temperature, top‑p and top‑k to match your output style and risk tolerance.

3. Orchestrate scalable AI workflows: Build multi‑step AI pipelines that scale across countries, languages, brands and business units. Orchestrate multiple models in a single workflow, with each task routed to the best‑fit AI for that specific job.

4. Fine‑tuning, BYOK and BYOM: Enterprises can fine‑tune models directly within Sprinklr, bring their own keys (BYOK) to consume existing vendor credits, or bring their own models (BYOM).

5. Guardrails and compliance: Protect your enterprise with AI‑powered guardrails that detect harmful or toxic content, enforce prompt safety and automatically mask PII through keyword‑based or AI‑based detection.

6. Auditability and transparent governance: Maintain full trust in your AI with usage logs, feedback tracking and monitoring dashboards. Audit decisions, explain outputs and govern every model with confidence.
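The PII-masking guardrail described in item 5 can be illustrated with a deliberately small keyword- and pattern-based sketch. It is hypothetical, not Sprinklr’s implementation: the two regular expressions and the `[EMAIL]`, `[PHONE]` and `[REDACTED]` placeholders are assumptions, the phone pattern is crude (it would also mask some dates and long numeric IDs), and AI-based detection is out of scope here.

```python
import re
from typing import Iterable

# Hypothetical pattern rules; real deployments need far broader coverage.
PII_PATTERNS = {
    "EMAIL": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # Crude: optional "+", then 9+ characters of digits, spaces or hyphens,
    # starting and ending with a digit.
    "PHONE": re.compile(r"\+?\d[\d\s-]{7,}\d"),
}


def mask_pii(text: str, keywords: Iterable[str] = ()) -> str:
    """Replace pattern matches and caller-supplied keywords with placeholders."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    for word in keywords:
        # Case-insensitive literal match for keyword-based redaction.
        text = re.sub(re.escape(word), "[REDACTED]", text, flags=re.IGNORECASE)
    return text


if __name__ == "__main__":
    print(mask_pii("Call me at +1 415-555-0100 or mail jane.doe@example.com"))
    print(mask_pii("Order placed by Jane Doe", keywords=["jane doe"]))
```

In a setup like this, masking would run before text is sent to any provider, so a single pass protects every model a workflow routes to; an AI-based detector could be added as another pass over the same text.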

Winning with AI

Enterprises that thrive in this new era will:

- Treat AI as a system, not a feature

- Orchestrate multiple AI types seamlessly, avoiding dependency on any single model

- Invest in open, adaptable platforms that evolve with the market

- Build governance into the core of their AI strategy

At Sprinklr, this philosophy guides everything we build. And as new models emerge, whether it’s GPT-OSS or GPT-5, our customers will always be future-ready.