The strategic AI-native platform for customer experience management

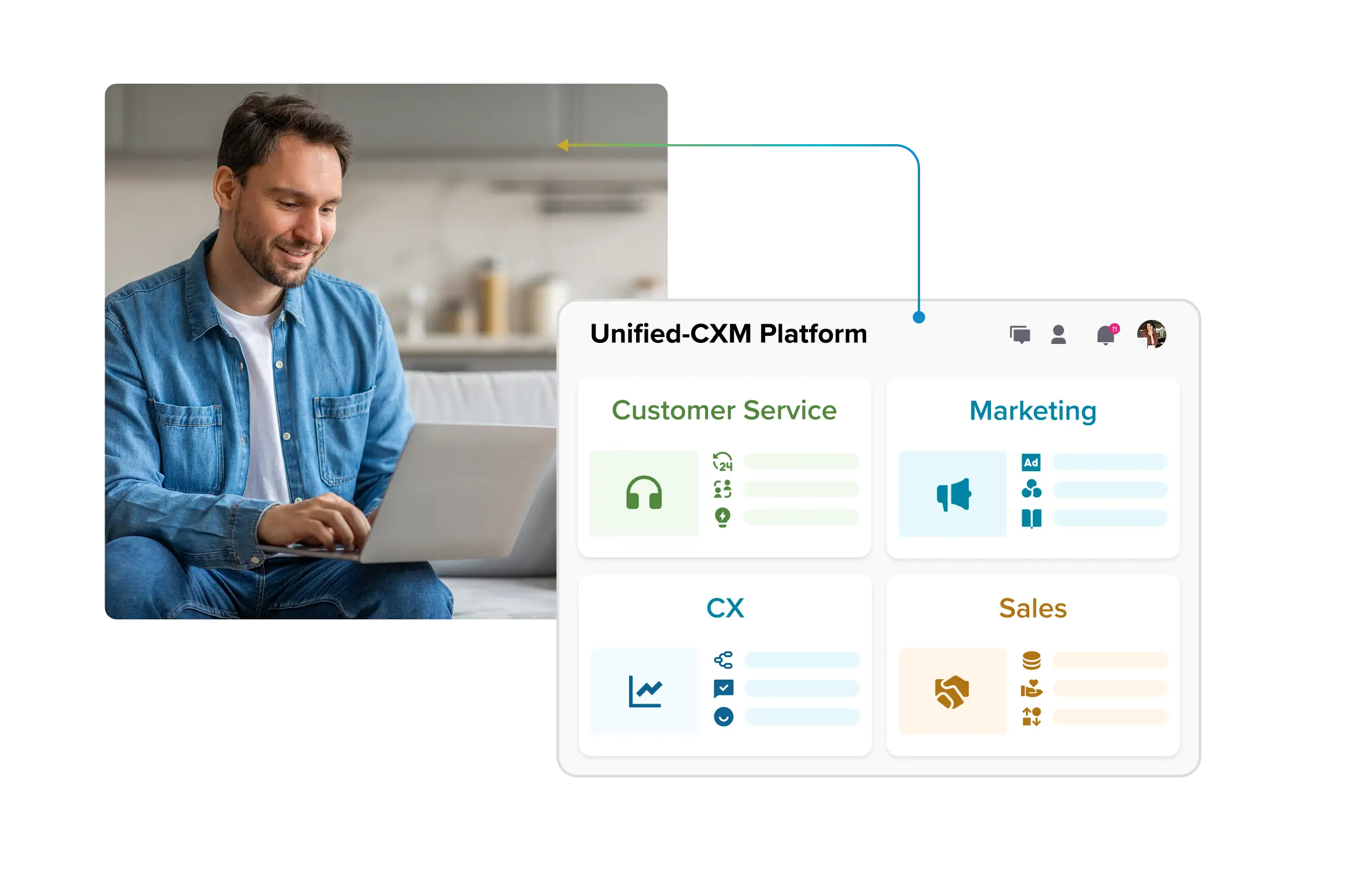

Unify your customer-facing functions, from marketing and sales to customer experience and service, on a customizable, scalable, and fully extensible AI-native platform.

The Hidden Costs of AI Agent Fragmentation in Customer Service (+How to Avoid Them)

Enterprise customer service organizations face a complex challenge: managing multiple AI models across different use cases without a unified strategy. Teams typically start with OpenAI for general customer queries, then find they need different models for specialized tasks, each requiring its own integration, security review, and operational overhead.

A fragmented approach like this creates mounting costs that extend far beyond licensing fees. When organizations want to optimize for price, test new capabilities, or adopt emerging protocols like Model Context Protocol, they discover that their business logic, APIs, and integrations are tightly coupled to specific vendors.

Enterprise buyers poured $4.6 billion into generative AI applications in 2024, an almost 8x increase from $600 million in 2023, but much of this investment is scattered across disconnected tools and platforms.

Sound familiar? You're not alone. Customer service organizations worldwide are struggling with the same challenge: teams deploy multiple AI models across different use cases with no coordination, creating a complex web of integrations, duplicate security reviews, and mounting operational costs.

The result isn't just inefficiency—it's a hidden cost center that's growing with every new AI deployment.

The true cost of model fragmentation in customer service

Organizations typically deploy three or more foundation models in their AI stacks, routing to different models depending on the use case, and this number is growing rapidly. McKinsey research shows that 65% of organizations are now regularly using generative AI, nearly double the percentage from just ten months ago. While this multi-model approach offers flexibility, it comes with substantial hidden costs that enterprise leaders are only beginning to understand.

1. The integration tax

Every new model means new APIs, custom middleware, and bespoke integrations. Your customer service platform might connect to one vendor that uses OpenAI for chat responses and to another that relies on models such as Gemini or those served through Bedrock. Each integration requires development time, testing, and ongoing maintenance. When you want to experiment with a new model or switch providers to optimize cost, you're looking at weeks of integration work, additional costs, or, at the very least, administrative overhead.

2. Security and compliance overhead

Each AI model vendor requires separate security reviews, compliance audits, and risk assessments. For regulated industries, this means multiple vendor assessments, separate data processing agreements, and distinct privacy impact evaluations. Gartner forecasts global security and risk management spending to reach $215 billion in 2024, driven in part by the "rapid emergence and use of generative AI."

In conversations with customers across industries, we learned that they had to conduct a separate security review for each AI provider. With three different models in production, that meant three different compliance processes, three separate vendor risk assessments, and different sets of data handling procedures. The overhead becomes a full-time job for the compliance team.

3. The debugging nightmare

When something goes wrong — and it will — scattered logging and monitoring across multiple platforms turns troubleshooting into a multi-day investigation. Your OpenAI-powered chatbot logs are in one system, your agent assist tool interactions are tracked separately, and your custom customer service integrations have their own monitoring setup.

This fragmentation becomes particularly painful during customer escalations. Your support team can't quickly trace a conversation flow when it spans multiple AI systems, from initial chatbot interaction to agent assist recommendations, leading to longer resolution times and frustrated customers.

4. Vendor lock-in and business logic coupling

Perhaps the most insidious cost is the invisible one: business logic that becomes tightly coupled to specific model APIs. Your escalation rules, response formatting, and integration patterns become model-specific, creating switching costs that grow over time.

One enterprise AI leader noted: "We realized our entire customer service workflow was built around OpenAI's specific response format. When we wanted to test a different model to improve accuracy, we discovered that switching would require rebuilding not just the integration, but our entire response processing pipeline."

McKinsey research confirms this challenge. Even high-performing organizations struggle with "developing processes for data governance and developing the ability to quickly integrate data into AI models," highlighting the integration complexity that locks organizations into specific platforms.

The framework for model-agnostic customer service

Smart organizations are building their customer service AI infrastructure around four critical pillars that eliminate fragmentation costs while maintaining flexibility:

1. Unified security layer

Instead of managing separate security policies for each model, implement model-agnostic guardrails and data protection:

- Input and Output Guardrails: Security controls that work regardless of the underlying LLM, protecting against prompt injection, data leakage, and inappropriate responses

- Centralized Masking: PII and sensitive data protection that doesn't need reconfiguration for each model

- Single Compliance Audit: One security review covers all model interactions, dramatically reducing compliance overhead
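As an illustration of centralized masking, here is a minimal Python sketch in which the same masking step wraps every model call, so nothing has to be reconfigured per provider. The names `PII_PATTERNS`, `mask_pii`, and `guarded_call` are hypothetical, and the two regular expressions are deliberately simplistic assumptions; real PII detection needs far broader coverage.

```python
import re
from typing import Callable

# Hypothetical, deliberately simple patterns (assumptions for illustration only).
PII_PATTERNS = {
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def mask_pii(text: str) -> str:
    """Replace every match with a placeholder naming the pattern that matched."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[{label}]", text)
    return text


def guarded_call(model: Callable[[str], str], prompt: str) -> str:
    """Mask the prompt before any model sees it, then mask the model's output.

    `model` is any callable that maps a prompt to a response, so the same
    guardrail applies regardless of which provider sits behind it.
    """
    return mask_pii(model(mask_pii(prompt)))
```

Because `guarded_call` accepts any prompt-to-response callable, adding a provider means writing a new callable rather than a new masking configuration.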

2. Model-agnostic business logic

The key insight is separating your business logic from model-specific implementations:

- Unified API Layer: All models accessed through a consistent interface

- Provider-Independent Workflows: Escalation rules, response formatting, and integration patterns that survive model changes

- Flexible Routing: Ability to route different query types to optimal models without rebuilding integrations
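One way to picture this separation is a thin routing layer: business logic talks only to a provider-independent reply type, and each vendor sits behind a small adapter. The sketch below is an assumption-laden illustration, not a description of any specific product; `Reply`, `ModelRouter`, and `make_stub` are hypothetical names, and the stub adapters stand in for real vendor SDK calls.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class Reply:
    """Provider-independent response shape that downstream logic depends on."""
    text: str
    provider: str


# An adapter maps a prompt to a Reply; each vendor SDK would be wrapped in one.
Adapter = Callable[[str], Reply]


def make_stub(name: str) -> Adapter:
    """Build a stand-in adapter (hypothetical; a real one would call a vendor API)."""
    def adapter(prompt: str) -> Reply:
        return Reply(text=f"[{name}] {prompt}", provider=name)
    return adapter


class ModelRouter:
    """Routes each query type to a registered provider, with a default fallback."""

    def __init__(self, default: str) -> None:
        self._adapters: Dict[str, Adapter] = {}
        self._routes: Dict[str, str] = {}
        self._default = default

    def register(self, name: str, adapter: Adapter) -> None:
        self._adapters[name] = adapter

    def route(self, query_type: str, provider: str) -> None:
        self._routes[query_type] = provider

    def complete(self, query_type: str, prompt: str) -> Reply:
        provider = self._routes.get(query_type, self._default)
        return self._adapters[provider](prompt)
```

In this sketch, moving billing questions to a different model is a single `route("billing", ...)` call; escalation rules and response formatting that consume `Reply` do not change.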

3. Centralized logging and monitoring

Unified observability across all AI interactions:

- Single Dashboard: All model interactions, costs, and performance metrics in one place

- Consistent Metrics: Standardized measurement across different providers

- End-to-End Tracing: Complete conversation flow visibility, regardless of which models were involved
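A rough sketch of what consistent metrics and end-to-end tracing could look like: every model call, whatever the provider, is recorded in one shape and keyed by a conversation ID. The `Interaction` fields and the `InteractionLog` class are hypothetical, and the in-memory list is an assumption standing in for a real observability backend.

```python
import time
from dataclasses import dataclass, field
from typing import Dict, List


@dataclass
class Interaction:
    """One model call, recorded in the same shape for every provider."""
    conversation_id: str
    provider: str
    latency_ms: float
    cost_usd: float
    timestamp: float = field(default_factory=time.time)


class InteractionLog:
    """In-memory stand-in for a central log store (an assumption of this sketch)."""

    def __init__(self) -> None:
        self._records: List[Interaction] = []

    def record(self, item: Interaction) -> None:
        self._records.append(item)

    def trace(self, conversation_id: str) -> List[Interaction]:
        """Return every step of one conversation, oldest first, across providers."""
        steps = [r for r in self._records if r.conversation_id == conversation_id]
        return sorted(steps, key=lambda r: r.timestamp)

    def cost_by_provider(self) -> Dict[str, float]:
        """Total recorded cost per provider, for a single cost view."""
        totals: Dict[str, float] = {}
        for r in self._records:
            totals[r.provider] = totals.get(r.provider, 0.0) + r.cost_usd
        return totals
```

With a shared conversation ID, a support engineer can call `trace` once to follow a conversation from the initial chatbot exchange through agent assist, instead of searching several vendor consoles.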

4. Reliable escalation orchestration

Escalation mechanisms that don't depend entirely on LLM reliability:

- Keyword-Based Triggers: Fallback escalation rules based on conversation patterns

- Sentiment Thresholds: Automatic escalation when customer frustration is detected

- Time-Based Rules: Escalation triggers based on conversation length or resolution time

- Model-Independent Logic: Escalation decisions that work consistently across different AI providers
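The rules above can be sketched as plain, deterministic code that runs alongside whatever model is in use. Everything here is a hypothetical illustration: the keyword list, the thresholds, and the assumption that sentiment arrives as a score between -1.0 and 1.0 from some upstream component.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class ConversationState:
    last_message: str
    sentiment: float        # assumed scale: -1.0 (very negative) to 1.0 (very positive)
    turns: int
    elapsed_seconds: float


# Hypothetical thresholds; real values would come from policy and testing.
ESCALATION_KEYWORDS = ("cancel", "lawyer", "speak to a human")
SENTIMENT_FLOOR = -0.5
MAX_TURNS = 12
MAX_SECONDS = 600.0


def escalation_reason(state: ConversationState) -> Optional[str]:
    """Return why a conversation should go to a human, or None to continue.

    The checks use no model output, so they behave the same way no matter
    which provider generated the replies.
    """
    text = state.last_message.lower()
    if any(keyword in text for keyword in ESCALATION_KEYWORDS):
        return "keyword"
    if state.sentiment <= SENTIMENT_FLOOR:
        return "sentiment"
    if state.turns > MAX_TURNS or state.elapsed_seconds > MAX_SECONDS:
        return "time"
    return None
```

Returning a reason rather than a bare boolean keeps the audit trail readable: the log can show which rule fired for each escalated conversation.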

Real-world impact: What mature customers demand

Enterprise customers implementing AI in customer service consistently request two things that directly address fragmentation concerns:

Visibility and control: Mature customers have robust UAT processes and need to understand exactly how responses are generated. They want detailed logging, audit trails, and the ability to trace decision-making across their AI systems. This becomes impossible with fragmented, vendor-specific tools. Gartner research on AI governance emphasizes that organizations should constantly review governance, monitoring, testing and compliance frameworks in a rapidly evolving AI landscape.

Industry-specific guarantees: Depending on the industry, customers require specific compliance guarantees and risk controls. Financial services need different safeguards than healthcare or retail. A unified platform allows for consistent policy enforcement across all AI interactions, regardless of the underlying model.

Moving beyond fragmentation: The business case

When moving to a new LLM, organizations most commonly cite security and safety considerations (46%), price (44%), performance (42%), and expanded capabilities (41%) as motivations. A model-agnostic approach addresses all these concerns simultaneously.

Despite significant AI investments, McKinsey findings show that "more than 80 percent of respondents say their organizations aren't seeing a tangible impact on enterprise-level EBIT from their use of gen AI." This ROI challenge is often rooted in fragmented implementations that prevent organizations from capturing AI's full value.

Cost optimization: Switch models based on cost-effectiveness without integration overhead. Test new providers for specific use cases without rebuilding your entire stack.

Risk reduction: Single security review, unified compliance posture, and consistent monitoring across all AI interactions.

Innovation acceleration: Adopt new capabilities like Model Context Protocol or advanced reasoning models without waiting for vendor-specific implementations.

Operational efficiency: One team, one dashboard, one set of processes—regardless of how many AI models power your customer service.

Conclusion: Building for the future

The question isn't whether your customer service organization will use multiple AI models: 37% of respondents now use five or more models, up from 29% last year, and the trend is accelerating. The question is whether you'll manage this complexity strategically or let it evolve into expensive sprawl.

Organizations that build model-agnostic customer service platforms today will have a significant competitive advantage. They'll be able to optimize costs by switching models for specific use cases, adopt new capabilities faster, and maintain consistent security and compliance postures, all while avoiding the integration tax that's quietly draining AI budgets across the enterprise.

The future of customer service AI isn't about choosing the right model; it's about building the right architecture to leverage all models effectively. Those who recognize this shift early will capture the full value of AI while avoiding the hidden costs that come with fragmentation.

To discuss your business challenges and use cases in detail with Sprinklr experts, book a demo with us today.