Configure Guardrails in AI+ Studio


Guardrails in AI+ Studio help you enforce responsible AI usage by restricting the generation or processing of harmful, inappropriate, or policy-violating content. You can configure a Guardrail to monitor and restrict specific types of content generated or submitted through generative AI models.

This guide walks you through accessing, creating, and applying Guardrails in AI+ Studio. Detailed configuration steps for each Guardrail type, such as selecting harmful content categories or defining word filters, are covered in the respective Guardrail guides.

Prerequisites

Before configuring Guardrails in AI+ Studio, ensure that the following permissions and features are enabled in your Sprinklr environment:

AI+ Studio must be enabled in your Sprinklr instance.

You must have the following permissions based on your role:

View permission for AI+ Studio and Guardrails.

Edit permission for AI+ Studio and Guardrails.

Delete permission for Guardrails (optional; required only if you need to delete Guardrails).

The following table outlines how each permission affects your access to Guardrails:

Permission | Yes (Granted) | No (Not Granted) |

View | | |

Edit | | • The Add Guardrail CTA (+ Guardrail button) is not visible. |

Delete | | |
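The permission rules above can be summarized as a small, hypothetical Python model. Only the Edit row is documented in the table (without Edit, the Add Guardrail CTA is hidden). Tying View to Record Manager access, Edit to the Edit option, and Delete to deletion are assumptions based on the Prerequisites list, and the names `GuardrailPermissions` and `available_actions` are illustrative, not part of any Sprinklr API.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class GuardrailPermissions:
    """Hypothetical flags mirroring the View/Edit/Delete permissions above."""
    view: bool = False
    edit: bool = False
    delete: bool = False


def available_actions(perms: GuardrailPermissions) -> set:
    """Return the Guardrail actions a user could take (illustrative mapping)."""
    if not perms.view:
        # Assumption: without View permission, Guardrails are not accessible at all.
        return set()
    actions = {"open_record_manager"}
    if perms.edit:
        # Documented: without Edit, the Add Guardrail CTA is not visible.
        # Assumption: Edit also gates the Edit option in the vertical ellipsis menu.
        actions |= {"add_guardrail", "edit_guardrail"}
    if perms.delete:
        # From the Prerequisites list: Delete is needed only to remove Guardrails.
        actions.add("delete_guardrail")
    return actions


# Usage: a viewer-only role versus a role that can also edit.
print(sorted(available_actions(GuardrailPermissions(view=True))))
# ['open_record_manager']
print(sorted(available_actions(GuardrailPermissions(view=True, edit=True))))
# ['add_guardrail', 'edit_guardrail', 'open_record_manager']
```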

Access Guardrails

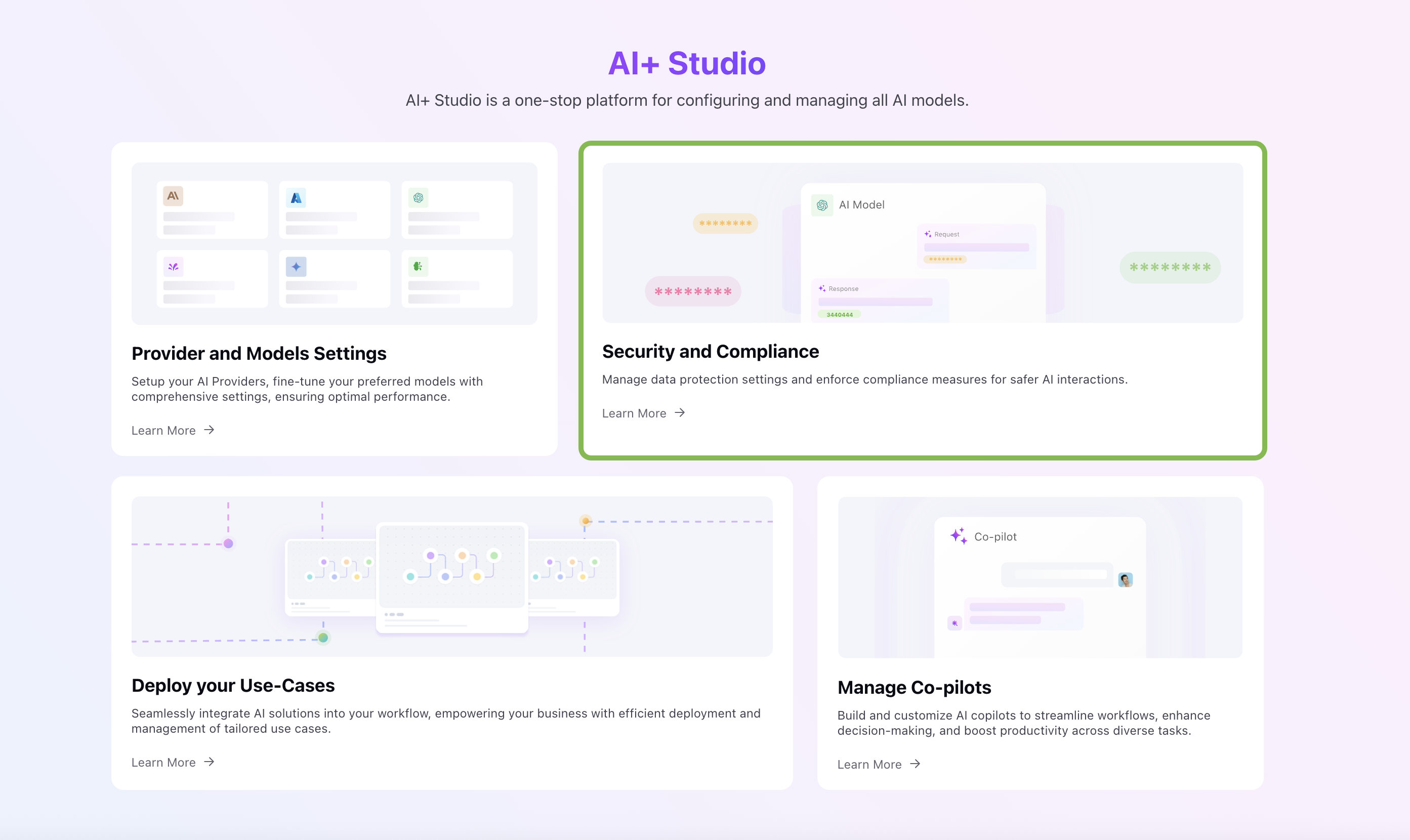

Navigate to AI+ Studio from the Sprinklr launchpad. Select the Security and Compliance card on the AI+ Studio dashboard.

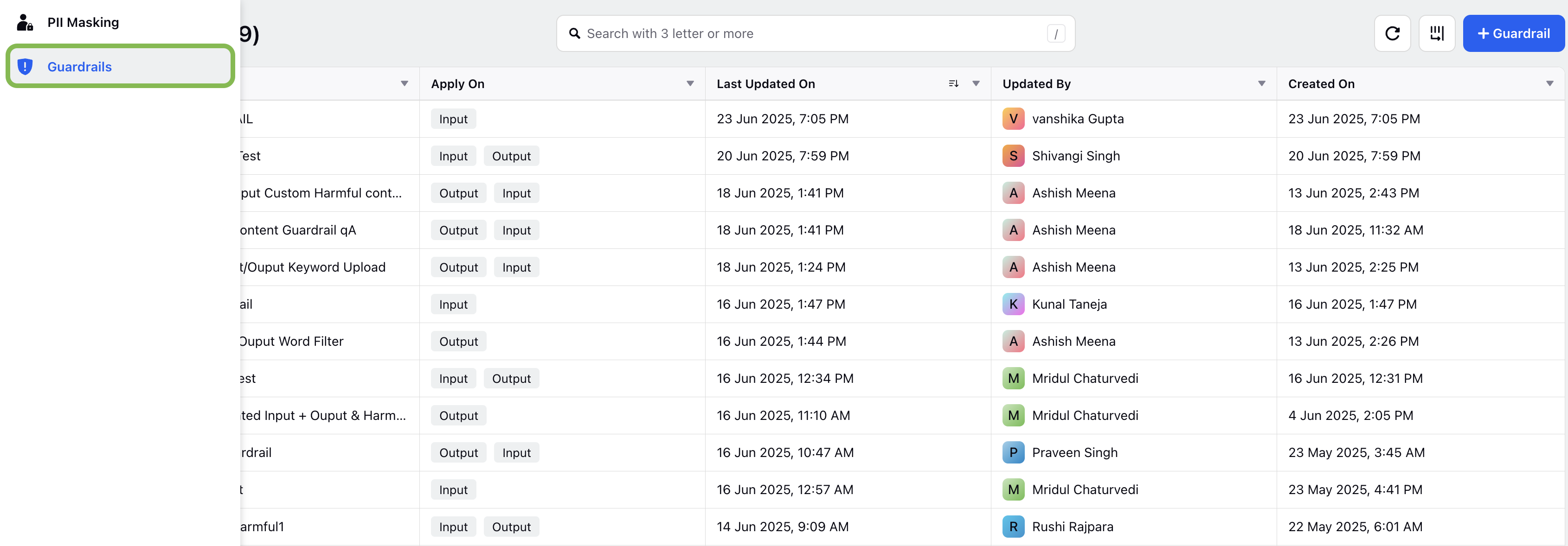

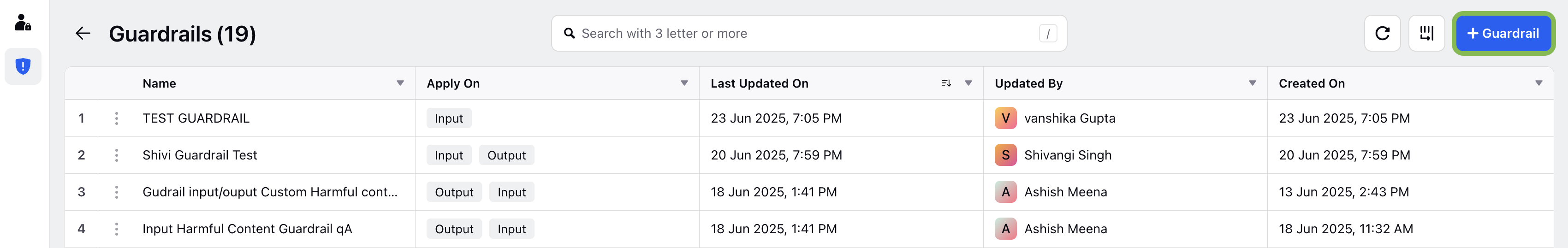

In the left navigation pane, select Guardrails. The Guardrails Record Manager opens, listing all the Guardrails configured in your environment.

To edit or delete an existing Guardrail, click the vertical ellipsis (⋮) next to its entry in the Record Manager and select Edit or Delete.

Create a Guardrail in AI+ Studio

On the Guardrails Record Manager screen, click the ‘+ Guardrail’ button to create a new Guardrail.

In the Select Guardrail window that opens, choose the Guardrail type from the dropdown list.

Click the Next button. You will be redirected to the configuration steps.

Supported Guardrails

The following Guardrails are supported in AI+ Studio. Refer to the respective guide for each type for detailed configuration steps.

Guardrail Type | Description |

Denied Topics | The Denied Topics Guardrail allows you to restrict the use of specific topics within the system. When you configure this guardrail, the platform actively monitors user interactions for any reference to the denied topics and blocks them from being processed. For more details, refer to Denied Topic Guardrail. |

Harmful Content | The Harmful Content Guardrail helps prevent the system from generating or engaging with unsafe, abusive, or violent material. This configuration is designed to maintain a safe environment for users and ensure compliance with organizational and regulatory standards. For more details, refer to Harmful Content Guardrail. |

Word Filters | The Word Filters Guardrail allows you to block specific words or phrases from appearing in user interactions. This configuration is useful for restricting profanity, sensitive terms, or any language that violates organizational policies. For more details, refer to Word Filters Guardrail. |

Prompt Guard (Standard Guardrail) | The Prompt Guard Guardrail acts as a protective layer that evaluates user inputs before they reach the model. Its purpose is to detect unsafe or malicious behavior and prevent the system from being manipulated. For more details, refer to Prompt Guard Guardrail. |

Protected Material (Standard Guardrail) | The Protected Material guardrail identifies content in model outputs that may contain copyrighted, trademarked, or otherwise legally protected material. This guardrail helps ensure that generated content complies with intellectual property and legal requirements. For more details, refer to Protected Material Guardrail. |

Note: Standard Guardrails cannot be created or deleted, but you can edit their configuration as needed.
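To illustrate the kind of matching a Word Filters Guardrail performs, the following Python sketch flags blocked words or phrases in a piece of text. It is not Sprinklr's implementation: case-insensitive, whole-word matching and the helper name `find_blocked_terms` are assumptions chosen for illustration.

```python
import re


def find_blocked_terms(text: str, blocked_terms: list) -> list:
    """Return every blocked word or phrase found in `text` (illustrative only).

    Matching is case-insensitive and whole-word: "cat" matches "the cat" but
    not "concatenate". Both rules are assumptions for this sketch.
    """
    terms = [t.strip() for t in blocked_terms if t.strip()]
    if not terms:
        return []  # An empty filter blocks nothing.
    # Try longer phrases first so "bad word" is reported instead of just "bad".
    terms.sort(key=len, reverse=True)
    alternation = "|".join(re.escape(t) for t in terms)
    # (?<!\w) and (?!\w) act as word boundaries that also work for terms
    # that start or end with punctuation.
    pattern = re.compile(rf"(?<!\w)(?:{alternation})(?!\w)", re.IGNORECASE)
    return [m.group(0) for m in pattern.finditer(text)]


print(find_blocked_terms("This is Top Secret info", ["top secret"]))  # ['Top Secret']
print(find_blocked_terms("concatenate the cat", ["cat"]))  # ['cat']
```

Whole-word matching avoids flagging harmless words that merely contain a blocked term; a real filter may use different rules.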

Use Guardrail in Deployment

You can apply Guardrails within the Prompt Node of a pipeline in AI+ Studio to restrict the generation of harmful content. Follow the steps below to add Guardrails to a deployment:

Go to the Deployments Record Manager for the relevant AI use case.

To update an existing deployment, click the More options (vertical ellipsis) next to the deployment name and select Edit Pipeline.

– Alternatively, you can create a new deployment and add Guardrails during configuration. For detailed steps, refer to Configure Deployments.

In the pipeline editor, select the Prompt Node you want to configure. The Prompt Configuration page opens.

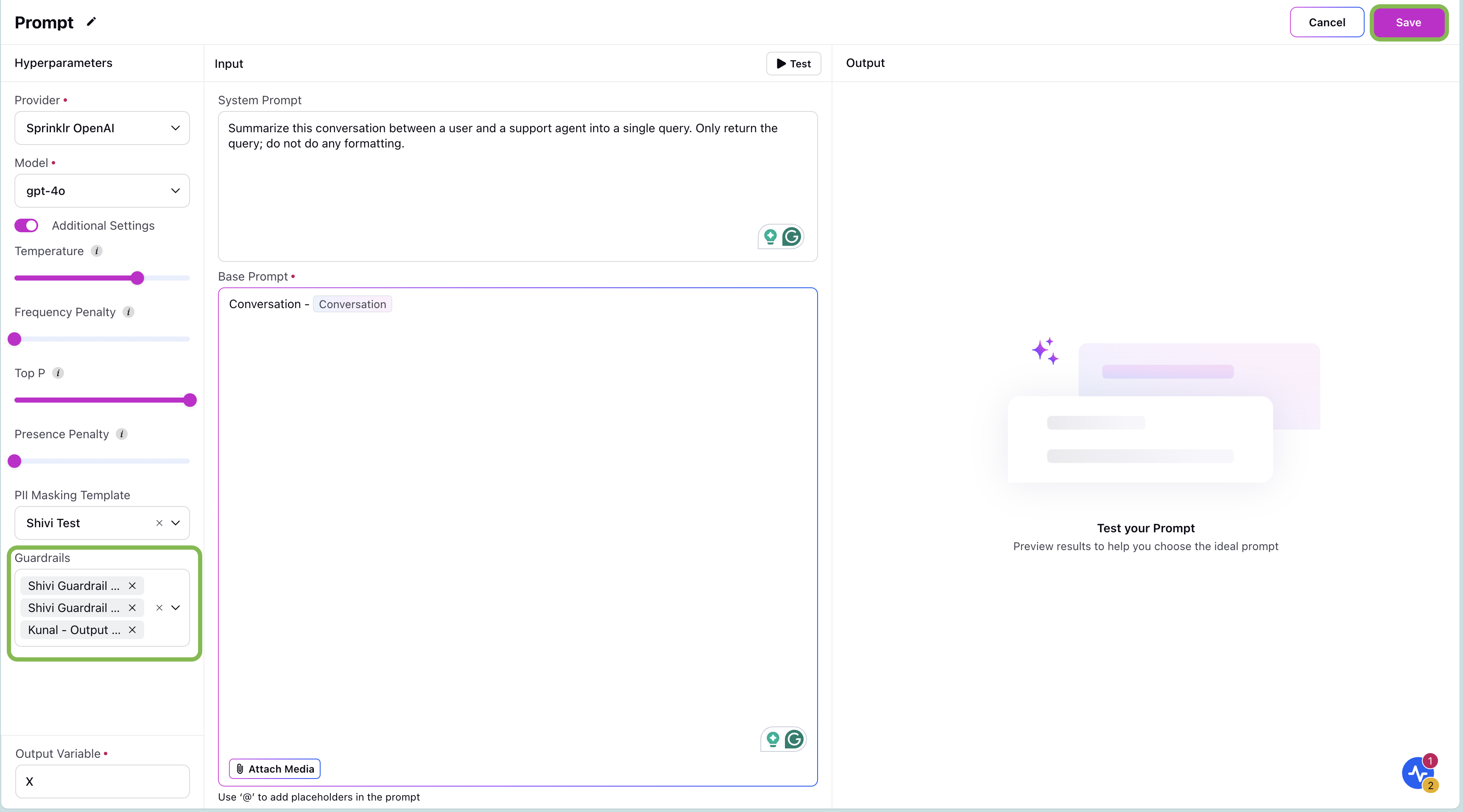

In the Settings pane, choose the Guardrails you want to apply.

– You can select one or more Guardrails to apply within a single prompt.

Click Save to apply your changes.

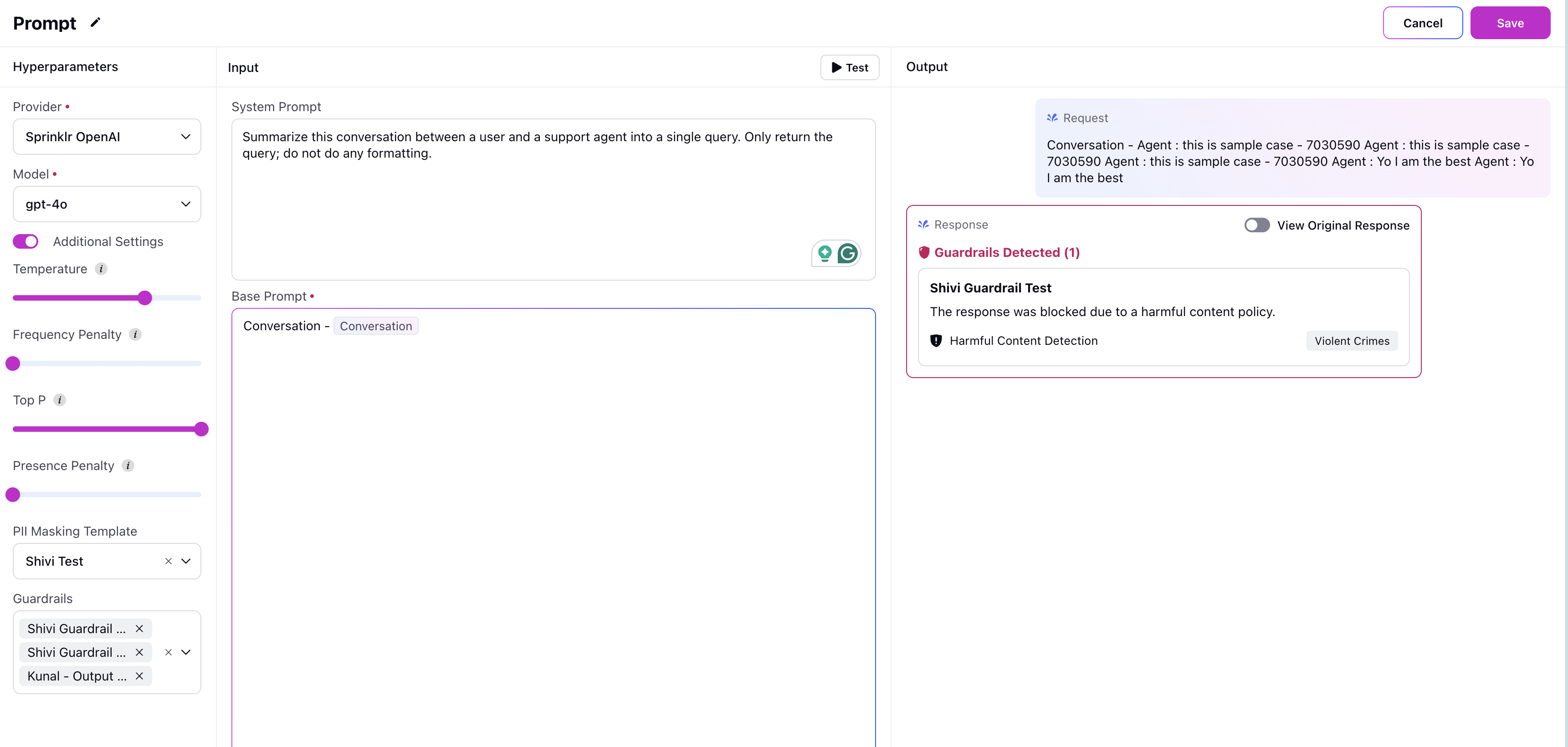

You can test the Prompt Node with Guardrails applied. If the system detects harmful content, an error message appears in the Output pane, as shown in the image above.

Tip: Applying multiple Guardrails to a prompt gives broader coverage and stricter control over AI-generated content.
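Conceptually, a Prompt Node with several Guardrails applied behaves like the following Python sketch: input checks run before the model (as the Prompt Guard description states), output checks run on the generated text (as the Protected Material description states), and the first detection replaces the output with an error. The classes, the `stage` field, the stand-in model, and the error-message format are all hypothetical and do not reflect how AI+ Studio is implemented or what its Output pane displays.

```python
from dataclasses import dataclass
from typing import Callable, List, Optional

# A check returns a short reason when it detects a violation, otherwise None.
Check = Callable[[str], Optional[str]]


@dataclass
class Guardrail:
    name: str
    stage: str  # "input" = checked before the model, "output" = checked after it
    check: Check


@dataclass
class NodeResult:
    output: Optional[str]
    error: Optional[str]


def _first_violation(text: str, guardrails: List[Guardrail], stage: str) -> Optional[str]:
    """Run every Guardrail for `stage` in order; return the first error message."""
    for g in guardrails:
        if g.stage == stage:
            reason = g.check(text)
            if reason:
                return f"Blocked by {g.name}: {reason}"
    return None


def run_prompt_node(user_input: str, model: Callable[[str], str],
                    guardrails: List[Guardrail]) -> NodeResult:
    # Input-stage Guardrails screen the request before the model sees it.
    error = _first_violation(user_input, guardrails, "input")
    if error:
        return NodeResult(output=None, error=error)
    output = model(user_input)
    # Output-stage Guardrails screen the generated text before it is returned.
    error = _first_violation(output, guardrails, "output")
    if error:
        return NodeResult(output=None, error=error)
    return NodeResult(output=output, error=None)


# Usage with a stand-in model and a toy input check.
def echo_model(prompt: str) -> str:
    return f"Echo: {prompt}"


guards = [Guardrail("Word Filter", "input",
                    lambda t: "mentions a password" if "password" in t.lower() else None)]
print(run_prompt_node("What is my password?", echo_model, guards).error)
# Blocked by Word Filter: mentions a password
print(run_prompt_node("Hello", echo_model, guards).output)  # Echo: Hello
```

Each additional Guardrail is one more check in the list, which is why combining several widens coverage without changing the rest of the flow.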

Guardrails in AI+ Studio provide a powerful and flexible way to enforce responsible AI behavior by detecting and blocking harmful or policy-violating content. By configuring harmful content types, word filters, and deployment-level controls, you can ensure your AI workflows remain safe, compliant, and aligned with organizational standards.