Why Social Media Moderation Matters in 2025 (And How Brands Can Do It Right)

Here’s the reality in 2025: Social media moderation is now risk management. One fake review, deepfake post, or rogue comment can spiral globally in hours, and take your brand’s reputation (and stock price) with it.

The issue? It’s not just the sheer volume — 5.31 billion users crank out content non-stop across platforms, regions, and languages. That velocity means risks multiply fast, especially for global brands.

And regulation is catching up. The EU’s Digital Services Act is triggering age‑verification pilots and live probes, and the UK’s Online Safety Act demands risk assessments and algorithmic governance, backed by fines of up to £18 million or 10% of qualifying worldwide revenue, whichever is greater. Meanwhile, a tsunami of AI-generated content (deepfakes and synthetic posts) is fueling misinformation, which has been found to spread about six times faster than factual content.

The solution? Treat moderation like infrastructure, not an afterthought.

This guide shows you how. It breaks down how leading enterprises scale social media moderation with the right AI-human balance, enforce governance, prove ROI, and build systems that actually work.

What is social media moderation and why brands must prioritize it

Social media moderation refers to the systematic governance of user-generated content across a growing spectrum of social platforms and formats, each with its own risks and rules.

Consider this:

- TikTok: A video-first, algorithm-driven firehose where trends, misinformation, and "algospeak" propagate in minutes.

- LinkedIn: Text-heavy with a professional tone, yet personal posts and subtle misinformation pose risks to brand credibility.

- X (formerly Twitter): A real-time commentary zone where conversations can spiral into a crisis if left unchecked.

- Instagram Stories, DMs, closed groups: Ephemeral or private by design, with little public visibility or audit trail, yet still capable of harboring harmful content.

Each platform poses different risks, which means a single moderation strategy won’t cut it. What works on LinkedIn won’t work on TikTok or inside a private group chat. You need AI tuned for video formats, human review for nuanced text posts, and clear governance protocols for harder-to-monitor spaces like DMs and Stories.

What this really means is that social media moderation has become an operational discipline, not just community management. It’s about managing brand interactions at scale, across different ecosystems, in real time.

Also read: Community Moderation: 5 Best Practices and Successful Strategy Tips

Importance of social media moderation

Here’s the thing: moderation is no longer about controlling conversations; it’s about controlling risk.

In 2025, social media failures don’t just trigger PR crises; they expose enterprises to legal penalties, operational disruption, and shareholder scrutiny.

Recent enforcement and public blow-ups reveal what’s at stake:

- The European Commission issued preliminary findings that TikTok breached the DSA’s advertising-transparency obligations, and has launched probes into its algorithms and age verification.

- TikTok was fined €530 million by Ireland’s Data Protection Commission for GDPR violations tied to transfers of European users’ data to China.

- Meta scrapped its U.S. fact-checking program, shifting to crowd-sourced “Community Notes” — a move EU regulators flagged as a potential DSA violation.

- Brands like Bud Light and Starbucks have suffered real financial hits tied to social media controversies: Bud Light saw a nearly 28% drop in U.S. sales after its partnership with a transgender influencer sparked a social media backlash and boycott. Similarly, Starbucks saw an $11 billion market-cap decline after a union-affiliated pro-Palestinian post went viral and sparked boycott calls.

These aren’t isolated PR headaches; they’re systemic risks that play out across four business-critical areas:

🚩 Regulatory risk: Fines, lawsuits, and market restrictions are on the table if brands violate content governance laws like the EU DSA, UK Online Safety Act, Singapore’s Online Safety Amendments, and India’s IT Rules.

🚩 Brand and reputation risk: Misinformation or hate speech can spiral into global headlines overnight, costing millions in crisis control and damaging long-term equity.

🚩 Stakeholder backlash: Failure to enforce ESG and DEI commitments online triggers employee protests, whistleblower leaks, and shareholder activism.

🚩 Operational fallout: Platforms penalize brands for weak moderation with content throttling, account suspensions, or ad bans, leading to workflow disruptions and governance questions from the board.

The bottom line? Moderation is a core pillar of brand safety, corporate governance, and regulatory compliance.

Good to know: Social Media Crisis Management: 6 Proven Strategies for 2025

How to build an AI-human hybrid social media moderation system

Pure automation moves fast, but it still fails in key areas that require human context, like understanding tone, satire, or coded language, or escalating serious issues in real time. A human-only model, meanwhile, can’t keep up with the scale or speed of today’s platforms.

The limitations of AI-only moderation systems:

- Cultural nuance and satire: AI often misclassifies sarcasm or fails to grasp region-specific language.

- Bias and coded language: Subtle discrimination or dog whistles can slip past detection algorithms.

- Over-enforcement: AI may overcorrect, flagging critical feedback or legitimate user content as violations.

- Escalation blind spots: AI lacks the judgment to know when to involve legal, PR, or crisis teams.

Learn More: AI in Social Media: How to Use it Wisely

That’s why top brands use hybrid models. You get the speed of AI, the accuracy of human insight, and the safety net of escalation. That equals stronger ROI, fewer legal hits, and better brand control. Trust & Safety experts affirm that “effective content moderation is a combination of humans and machines”.

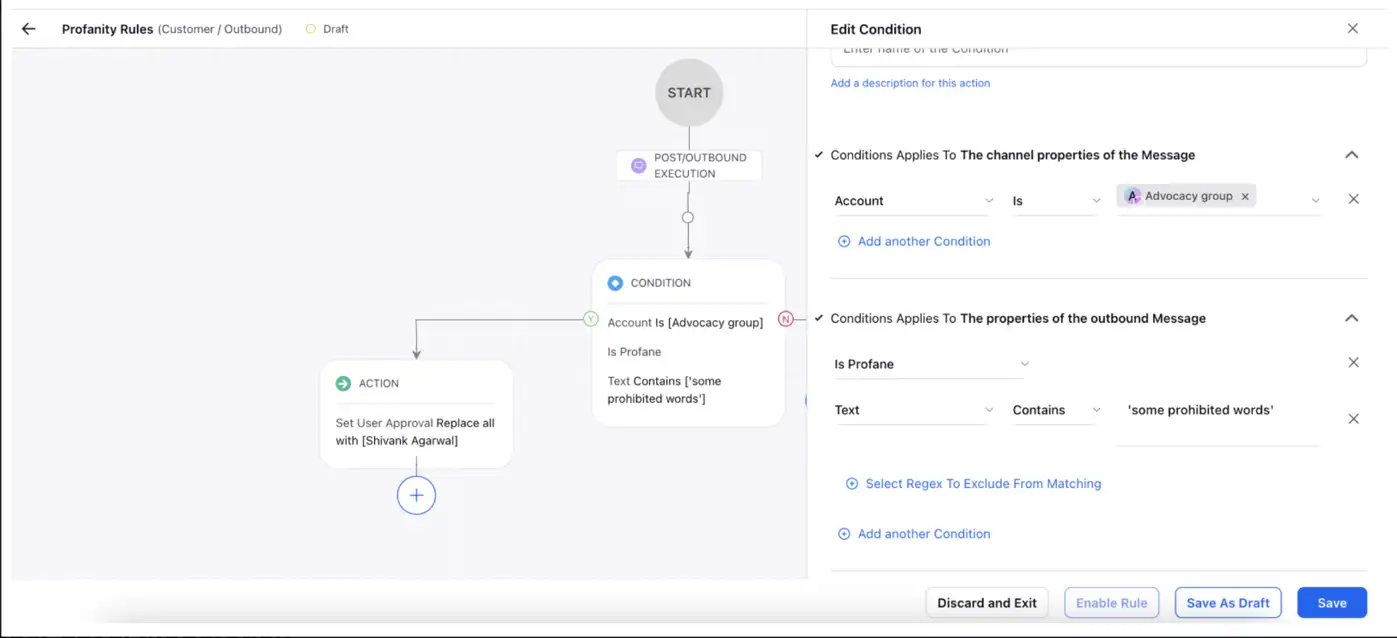

💡 Pro Tip: When it comes to effective content moderation, Sprinklr doesn’t miss. You can set up profanity filters, create custom escalation paths, and keep everything aligned with your brand’s policies, across every channel. It’s built to catch the stuff that slips through the cracks and keep moderation consistent at scale.

A three-tiered framework for hybrid social media moderation

A scalable, risk-aware moderation system needs three distinct layers — each designed to catch what the others can’t.

1. AI pre-screening

The first layer uses AI to review and filter content at scale, scanning in real time for clear-cut policy violations like hate speech, explicit imagery, or spam. These are automatically removed to reduce exposure and lighten the human load.

What this layer handles well:

- Obvious infractions with low ambiguity

- Real-time classification across languages and regions

- Pattern recognition and trend detection

How Sprinklr helps:

- Smart Response Compliance checks every outbound reply for profanity, bias, or off-brand tone — flagging anything that could escalate risk.

- Media OCR Compliance scans visual content (like memes or screenshots) to detect banned keywords hidden in images or video transcripts.

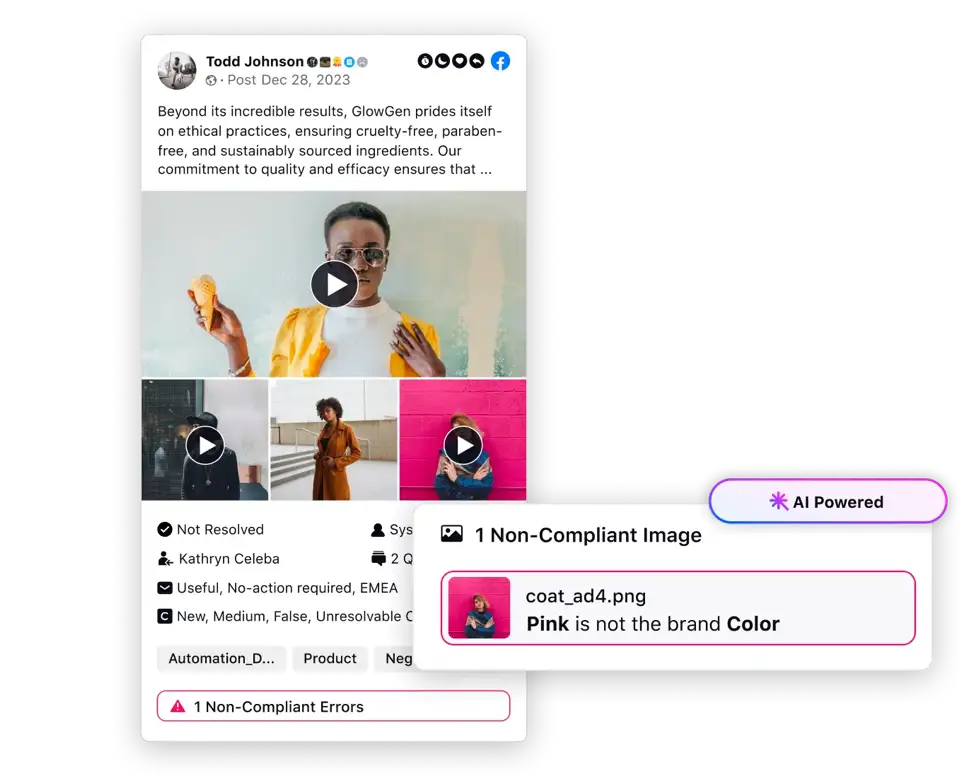

- Smart Approvals uses AI to assess social content quality pre-publish — catching NSFW images, low-res visuals, or missing brand elements before they slip through.

- Logo Detection verifies that brand assets are used correctly and alerts you when they’re misused externally.

- NSFW Detection flags inappropriate visual content and applies auto-blurring where needed — reducing manual exposure to harmful imagery.

- The Spam feature in Sprinklr’s social listening platform identifies and filters out irrelevant or malicious messages from listening data, helping teams focus on real signals.

Flagged content with potential gray areas is routed to the next layer for human review.
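The hand-off between the AI layer and the human layer is often a thresholding decision. The sketch below assumes a hypothetical classifier that returns a violation probability for each message; the `Scored` type, the `route` function, and the threshold values (0.95 and 0.60) are illustrative assumptions, not Sprinklr settings.

```python
from dataclasses import dataclass

# Illustrative thresholds (assumptions): tune them against your own labeled data.
AUTO_REMOVE_THRESHOLD = 0.95   # near-certain violations are removed automatically
HUMAN_REVIEW_THRESHOLD = 0.60  # gray-area scores go to human moderators


@dataclass
class Scored:
    text: str
    violation_score: float  # probability of a policy violation from a hypothetical AI classifier


def route(item: Scored) -> str:
    """Map a classifier score to one of three outcomes."""
    if item.violation_score >= AUTO_REMOVE_THRESHOLD:
        return "auto_remove"
    if item.violation_score >= HUMAN_REVIEW_THRESHOLD:
        return "human_review"
    return "allow"


queue = [
    Scored("Buy followers now, cheap link in bio", 0.99),
    Scored("This update is a joke, literally killing me", 0.72),
    Scored("Love the new release", 0.03),
]
for item in queue:
    print(route(item), "|", item.text)
```

Lowering the human-review threshold sends more borderline content to moderators, trading reviewer workload for fewer missed violations; raising the auto-remove threshold does the opposite for over-enforcement.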

2. Human moderation

AI can sort, but humans decide. This middle layer is where moderators bring cultural knowledge, empathy, and judgment to the table, especially for borderline cases that social media algorithms can’t reliably classify.

Human moderators handle:

- Satire, political or religious content, and local slang

- Sensitive brand-related complaints or crises

- Tone-based misfires like jokes that may read as threats

How Sprinklr helps:

- Sprinklr’s ad comment moderation lets brands manage ad comment workflows from a unified dashboard. You can set rules to hide, delete, route, or flag comments based on keywords or sentiment, helping human moderators make policy‑aligned decisions efficiently.

- Intuition Moderation under Sprinklr Social automatically labels inbound social messages as Engageable or Non-Engageable, helping agents focus on what really matters.

- AI Summarizer aggregates and categorizes high-volume comments by topic, sentiment, and urgency — flagging critical ones for human review.

- Response Quality, part of Customer Feedback Management, helps filter out low-quality or irrelevant open-text survey responses, so teams don’t waste time on noise.

- NSFW Detection and Media OCR Compliance also support human workflows by showing exactly where flagged risks appear — reducing guesswork and review time.

This layer ensures that moderation remains human, fair, and adaptable to evolving norms.
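Keyword- and sentiment-based comment rules, like the hide, delete, route, and flag options described above, can be modeled as an ordered rule list where the first matching rule decides the action. This is a hypothetical sketch, not Sprinklr’s rule engine: the `Rule` class, the keyword lists, and the sentiment scale (-1.0 to 1.0, assumed to come from an upstream sentiment model) are all assumptions.

```python
import re
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class Rule:
    action: str  # "delete", "hide", "route", or "flag"
    keywords: set[str] = field(default_factory=set)
    # Match when sentiment is at or below this value; None disables the sentiment check.
    max_sentiment: Optional[float] = None

    def matches(self, text: str, sentiment: float) -> bool:
        words = set(re.findall(r"[a-z']+", text.lower()))
        keyword_hit = bool(self.keywords & words)
        sentiment_hit = self.max_sentiment is not None and sentiment <= self.max_sentiment
        return keyword_hit or sentiment_hit


# Hypothetical rules, ordered from most to least severe; the first match wins.
RULES = [
    Rule("delete", keywords={"giveaway", "crypto"}),          # spam patterns
    Rule("hide", keywords={"idiot", "idiots", "stupid"}),     # insults
    Rule("route", keywords={"refund", "broken", "damaged"}),  # customer care issues
    Rule("flag", max_sentiment=-0.6),                         # strongly negative tone
]


def moderate(text: str, sentiment: float, rules: list[Rule]) -> str:
    """Return the action of the first matching rule, or "allow" if none match."""
    for rule in rules:
        if rule.matches(text, sentiment):
            return rule.action
    return "allow"


print(moderate("My order arrived broken", -0.4, RULES))
```

Ordering by severity keeps the behavior predictable: a spam comment with negative sentiment is deleted rather than merely flagged, and anything no rule catches falls through to "allow" for normal handling.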

3. Legal and communications escalation

Some content carries legal, regulatory, or reputational consequences that require more than moderation: it demands a coordinated response. This third layer is built to identify and handle high-stakes situations swiftly and with authority.

Escalation is triggered by:

- Threats of violence or self-harm

- Regulatory violations (e.g., HIPAA, GDPR, political content laws)

- Sensitive issues involving discrimination or public outrage

- Viral brand-damaging content or high-profile user backlash

How Sprinklr helps:

- Brand Reputation Dimensions track key risk areas like ethics, legal, labor, or financial performance, so issues don’t get lost in the noise.

- Intuition Moderation in Sprinklr Insights categorizes high volumes of listening data and filters for relevance, so analysts can zoom in on real threats, not background chatter.

- Smart Approvals and Smart Response Compliance also generate audit trails that support internal compliance, governance reporting, and continuous improvement.

This layer connects moderation to broader enterprise risk management.

Also read: How Generative AI Can Help Brands Better Anticipate Risks

Success story: How 3M scaled content moderation across 70+ countries

3M doesn’t do anything halfway — not in product innovation, and definitely not in customer experience. But before Sprinklr, social moderation was fragmented across full-time teams, contractors, and agencies. Inconsistent processes meant regional teams struggled to scale, response times lagged, and valuable conversations slipped through the cracks.

Sprinklr helped 3M unify its global moderation model across organic and paid, with one integrated system that supports 21 languages and connects 500+ social accounts and thousands of ad accounts. The result?

- 90% reduction in case response time

- 75% reduction in SLA breaches

- Real-time escalation, insights, and optimization

AI-powered translations replaced weeks-long delays from external agencies. Ad comment moderation tied back to campaigns to drive measurable ROI. Sprinklr’s moderation team even helped surface UGC to replace costly content production and build community faster.

This is what enterprise-grade, scalable moderation looks like.

When to automate vs. manually review vs. escalate

Choosing between automation, manual review, and escalation is critical for social media moderation. The following guidelines can help:

| Content type | Recommended action | Ownership level |
|---|---|---|
| Spam, explicit content | Auto-delete | AI |
| Borderline hate, insult | Manual review | Human moderator |
| Threats, self-harm content | Immediate escalation | Legal/comms team |
| Satire, sensitive topics | Contextual interpretation | Human moderator |
| Criticism or complaints | Allow/review unless harmful | Human moderator + CRM |

This mix ensures you protect the brand while respecting free speech and nuance.
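Because the table maps a content category to an action and an owner, it can be kept as data that routing code reads, so policy changes happen in one place. A minimal sketch, assuming category labels arrive from an upstream classifier or moderator tagging step; the label names, the `decide` function, and the fallback to manual review for unknown categories are hypothetical choices.

```python
from typing import NamedTuple


class Decision(NamedTuple):
    action: str
    owner: str


# The decision table above, encoded as data. Category labels are assumptions.
DECISION_TABLE = {
    "spam": Decision("auto_delete", "AI"),
    "explicit": Decision("auto_delete", "AI"),
    "borderline_hate": Decision("manual_review", "Human moderator"),
    "insult": Decision("manual_review", "Human moderator"),
    "threat": Decision("immediate_escalation", "Legal/comms team"),
    "self_harm": Decision("immediate_escalation", "Legal/comms team"),
    "satire": Decision("contextual_interpretation", "Human moderator"),
    "sensitive_topic": Decision("contextual_interpretation", "Human moderator"),
    "criticism": Decision("allow_or_review", "Human moderator + CRM"),
    "complaint": Decision("allow_or_review", "Human moderator + CRM"),
}

# Unknown categories default to human review rather than automatic action.
DEFAULT = Decision("manual_review", "Human moderator")


def decide(category: str) -> Decision:
    """Look up the recommended action and owner for a content category."""
    return DECISION_TABLE.get(category, DEFAULT)


print(decide("threat"))
```

Defaulting unknown categories to a human keeps new or mislabeled content from being auto-deleted.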

Tech stack for social media moderation

It’s tempting to think you need a dozen tools stitched together to moderate content at scale. AI for detection, dashboards for workflows, APIs for integrations. But here’s the truth: complexity kills speed. And the more tools you bolt on, the more risk you actually create.

What you really need are three core capabilities — delivered in a single, unified platform:

- AI that sees what matters: Sprinklr AI (which includes Intuition Moderation, Sentiment Detection, Smart Compliance) handles real-time classification, content filtering, sentiment analysis, and compliance checks across languages and media types — so your team isn't overwhelmed with noise.

- Workflows that keep teams in sync: Custom dashboards centralize flagged content, AI‑powered routing, custom policy workflows, and audit logs, all within one interface. Teams can triage and escalate cases efficiently, without hopping between tools.

- Built-in integrations that close the loop: Sprinklr connects natively with CRMs, CX platforms, legal systems, analytics tools, and social APIs. Collaboration between care, compliance, and marketing happens in-platform — so risky content automatically escalates and gets resolved in context.

One platform. Real-time governance. No tech sprawl.

💡 Pro Tip: Sprinklr Social helps you stay compliant before content goes live — using AI to auto-flag tone, visuals, and policy issues at the pre-publish stage. This reduces reputational risk and ensures alignment with internal guidelines at scale.

The hidden costs of poor content moderation on social media

Poor moderation isn’t just a social team problem. When bad content slips through, or good content gets wrongly flagged, the financial, legal, and operational fallout ripples across the business. Here are six hidden cost centers leaders often overlook:

1. Crisis fallout from delayed takedowns

Leaving harmful content up for even a few hours can ignite PR disasters, shareholder pressure, lawsuits, or regulatory investigations.

- Impact: Revenue lost to damage control with every major crisis.

- Risk: Shareholder pressure, legal exposure, and long-term trust erosion.

- Blind spot: Lack of automated escalation or takedown triggers.

2. Revenue loss from rejected or suppressed content

Policy violations during a campaign can trigger ad disapprovals that halt performance instantly.

- Impact: Damaged ROI, stalled funnels, suppressed reach.

- Risk: Missed targets, weakened agency relationships.

- Blind spot: Few teams pre-flight content against evolving platform rules.

3. Moderator burnout and attrition

Consistent exposure to toxic content wears teams down fast.

- Impact: High annual attrition in trust and safety roles.

- Risk: Talent loss, HR liabilities and wellness claims.

- Blind spot: Few organizations link exposure metrics to staffing and retention planning.

4. Manual rework and slow workflows

Lack of automation causes repetitive review, delayed approvals, and missed deadlines.

- Impact: Several hours lost each month to duplicated effort across teams.

- Risk: Campaign delays and resource drain.

- Blind spot: Escalation paths are often informal, unclear, or undocumented, causing missed deadlines and inconsistent policy application.

5. Regulatory exposure from delayed or mishandled flags

Regimes like the EU’s DSA allow fines of up to 6% of global turnover for non-compliance. India and Brazil now enforce strict takedown obligations too: India’s IT Rules require removal within 24–36 hours of notice, while Brazil’s Supreme Court mandates proactive takedowns of illegal posts even without court orders. Miss the deadline, and you could face serious fines.

- Impact: Financial penalties, formal regulatory action, platform de-ranking.

- Risk: Investigations, platform demotion, loss of legal safe harbor.

- Blind spot: Most systems aren’t integrated with compliance-driven takedown timers.
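A compliance-driven takedown timer can start as simply as computing a deadline from the moment a notice arrives and tracking the time left. The sketch below is hypothetical: the notice types and their hour values are assumptions loosely based on the 24–36 hour range cited above, not a statement of what any specific regulation requires.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical SLA windows in hours, loosely based on the 24–36 hour range cited above.
SLA_HOURS = {
    "government_order": 36,
    "priority_complaint": 24,
}


def takedown_deadline(notice_received: datetime, notice_type: str) -> datetime:
    """Return the time by which the content must be removed."""
    return notice_received + timedelta(hours=SLA_HOURS[notice_type])


def time_remaining(notice_received: datetime, notice_type: str, now: datetime) -> timedelta:
    """Time left before the deadline; a negative value means the SLA was missed."""
    return takedown_deadline(notice_received, notice_type) - now


received = datetime(2025, 3, 1, 9, 0, tzinfo=timezone.utc)
now = datetime(2025, 3, 2, 15, 0, tzinfo=timezone.utc)
print(takedown_deadline(received, "government_order"))      # 2025-03-02 21:00:00+00:00
print(time_remaining(received, "government_order", now))    # 6:00:00
```

Using timezone-aware timestamps avoids off-by-hours errors when notices, moderators, and regulators sit in different time zones.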

6. Platform penalties & brand safety risks

Poor content oversight can lead to algorithmic downgrades, demonetization, or shadowbans.

- Impact: Lost ad spend, diminished visibility, and monetization hits when content is flagged.

- Risk: Reduced market reach, costly performance impact.

- Blind spot: Metrics like ad quality scores and trust signals usually go unmonitored.

Bad moderation is a silent tax on growth. When left unchecked, it chips away at revenue, brand equity, compliance, and team morale. Building moderation into your governance model is essential for resilient, trust-driven growth.

Also read: How to Ensure Brand Governance in Social Media Advertising

How enterprise teams manage social media moderation workflows

In most large organizations, moderation tends to break down not because teams aren’t doing their jobs, but because no one’s sure who owns what. Legal points to Ops. Ops loops in Comms. Marketing waits on Risk. Meanwhile, flagged content just sits there.

Without a shared governance model, three patterns emerge:

- Policy drift: Different teams interpret the same rule differently across markets or campaigns. That inconsistency can lead to regulatory risk or public backlash.

- Escalation confusion: No clear chain of command means sensitive content bounces between departments. Reviews stall. Delays mount.

- Reactive layering: Once a crisis erupts, Legal, Risk, and Comms scramble, usually too late.

The problem isn’t intent. It’s structure.

What fixes this? Structure and accountability. ⬇️

1. Introduce a cross-functional RACI matrix

A RACI (Responsible, Accountable, Consulted, Informed) model assigns clear roles for each content category and moderation action. This removes ambiguity across different teams. Principles to follow:

- Each task has at least one R and exactly one A

- Keep each matrix to roughly 8–10 core tasks

Example RACI for moderation:

| Task | Marketing | Legal | CX/Ops | Risk | IT/Platform |
|---|---|---|---|---|---|
| Review flagged campaign ads | R | A | I | C | I |
| Misinformation content takedown | C | A | R | C | I |
| Crisis post-escalation | I | A | R | C | I |
| Whistleblower or internal-issue logs | I | A | C | R | I |
| Platform-compliance readiness | R | C | I | C | A |

A living RACI ensures consistency across regions and content types, and keeps roles updated as platforms or policies change.
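The RACI principles above are mechanical enough to check automatically whenever the matrix changes. A minimal sketch, assuming the matrix is stored as a plain dictionary; the data layout, the `MAX_TASKS` limit, and the `validate` helper are hypothetical. It encodes the example matrix and reports any task that breaks the rules.

```python
TEAMS = ("Marketing", "Legal", "CX/Ops", "Risk", "IT/Platform")

# The example RACI matrix, one role code per team in TEAMS order.
ROWS = {
    "Review flagged campaign ads": "R A I C I",
    "Misinformation content takedown": "C A R C I",
    "Crisis post-escalation": "I A R C I",
    "Whistleblower or internal-issue logs": "I A C R I",
    "Platform-compliance readiness": "R C I C A",
}
RACI = {task: dict(zip(TEAMS, codes.split())) for task, codes in ROWS.items()}

MAX_TASKS = 10  # upper end of the ~8–10 task guideline


def validate(matrix: dict[str, dict[str, str]]) -> list[str]:
    """Return a list of rule violations; an empty list means the matrix passes."""
    problems = []
    if len(matrix) > MAX_TASKS:
        problems.append(f"{len(matrix)} tasks exceeds the {MAX_TASKS}-task guideline")
    for task, roles in matrix.items():
        codes = list(roles.values())
        if codes.count("R") < 1:
            problems.append(f"{task}: no Responsible (R)")
        if codes.count("A") != 1:
            problems.append(f"{task}: {codes.count('A')} Accountable (A) roles, expected exactly 1")
    return problems


print(validate(RACI))  # []
```

Running the same check on a row coded "I A A C I" would report two problems: no Responsible role and two Accountable roles.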

2. Form a moderation governance council

To close the accountability loop, enterprises should formalize a moderation governance council. Composed of leaders from Legal, Risk, Marketing, IT, and CX, this group:

- Sets thresholds and response protocols

- Updates policies as platform rules and regulations change

- Owns escalation for high-risk content, acting before issues go public

- Maintains the RACI matrix as a live governance artifact

A unified workflow built on a real-time RACI model and governed by a cross-functional council ensures consistent decisions, faster response times and full audit traceability.

Conclusion

In 2025, social media moderation is about protecting your brand, your people, and your ability to operate in public. With governments tightening rules and reputational blowups becoming weekly news, brands can’t afford to wing it anymore. AI helps, sure. But without real systems, real accountability, and real-time oversight? It’s just guesswork at scale.

Sprinklr changes that. It helps you define custom moderation rules, automatically route escalations based on role-based permissions, and track every action through a searchable audit trail. And because it all runs on a single platform, your legal, CX, risk, and comms teams can move in lockstep — no Slack threads or “who owns this?” confusion. With robust integrations into broader systems and third-party workflows, moderation becomes part of your governance backbone, not a standalone process.

If moderation still feels like a side task, it’s time to treat it like the enterprise function it actually is. Request a demo to see how Sprinklr helps you make that shift, confidently and at scale.

Frequently Asked Questions

Key 2025 regulations include the EU Digital Services Act (DSA), India’s IT Rules 2021 (amended) and several upcoming US state-level laws. They call for faster takedowns, transparency in moderation decisions, and auditability for high-risk platforms.

Clear, platform-agnostic policies grounded in principles, like protecting speech while eliminating hate, misinformation, and legal risk, are critical. Apply consistent thresholds for action across customer comments, ads, and UGC. Human oversight in ambiguous cases helps preserve tone and intent while removing harmful content.

Enterprise-grade AI-native platforms like Sprinklr Social, Brandwatch, and TrustLab offer AI-powered text and image moderation, keyword and sentiment logic, plus workflow automation. These tools flag suspect content and route it through human review or escalation queues, reducing manual load and speeding resolution.

Highly regulated or public-facing sectors face the greatest fallout from moderation failures, including finance, healthcare, government, retail and CPG, and tech and telecommunications industries. These sectors are exposed to risks like regulatory fines, brand trust erosion, customer harm, and legal liability from unmoderated or mismanaged content.

Yes, AI models can be fine-tuned for brand-safe, policy-aligned moderation. Labeled data, scenario-based training, and human-in-the-loop feedback are some ways to do so.