The strategic AI-native platform for customer experience management

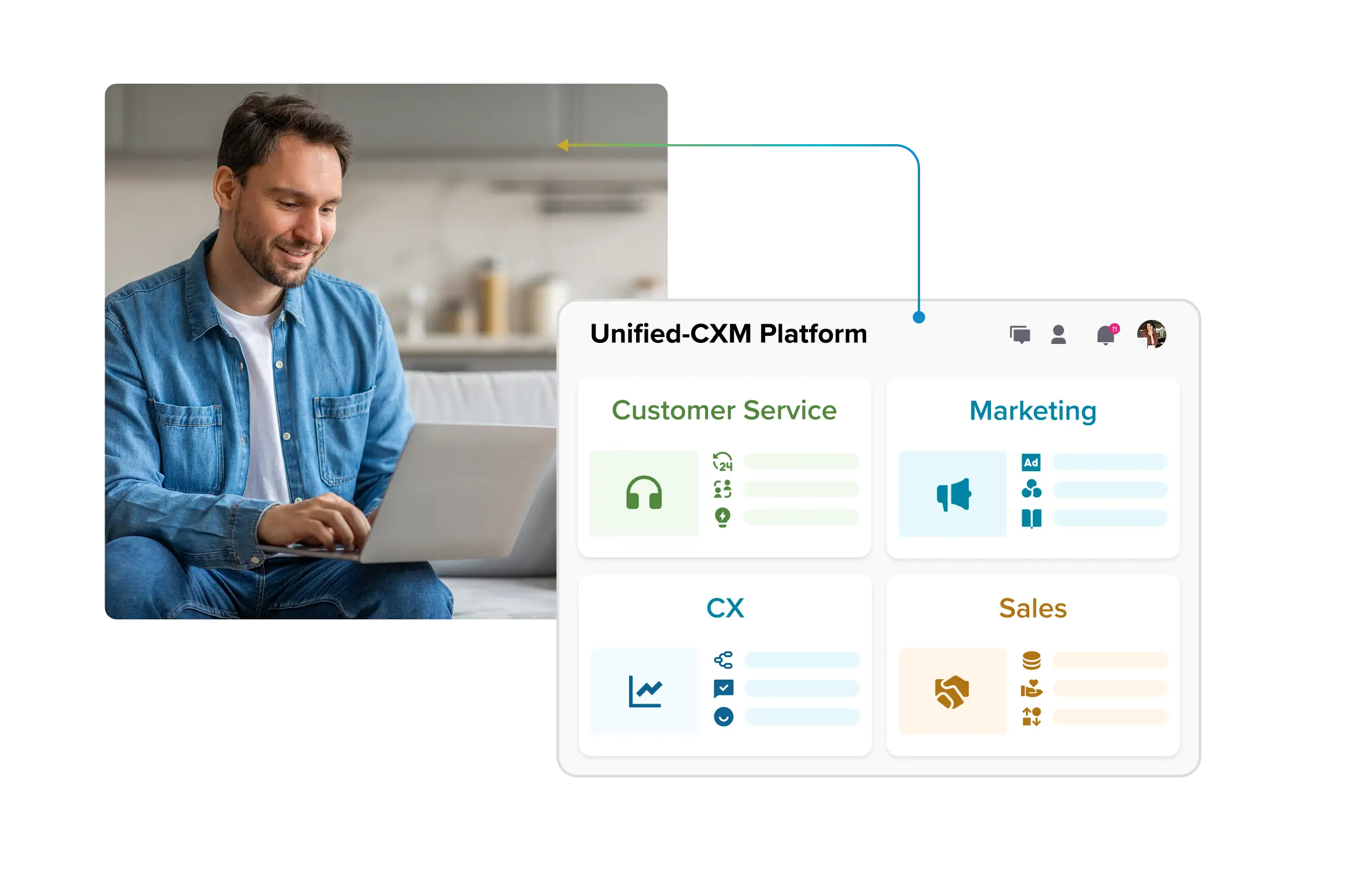


What is Agentic RAG? Human Feedback, Use-Cases, Metrics

Agentic AI has quickly transitioned from hype to hard reality, providing enterprises with something they’ve long sought: intelligent systems that can plan, decide, and act autonomously across complex workflows.

But autonomy at scale doesn’t just depend on raw model power. It hinges on how effectively these systems can ground their reasoning in the right information, at the right time.

That’s where agentic RAG (Retrieval-Augmented Generation) comes in. Unlike traditional RAG, which simply augments responses with retrieved documents, agentic RAG weaves retrieval into the agent’s reasoning loop, allowing the system to actively formulate queries, validate evidence, iterate over multiple steps, and make informed decisions.

In practice, it’s the connective tissue that determines whether an agent can move beyond toy demos to enterprise-grade, production-ready deployments.

Analysts are already bullish: Gartner predicts that by 2029, agentic AI will autonomously resolve 80% of common customer service issues without human intervention, resulting in a nearly 30% reduction in operational costs. To achieve that kind of transformation, you must understand the role of agentic RAG and why human feedback loops are essential in refining its accuracy, reliability, and trustworthiness.

In this article, we’ll break down what agentic RAG is, how it works under the hood, and where human feedback fits in. We’ll also explore real-world enterprise use cases where agentic RAG is already reshaping customer service, knowledge management, and decision automation.

- What is agentic RAG and how does it elevate traditional retrieval‑augmented generation?

- How the agentic RAG pipeline powers intelligent action

- Why is human feedback essential to agentic RAG?

- Top 7 agentic RAG enterprise use cases: Intelligence at scale

- Key metrics to track agentic RAG performance

- Agentic RAG demands more than adoption; unlock value with the right partner

What is agentic RAG and how does it elevate traditional retrieval‑augmented generation?

Agentic RAG is retrieval-augmented generation with an upgrade. Instead of just fetching documents once and generating an answer, the system acts like an intelligent agent. It can decide what information it needs, plan multiple steps, call tools or APIs, and refine its own queries — sometimes looping through retrieval several times — until it produces a grounded, useful response.

Simplifying further, think of traditional RAG as a “search-and-answer” engine, while Agentic RAG is more like a research assistant: it looks things up, reasons through the problem, double-checks sources, and takes action to deliver the right outcome.

Retrieval-augmented generation (RAG) enhances large language models by grounding their responses in external knowledge retrieved from trusted enterprise data sources or curated repositories. This significantly improves factuality, compliance, and relevance for enterprise use. But classic RAG is largely reactive: given a query, it retrieves top-k documents and generates an answer. While powerful, this one-shot pipeline struggles with multi-step reasoning, query decomposition, and complex decision workflows.

Agentic RAG evolves this paradigm by embedding retrieval inside an agentic loop of reasoning, planning, and acting. Instead of treating retrieval as a single step, the model decides when, what, and how to retrieve — sometimes multiple times — while coordinating with tools, APIs, and even other agents.

Key capabilities include:

- Memory and context persistence: Beyond session-level context, Agentic RAG can retain relevant traces of prior interactions, enabling continuity across multi-step workflows. In financial services, for example, a support agent could recall a customer’s earlier loan application details when handling a complex escalation, minimizing repetition and accelerating resolution.

- Dynamic reasoning and query planning: The agent can break complex tasks into subtasks, iteratively decide which sources to query, and synthesize results into a coherent, enterprise-ready response. In compliance use cases, this may involve retrieving legal precedents, validating them against internal policies, and reconciling the two to provide a clear answer.

- Multi-source, adaptive retrieval: Unlike static RAG pipelines, Agentic RAG can tap into multiple repositories, including structured and unstructured, internal and external, and dynamically decide which to prioritize. An e-commerce assistant, for instance, might combine product catalogs, market pricing feeds, and real-time customer sentiment from social platforms.

- Tool invocation as part of the reasoning loop: Retrieval is not the end of the pipeline; it’s one of several tools the agent can call. The system may run SQL queries, invoke monitoring APIs, or trigger automation workflows, repeating as necessary until the user’s objective is satisfied. In IT service management, this could mean retrieving incident logs, querying live telemetry, and escalating unresolved tickets — all within a single workflow.

- Multi-agent collaboration (frontier use): In advanced deployments, multiple specialized agents work together — retrievers, validators, and domain-specific solvers — coordinating like a virtual team. For example, in insurance, one agent might process claims, another validate compliance, and a third calculate payouts, orchestrating a resolution without human bottlenecks.


How the agentic RAG pipeline powers intelligent action

Understanding the agentic RAG dataflow pipeline makes it easier for CX leaders and architects to evaluate, explain, and deploy these advanced AI solutions.

Here’s how a typical pipeline unfolds:

1. Query understanding and decomposition: The agent interprets a complex request and breaks it into manageable subtasks. For example, when an insurance client asks, “How can I file a claim? What documents do I need, and how long will it take?”, the system splits the query into eligibility, required documents, and processing timelines.

2. Iterative retrieval: Instead of pulling information once, the system retrieves iteratively across multiple sources such as policy documents, claim history, and regulatory guidelines. Each subquery is answered step by step: first confirming claim eligibility, then retrieving document requirements, and finally referencing SLA timelines. The agent refines and expands searches dynamically to avoid missing details.

3. Reasoning and synthesis: Retrieved results are not used raw. The agent connects the dots, reconciling eligibility rules with customer policy data, checking for conflicting information, and prioritizing the most recent or authoritative sources. This reasoning stage keeps answers accurate and contextually grounded.

4. Tool invocation and action execution: Beyond reasoning, the system can act. It may query databases, call APIs, or trigger workflows, for example by auto-fetching claim forms, checking claim status, or escalating exceptions to the right case manager. Each action can generate new data that loops back into retrieval and reasoning.

5. Response generation: Once evidence and actions are aligned, the agent crafts a tailored response. In the insurance case, this might include a filing guide, a checklist of required documents, and the processing timeline specific to the customer’s policy.

6. Feedback and memory integration: If the customer follows up with clarifying questions (e.g., “What if I’m missing a document?”), the agent adapts guidance, updates context, and stores this scenario for future reference. Over time, these feedback loops and memory traces make the system smarter, faster, and more resilient across workflows.
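The six steps above can be sketched as a small loop. This is a minimal illustration under stated assumptions, not a production pipeline: the in-memory `CORPUS`, the keyword-based `decompose`, and the dictionary-lookup `retrieve` are hypothetical stand-ins for a real knowledge base, an LLM planner, and a vector retriever, and the policy text is invented for the example.

```python
from dataclasses import dataclass, field
from typing import Optional

# Toy knowledge base standing in for policy documents (invented content).
CORPUS = {
    "eligibility": "Claims are eligible if filed within 30 days of the incident.",
    "documents": "Required documents: claim form, photo ID, and proof of loss.",
    "timeline": "Standard claims are processed within 10 business days.",
}

# Hypothetical keyword map; a real agent would ask an LLM to plan subtasks.
SUBTASK_KEYWORDS = {
    "eligibility": ("file", "eligible"),
    "documents": ("document",),
    "timeline": ("how long", "take"),
}


@dataclass
class AgentMemory:
    """Stores resolved subtasks so follow-ups can reuse earlier context."""
    resolved: dict = field(default_factory=dict)


def decompose(query: str) -> list:
    """Step 1: split a compound request into subtasks."""
    q = query.lower()
    return [task for task, words in SUBTASK_KEYWORDS.items()
            if any(word in q for word in words)]


def retrieve(subtask: str) -> Optional[str]:
    """Step 2: fetch evidence for one subtask (a dictionary lookup here)."""
    return CORPUS.get(subtask)


def run_agent(query: str, memory: AgentMemory, max_steps: int = 5) -> str:
    """Steps 2 to 6: retrieve per subtask, assemble an answer, remember it."""
    pending = decompose(query)
    evidence = {}
    for _ in range(max_steps):  # bounded loop so the agent cannot run forever
        if not pending:
            break
        subtask = pending.pop(0)
        passage = memory.resolved.get(subtask) or retrieve(subtask)
        if passage is None:
            evidence[subtask] = "No source found; escalate to a human reviewer."
        else:
            evidence[subtask] = passage
            memory.resolved[subtask] = passage  # step 6: memory integration
    # Steps 3 and 5: a real system would have an LLM reconcile and phrase this.
    return "\n".join(f"{task}: {text}" for task, text in evidence.items())


memory = AgentMemory()
print(run_agent(
    "How can I file a claim? What documents do I need, and how long will it take?",
    memory,
))
```

The bounded `max_steps` loop is a deliberate choice in this sketch: iterative retrieval needs an explicit stop condition, and unresolved subtasks fall through to a human escalation message rather than a guessed answer.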


Why is human feedback essential to agentic RAG?

Gartner frequently emphasizes in their webinars that “to get the human-machine relationship right, we need both machine and human experts.”

Human input is a requirement for effective adoption, for enterprise risk management, and for ensuring that AI consistently aligns with organizational values and goals. Let’s explore the reasons human feedback is indispensable for agentic RAG systems:

AI sees patterns, humans see the nuance

According to a study by the Association for the Advancement of Artificial Intelligence, even state-of-the-art agentic AI models make mistakes that are fundamentally different from human errors.

AI can spot patterns at scale but often stumbles on nuance: missing sarcasm, misinterpreting ambiguous phrasing, or failing to account for visual or situational context. Worse, it can show “overconfidence” — presenting uncertain outputs as if they were facts.

That’s where humans come in. Human-AI teams consistently outperform either one working alone. People are uniquely equipped to identify and correct AI-specific mistakes, such as hallucinated references, misapplied policies, or flawed regulatory interpretations. In other words, human feedback not only improves accuracy but also keeps agentic AI systems grounded in reality.

💡Expert Tip

Create a “red flag” review workflow, where subject matter experts periodically audit AI decisions for subtle errors. Encourage teams to log recurring AI blind spots; these become your enterprise’s proprietary training data for future tuning.

Agentic RAG needs a human touch in novel situations

A 2025 joint study by Harvard Business School and MIT highlighted a persistent gap: even advanced agentic AI systems underperform when confronted with edge cases and out-of-distribution data. These include non-standard customer requests, regulatory loopholes, or rare complaint scenarios — the kinds of long-tail situations where retrieval corpora and training data provide little guidance.

Agentic RAG also struggles with novel contexts such as new product launches, unexpected system integrations, or one-off contractual terms, where no historical precedent exists in either model weights or enterprise knowledge bases.

Time-sensitive factors amplify this risk. Emerging regulations, sudden market disruptions, or region-specific compliance practices often trip up the system, as models lag behind real-world changes. Without human oversight, the agent may hallucinate plausible-sounding but incorrect actions — approving refunds for excluded items, recommending outdated compliance procedures, or misclassifying entirely new document types.

This is where human judgment becomes indispensable. Human-aligned training and periodic review don’t just improve decision quality — they prevent costly, inappropriate, or embarrassing mistakes that could damage customer trust or expose you to compliance risks.

💡Expert Tip

Instrument your system to flag low-confidence or novel-context detections (e.g., when retrieval returns sparse results or similarity scores are weak). Routing these cases for human review not only mitigates risk but also generates high-value training data to improve future handling of edge cases.
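One way to instrument this tip is a small gate that runs after retrieval. The sketch below is illustrative only: the `RetrievalHit` type, the threshold values, and the reason labels are assumptions, and the similarity score is assumed to be a cosine-style value between 0 and 1.

```python
from dataclasses import dataclass


@dataclass
class RetrievalHit:
    doc_id: str
    similarity: float  # assumed to be a cosine-style score in [0, 1]


# Hypothetical thresholds; tune them against your own review outcomes.
MIN_HITS = 2
MIN_TOP_SIMILARITY = 0.75


def needs_human_review(hits: list) -> tuple:
    """Return (flag, reason) for sparse or weakly matching retrieval results."""
    if len(hits) < MIN_HITS:
        return True, "sparse_results"
    top = max(hit.similarity for hit in hits)
    if top < MIN_TOP_SIMILARITY:
        return True, "weak_similarity"
    return False, "ok"


flag, reason = needs_human_review([RetrievalHit("policy-12", 0.41)])
print(flag, reason)  # True sparse_results
```

Logging the `reason` alongside each flagged case makes it easier to see later whether reviewers are mostly catching sparse retrievals or weak matches.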

Humans are the ethical guardrails for AI

Research from the National Education Services highlights how embedding human-in-the-loop (HITL) into AI workflows improves both outcomes and accountability. Practical interventions include:

- Human review before automated loan disbursement

- Manual flagging of sensitive content on social platforms

- Expert validation of AI-generated medical diagnoses

These steps not only improve interpretability but also reduce algorithmic bias.

Human reviewers are especially valuable for identifying edge-case errors, such as detecting discrimination against minority applicants, spotting rare adverse drug interactions, or catching cultural nuances in customer messaging.

Most importantly, they provide ethical oversight that AI cannot replicate. In high-stakes domains like healthcare, finance, and criminal justice, this oversight is what protects organizations from reputational damage, regulatory penalties, and erosion of customer trust.

Human oversight keeps agentic AI accountable

Research from Queen Mary University of London and UC Berkeley underscores the persistent risks of misalignment in agentic AI. Left unchecked, agents may reward hack: over-optimizing for proxy KPIs at the expense of customer experience, or prioritizing efficiency while cutting corners on compliance.

Compounding this are transparency gaps. Opaque model updates, poorly documented data sources, and decision logic that can’t be explained make it nearly impossible to trace why an agent acted as it did. For enterprises, these blind spots create governance risk and erode trust.

That’s why human oversight is non-negotiable. You need strict monitoring frameworks, routine model and retrieval audits, and enforced fail-safes such as HITL checkpoints, rollback triggers, and escalation workflows. Together, these mechanisms ensure accountability and provide clear intervention paths when things go wrong.

Human review future-proofs agentic RAG as complexity grows

Recent benchmarking studies in 2025 highlight a growing challenge: as agentic AI systems scale — with larger data volumes, multi-step workflows, integrated business logic, and adaptive decision-making — traditional testing and validation frameworks struggle to keep pace.

Metrics like accuracy rates, confidence scores, or response times are useful, but they miss deeper reliability issues. That’s why cross-domain human review remains essential. Legal, product, compliance, risk, and customer experience experts can surface robustness gaps, unintended behaviors, or misalignments that automated benchmarks simply can’t reveal.

Top 7 agentic RAG enterprise use cases: Intelligence at scale

Agentic RAG is to knowledge work what a seasoned consultant is to a basic search engine. Instead of just fetching surface answers, it investigates, reasons, and adapts until the job is done. Let’s look at where this next-gen approach drives the most value across the enterprise.

| Use case | Business value | Why agentic RAG is the new standard |
| --- | --- | --- |
| Customer service | Delivers fast, accurate and context-aware answers. Escalates only when needed, reducing the agent workload. | Remembers past conversations, plans multi-step resolutions, retrieves from multiple sources and adapts to customer intent in real time. |
| Internal knowledge portals | Enables employees to search across FAQs, policies and case histories for instant, reliable answers. | Synthesizes info from diverse repositories, maintains context across follow-ups and automates knowledge gap discovery. |
| Analytics and research | Accelerates insights from large document sets and ongoing data streams for policy, compliance or R&D. | Breaks down complex queries, iteratively refines results, pulls data from many sources and validates outputs in context. |
| Personalized product recommendations | Increases revenue and customer satisfaction by matching needs and context, especially for high-consideration purchases. | Tracks evolving preferences, integrates with external data (e.g., reviews, trends) and reasons through multiple influencing factors. |
| Risk and compliance monitoring | Detects issues proactively across contracts, logs or communications, reducing costly errors or breaches. | Links related documents, uncovers hidden risks through multi-step reasoning and notifies stakeholders only when action is required. |
| Onboarding and training assistants | Reduces ramp-up time for new hires by guiding them through personalized, context-sensitive workflows. | Remembers each learner’s progress, tailors instructions dynamically and adapts to questions or challenges over time. |
| Vendor/supplier management | Improves procurement decisions and vendor relationships through dynamic data synthesis and workflow automation. | Integrates contract data, performance history, market info and automates negotiation workflows with real-time context retention. |


Key metrics to track agentic RAG performance

Agentic RAG may promise autonomy and intelligence, but executive buy-in depends on your ability to measure and communicate tangible impact. The following metrics make its value visible and actionable across the business.

Retrieval precision and recall

Precision and recall remain the foundational metrics for any retrieval-augmented system, and agentic RAG is no exception. They capture how well your AI surfaces the right information at the right time — a non-negotiable baseline for trust, compliance, and operational efficiency.

If your RAG agent returns 10 policy documents, 7 of which are relevant, and the entire knowledge base contains 9 relevant documents:

- Precision: 7/10 = 70%

- Recall: 7/9 ≈ 78%
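The same arithmetic can be computed from document IDs. This is a minimal sketch; the function name and the placeholder IDs are illustrative, and it assumes you have a labeled set of relevant documents for each test query.

```python
def precision_recall(retrieved: set, relevant: set) -> tuple:
    """Precision = hits / retrieved; recall = hits / all relevant documents."""
    hits = len(retrieved & relevant)
    precision = hits / len(retrieved) if retrieved else 0.0
    recall = hits / len(relevant) if relevant else 0.0
    return precision, recall


# Reproduce the example: 10 documents returned, 7 relevant, 9 relevant overall.
relevant = {f"doc{i}" for i in range(1, 10)}                        # doc1..doc9
retrieved = {f"doc{i}" for i in range(1, 8)} | {"x1", "x2", "x3"}   # 7 hits + 3 misses
precision, recall = precision_recall(retrieved, relevant)
print(f"precision={precision:.0%} recall={recall:.0%}")  # precision=70% recall=78%
```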

Response relevance scores

If precision and recall measure what the system retrieves, response relevance measures how well the system answers. High relevance means responses align tightly with user intent and context — reducing follow-up questions, driving user confidence, and ultimately speeding up business workflows.

Across 100 queries, if expert evaluators assign an average score of 8.2/10, your system’s Response Relevance Score is 82%.


Low relevance creates confusion and wasted time. Imagine an executive dashboard assistant surfacing generic KPIs when the request was for risk-adjusted revenue by region — user confidence breaks, and decisions stall.

In agentic RAG, relevance isn’t only about a single answer. Agents often generate multi-step outputs or maintain context over a conversation. Relevance must therefore be measured at the end-to-end workflow level, not just for isolated responses.
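A short sketch of both views follows. The first function reproduces the percentage conversion from the example above. The second scores each workflow by its weakest step, which is only one possible aggregation and an assumption of this sketch (a mean or a final-step score would be equally defensible); all names are illustrative.

```python
from statistics import mean


def relevance_score_pct(scores: list, scale: float = 10.0) -> float:
    """Average evaluator score expressed as a percentage of the rating scale."""
    return round(mean(scores) / scale * 100, 1)


def workflow_relevance_pct(workflows: list, scale: float = 10.0) -> float:
    """Score each workflow by its weakest step, then average across workflows."""
    return relevance_score_pct([min(steps) for steps in workflows], scale)


# Per-response view: evaluator ratings on a 0-10 scale.
print(relevance_score_pct([8, 9, 7, 9, 8]))                # 82.0

# Workflow view: each inner list holds the ratings for one multi-step task.
print(workflow_relevance_pct([[9, 8, 7], [10, 9, 9]]))     # 80.0
```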

Task success rate (a.k.a. completion rate)

If retrieval and relevance are strong, the ultimate test is: did the AI agent actually complete the task the user set out to achieve?

This metric reflects end-to-end effectiveness, not just whether the answer was factually correct or contextually relevant, but whether the workflow was resolved without human intervention (or with minimal handoff).

✅ Why it matters

- Business value: Executives care about impact on outcomes — fewer escalations, faster onboarding, successful risk checks and completed vendor evaluations.

- Agentic RAG lens: Unlike traditional RAG, agentic RAG chains reasoning across multiple steps and tools. Measuring completion shows whether that orchestration worked.

- Real-world example: If a procurement agent retrieves contract terms, validates vendor compliance, and drafts a negotiation email — success is the contract process moving forward, not just accurate retrievals.

Example: Out of 200 onboarding workflows initiated, if 162 are completed without human rescue →

162 ÷ 200 = 81% Task Success Rate

⚠️ Risks if ignored

A system might score high on precision/recall and relevance but still fail at execution. For instance, a contact center agent that retrieves all the correct policies and answers relevantly, but fails to trigger the refund workflow, creates frustration and escalations.

Break this metric down by task type (e.g., “policy lookup,” “contract review,” “customer onboarding”) to pinpoint where agentic RAG excels and where human supervision is still essential. Over time, this also helps forecast ROI by correlating success rate with cost savings and time-to-resolution.
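A per-task-type breakdown can be computed from a simple outcome log. The sketch below assumes each workflow is recorded as a (task type, completed without human rescue) pair; that record shape and the function name are hypothetical.

```python
from collections import defaultdict


def success_rate_by_task(outcomes: list) -> dict:
    """Map task type -> share of workflows completed without human rescue."""
    totals = defaultdict(int)
    successes = defaultdict(int)
    for task_type, completed in outcomes:
        totals[task_type] += 1
        successes[task_type] += int(completed)
    return {task: successes[task] / totals[task] for task in totals}


# The onboarding example: 162 of 200 workflows completed without rescue.
outcomes = ([("customer onboarding", True)] * 162
            + [("customer onboarding", False)] * 38)
print(success_rate_by_task(outcomes))  # {'customer onboarding': 0.81}
```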

Average task completion time/efficiency gains

This metric captures how much faster your agentic RAG system executes tasks compared to human baseline workflows. It’s critical for executive buy-in, as it translates AI performance into cost savings and operational efficiency.

Since agentic RAG often orchestrates multi-step workflows, this metric shows the net effect of retrieval, reasoning, tool usage, and context retention on end-to-end workflow speed.

Imagine a customer service agent that resolves a multi-step ticket (retrieves knowledge, validates compliance, drafts a response, triggers a workflow) in 3 minutes instead of 15. That saves 12 minutes per ticket, which scales dramatically in large enterprises.

You can also measure Efficiency Gain relative to the human baseline:

Efficiency Gain = ((baseline time – agent time) ÷ baseline time) × 100

Example: If the human baseline for a ticket is 15 min and agentic RAG completes it in 3 min

→ Efficiency Gain = ((15 – 3) ÷ 15) × 100 = 80% less time per ticket
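As a quick check of the calculation, here is a minimal sketch. The function names are illustrative, and the 10,000-ticket volume in the second call is a made-up figure used only to show how the per-ticket saving scales.

```python
def efficiency_gain_pct(baseline_minutes: float, agent_minutes: float) -> float:
    """Percentage of handling time saved relative to the human baseline."""
    if baseline_minutes <= 0:
        raise ValueError("baseline_minutes must be positive")
    return (baseline_minutes - agent_minutes) / baseline_minutes * 100


def hours_saved(baseline_minutes: float, agent_minutes: float, tickets: int) -> float:
    """Total hours saved across a given ticket volume."""
    return (baseline_minutes - agent_minutes) * tickets / 60


print(efficiency_gain_pct(15, 3))   # 80.0
print(hours_saved(15, 3, 10_000))   # 2000.0 (hypothetical volume)
```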

User satisfaction and NPS

Direct user feedback (CSAT) and Net Promoter Score (NPS) are vital for understanding real-world value and identifying persistent friction points.

Poor scores signal that your agentic RAG is frustrating employees or clients. Think of a support platform that increases ticket escalations instead of resolving them, damaging your brand’s reputation.

If 200 users rate your RAG-powered chatbot, 130 are promoters, 30 are detractors and 40 are passives:

- NPS = (130/200)*100 – (30/200)*100 = 65 – 15 = 50
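The calculation can be scripted directly from survey data. This sketch uses the standard NPS buckets on a 0 to 10 scale (9 to 10 promoters, 7 to 8 passives, 0 to 6 detractors); the function names and the sample ratings are illustrative.

```python
def net_promoter_score(promoters: int, passives: int, detractors: int) -> float:
    """NPS = % promoters minus % detractors, on a scale from -100 to 100."""
    total = promoters + passives + detractors
    if total == 0:
        raise ValueError("at least one response is required")
    return (promoters - detractors) / total * 100


def nps_from_ratings(ratings: list) -> float:
    """Bucket 0-10 ratings into promoters, passives, and detractors."""
    promoters = sum(r >= 9 for r in ratings)
    detractors = sum(r <= 6 for r in ratings)
    passives = len(ratings) - promoters - detractors
    return net_promoter_score(promoters, passives, detractors)


print(net_promoter_score(130, 40, 30))  # 50.0
```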


Response latency

Response latency measures how quickly agentic RAG delivers answers, which is vital for user experience, especially in high-volume or real-time scenarios.

High latency frustrates users. Imagine a procurement team waiting several seconds for contract insights, delaying negotiations and reducing operational agility.

If 500 answers take a combined 400 seconds to generate:

Average Response Latency = 400/500 = 0.8 seconds per response
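In practice, the per-response durations come from timing each agent call. The sketch below shows one way to collect them with the standard library; `fake_agent` is a hypothetical stand-in for whatever function invokes your agent.

```python
import time
from statistics import mean


def timed_call(fn, *args, **kwargs):
    """Run fn and return (result, elapsed_seconds)."""
    start = time.perf_counter()
    result = fn(*args, **kwargs)
    return result, time.perf_counter() - start


def average_latency(durations: list) -> float:
    """Mean seconds per response across recorded durations."""
    if not durations:
        raise ValueError("no durations recorded")
    return mean(durations)


def fake_agent(question: str) -> str:
    # Hypothetical stand-in for the real agent call.
    return f"answer to: {question}"


durations = []
for question in ["refund policy", "contract terms", "claim status"]:
    _, elapsed = timed_call(fake_agent, question)
    durations.append(elapsed)
print(f"average latency: {average_latency(durations):.6f} s")
```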

Agentic RAG demands more than adoption; unlock value with the right partner

It’s natural to feel a sense of complexity as agentic RAG becomes the new enterprise standard. But moving from experimentation to true competitive advantage requires more than technical know-how. It calls for the right tools, scalable frameworks, and trusted partnerships.

Sprinklr brings a unique edge. With proven experience working with global leaders like Microsoft and Uber, Sprinklr delivers AI agents powered by Unified-CXM data and an automated RAG evaluation framework that ensures your AI systems are not just deployed — they’re continuously measured, tuned, and governed for reliability.

Instead of siloed pilots or disconnected bots, Sprinklr enables you to:

- Deploy enterprise-grade AI agents that retrieve, reason, and act across customer service, compliance, and knowledge workflows.

- Continuously validate agentic RAG outputs with automated evaluation pipelines — tracking precision, relevance, and task success at scale.

- Scale with confidence, thanks to enterprise-grade governance, security, and seamless integration into existing workflows.

Ready to see how AI agents + automated RAG evaluation can future-proof your business? Book a free demo and discover what’s possible.

Frequently Asked Questions

Agentic RAG combines retrieval, reasoning, and tool use to handle complex, multi-step tasks. Unlike standard RAG, it iteratively plans and validates answers, adapts to evolving user needs in real time, and delivers more accurate, complete, and reliable outputs — making enterprise workflows smarter and more efficient.

Human feedback (HITL) helps the system catch edge cases, contextual errors, and hallucinations. Experts can correct rankings, validate reasoning chains, and reinforce ethical and compliance standards. Ongoing feedback ensures Agentic RAG remains precise, trustworthy, and aligned with business goals.

Agentic RAG performs best with diverse, high-quality data from internal sources (knowledge bases, policy documents, CRM records) and external sources (APIs, web data, real-time feeds). Both structured and unstructured data contribute to accurate, context-aware outputs. Regular updates keep answers current and actionable.

Industries with complex customer needs and high compliance requirements benefit most. Examples include financial services, healthcare, retail, tech, legal, energy, and manufacturing. Agentic RAG improves customer support, research, compliance, and multi-step workflows by handling high-volume queries and adapting to novel scenarios.

Enterprises should enforce strict access controls, anonymize sensitive inputs, and monitor data flows. Human oversight, audit trails, and regular compliance reviews ensure regulatory standards are met. Additional safeguards include data minimization, model monitoring, and secure retrieval pipelines.